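DAMA DMBOK2.0 Complete Knowledge Point Summary (Chapters 13-17: Data Quality, Big Data and Data Science, Data Management Maturity Assessment, Data Management Organization and Role Expectations, Data Management and Organizational Change Management)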


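CDMP (Certified Data Management Professional) is the data management professional certification established by DAMA International. It is a comprehensive certification that covers education, work experience, and a professional knowledge exam. This summary covers all knowledge points, exam topics, and past exam questions for the English-language CDMP exam, an advanced professional certification for people working in data management, data governance, digital transformation, and related fields. Because there are many chapters and knowledge points, the summary is released in parts, by chapter, as each part is completed: (Chapters 1-3: Data Management, Data Handling Ethics, Data Governance) (Chapters 4-6: Data Architecture, Data Modeling and Design, Data Storage and Operations) (Chapters 7-9: Data Security, Data Integration and Interoperability, Document and Content Management) (Chapters 10-12: Reference and Master Data, Data Warehousing and Business Intelligence, Metadata Management) (Chapters 13-17: Data Quality, Big Data and Data Science, Data Management Maturity Assessment, Data Management Organization and Role Expectations, Data Management and Organizational Change Management). Tags: certification, CDMP, data management, DMBOK, digital transformation, DAMA, digitalization, data management expert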

Edited 2023-03-29 13:09:58, Guangdong


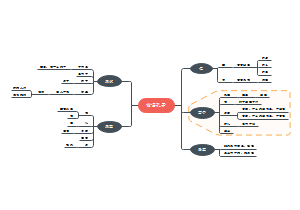

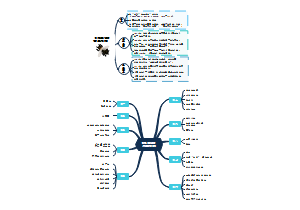

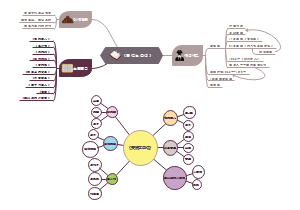

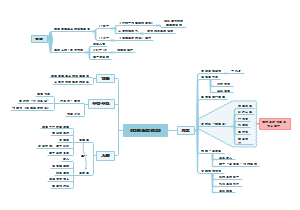

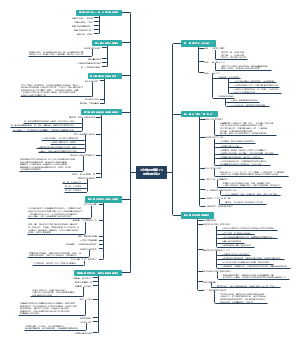

DAMA Knowledge Points: Chapters 13-17

Chapter 13: Data Quality

1. Introduction

1.1. Define

1.1.1. An assumption underlying assertions about the value of data is that the data itself is reliable and trustworthy. In other words, that it is of high quality.

1.1.2. As is the case with Data Governance and with data management as a whole, Data Quality Management is a program, not a project.

It will include both project and maintenance work, along with a commitment to communications and training.

1.1.3. Most importantly, the long-term success of a data quality improvement program depends on getting an organization to change its culture and adopt a quality mindset.

24. Which of these statements is true? A: Data Quality Management is a synonym for Data Governance B: Data Quality Management only addresses structured data C: Data Quality Management is the application of technology to data problems D: Data Quality Management is usually a one-off project E: Data Quality Management is a continuous process. Correct answer: E. Your answer: E. Explanation (13.1): As with Data Governance and data management as a whole, Data Quality Management is not a project but an ongoing effort. It includes both project and maintenance work, along with a commitment to communication and training.

1.2. Business Drivers

1.2.1. The business drivers for establishing a formal Data Quality Management program include:

1. Increasing the value of organizational data and the opportunities to use it

2. Reducing risks and costs associated with poor quality data

3. Improving organizational efficiency and productivity

4. Protecting and enhancing the organization’s reputation

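6. Which of the following is NOT an objective of information quality improvement? A: Agile design B: Improve the reliability of business decisions C: Customer satisfaction D: Everyone takes responsibility for data stewardship E: All. Correct answer: A. Your answer: A. Explanation: A is unrelated. Per 13.1.1 (Business Drivers), the business drivers for establishing a formal Data Quality Management program include: 1) increasing the value of organizational data and the opportunities to use it; 2) reducing risks and costs associated with poor quality data; 3) improving organizational efficiency and productivity; 4) protecting and enhancing the organization's reputation.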

1.2.2. In addition, many direct costs are associated with poor quality data. For example,

1. Inability to invoice correctly

2. Increased customer service calls and decreased ability to resolve them

3. Revenue loss due to missed business opportunities

4. Delay of integration during mergers and acquisitions

5. Increased exposure to fraud

6. Loss due to bad business decisions driven by bad data

7. Loss of business due to lack of good credit standing

1.2.3. Still, high-quality data is not an end in itself; it is a means to organizational success.

1.3. Goals and Principles

1.3.1. Data Quality programs focus on these general goals:

1. Developing a governed approach to make data fit for purpose based on data consumers’ requirements

2. Defining standards and specifications for data quality controls as part of the data lifecycle

3. Defining and implementing processes to measure, monitor, and report on data quality levels

4. Identifying and advocating for opportunities to improve the quality of data, through changes to processes and systems and engaging in activities that measurably improve the quality of data based on data consumer requirements

1.3.2. Data Quality programs should be guided by the following principles:

1. Criticality:

A Data Quality program should focus on the data most critical to the enterprise and its customers. Priorities for improvement should be based on the criticality of the data and on the level of risk if data is not correct.

2. Lifecycle management:

The quality of data should be managed across the data lifecycle, from creation or procurement through disposal. This includes managing data as it moves within and between systems (i.e., each link in the data chain should ensure data output is of high quality).

3. Prevention:

The focus of a Data Quality program should be on preventing data errors and conditions that reduce the usability of data; it should not be focused on simply correcting records.

4. Root cause remediation:

Improving the quality of data goes beyond correcting errors. Problems with the quality of data should be understood and addressed at their root causes, rather than just their symptoms. Because these causes are often related to process or system design, improving data quality often requires changes to processes and the systems that support them.

5. Governance:

Data Governance activities must support the development of high quality data, and Data Quality program activities must support and sustain a governed data environment.

6. Standards-driven:

All stakeholders in the data lifecycle have data quality requirements. To the degree possible, these requirements should be defined in the form of measurable standards and expectations against which the quality of data can be measured.

7. Objective measurement and transparency:

Data quality levels need to be measured objectively and consistently. Measurements and measurement methodology should be shared with stakeholders since they are the arbiters of quality.

8. Embedded in business processes:

Business process owners are responsible for the quality of data produced through their processes. They must enforce data quality standards in their processes.

9. Systematically enforced:

System owners must systematically enforce data quality requirements.

10. Connected to service levels:

Data quality reporting and issues management should be incorporated into Service Level Agreements (SLA).

1.4. Essential Concepts

1.4.1. Data Quality

The term data quality refers both to the characteristics associated with high quality data and to the processes used to measure or improve the quality of data.

Data is of high quality to the degree that it meets the expectations and needs of data consumers, that is, to the degree that it is fit for the purposes to which they want to apply it. It is of low quality if it is not fit for those purposes.

Data quality is thus dependent on context and on the needs of the data consumer.

One of the challenges in managing the quality of data is that expectations related to quality are not always known. Customers may not articulate them. Often, the people managing data do not even ask about these requirements.

This needs to be an ongoing discussion, as requirements change over time as business needs and external forces evolve.

1.4.2. Critical Data

Most organizations have a lot of data, not all of which is of equal importance.

One principle of Data Quality Management is to focus improvement efforts on data that is most important to the organization and its customers. Doing so gives the program scope and focus and enables it to make a direct, measurable impact on business needs.

While specific drivers for criticality will differ by industry, there are common characteristics across organizations. Data can be assessed based on whether it is required by:

1. Regulatory reporting

2. Financial reporting

3. Business policy

4. Ongoing operations

5. Business strategy, especially efforts at competitive differentiation

Banking data

ESG data

1.4.3. Data Quality Dimensions

A Data Quality dimension is a measurable feature or characteristic of data.

35. A Data Quality dimension is: A: a core concept in dimensional modelling B: a valid value in a list C: a measurable feature or characteristic of data D: one aspect of data quality used extensively in data governance E: the value of a particular piece of data. Correct answer: C. Your answer: C. Explanation (13.1.3, item 3, Data Quality Dimensions): a data quality dimension is a measurable feature or characteristic of data.

Many leading thinkers in data quality have published sets of dimensions:

1. The Strong-Wang framework (1996) focuses on data consumers’ perceptions of data. It describes 15 dimensions across four general categories of data quality:

Intrinsic DQ

Accuracy

Objectivity

Believability

Reputation

Contextual DQ

Value-added

Relevancy

Timeliness

Completeness

Appropriate amount of data

Representational DQ

Interpretability

Ease of understanding

Representational consistency

Concise representation

Accessibility DQ

Accessibility

Access security

2. In Data Quality for the Information Age (1996), Thomas Redman formulated a set of data quality dimensions rooted in data structure. Within three general categories (data model, data values, representation), he describes more than two dozen dimensions. They include the following:

Data Model:

Content:

Relevance of data

The ability to obtain the values

Clarity of definitions

Level of detail:

Attribute granularity

Precision of attribute domains

Composition:

Naturalness: The idea that each attribute should have a simple counterpart in the real world and that each attribute should bear on a single fact about the entity

Identify-ability: Each entity should be distinguishable from every other entity

Homogeneity

Minimum necessary redundancy

Consistency:

Semantic consistency of the components of the model

Structure consistency of attributes across entity types

Reaction to change:

Robustness

Flexibility

Data Values:

Accuracy

Completeness

Currency

Consistency

Representation:

Appropriateness

Interpretability

Portability

Format precision

Format flexibility

Ability to represent null values

Efficient use of storage

Physical instances of data being in accord with their formats

3. In Improving Data Warehouse and Business Information Quality (1999), Larry English presents a comprehensive set of dimensions divided into two broad categories: inherent and pragmatic. Inherent characteristics are independent of data use. Pragmatic characteristics are associated with data presentation and are dynamic; their value (quality) can change depending on the uses of data.

Inherent quality characteristics

1. Definitional conformance

2. Completeness of values

3. Validity or business rule conformance

4. Accuracy to a surrogate source

5. Accuracy to reality

6. Precision

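10. A characteristic of information quality that measures the degree of data granularity is known as: A: aggregation B: precision C: scale D: data set E: data set. Correct answer: B. Your answer: D. Explanation: Larry English divides dimensions into two broad categories, inherent and pragmatic characteristics. Inherent characteristics are independent of data use, while pragmatic characteristics are dynamic. The inherent characteristics include 5) accuracy to reality and 6) precision.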

7. Non-duplication

8. Equivalence of redundant or distributed data

9. Concurrency of redundant or distributed data

Pragmatic quality characteristics

Accessibility

Timeliness

Contextual clarity

Usability

Derivation integrity

Rightness or fact completeness

4. In 2013, DAMA UK produced a white paper describing six core dimensions of data quality:

1. Completeness: The proportion of data stored against the potential for 100%.

2. Uniqueness: No entity instance (thing) will be recorded more than once based upon how that thing is identified.

3. Timeliness: The degree to which data represent reality from the required point in time.

4. Validity: Data is valid if it conforms to the syntax (format, type, range) of its definition.

5. Accuracy: The degree to which data correctly describes the ‘real world’ object or event being described.

6. Consistency: The absence of difference, when comparing two or more representations of a thing against a definition.

The white paper also describes other characteristics that have an impact on quality:

7. Usability: Is the data understandable, simple, relevant, accessible, maintainable and at the right level of precision?

8. Timing issues (beyond timeliness itself): Is it stable yet responsive to legitimate change requests?

9. Flexibility: Is the data comparable and compatible with other data? Does it have useful groupings and classifications? Can it be repurposed? Is it easy to manipulate?

10. Confidence: Are Data Governance, Data Protection, and Data Security processes in place? What is the reputation of the data, and is it verified or verifiable?

11. Value: Is there a good cost / benefit case for the data? Is it being optimally used? Does it endanger people’s safety or privacy, or the legal responsibilities of the enterprise? Does it support or contradict the corporate image or the corporate message?
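The DAMA UK definition of consistency (the absence of difference when two or more representations of a thing are compared) can be made concrete as a reconciliation check. The following is a minimal Python sketch, not a DMBOK-prescribed method: the CRM and warehouse extracts and the function name `consistency_report` are hypothetical, and it assumes each representation fits in memory as a dict keyed by identifier.

```python
def consistency_report(source, target):
    """Compare two representations of the same entities, each a dict keyed by identifier."""
    missing = sorted(source.keys() - target.keys())      # in source, absent from target
    unexpected = sorted(target.keys() - source.keys())   # in target, absent from source
    mismatched = sorted(
        key for key in source.keys() & target.keys() if source[key] != target[key]
    )
    total = len(source.keys() | target.keys())
    differences = len(missing) + len(unexpected) + len(mismatched)
    return {
        "missing_in_target": missing,
        "unexpected_in_target": unexpected,
        "mismatched": mismatched,
        # Share of identifiers whose representations agree in both places.
        "consistency_rate": 1.0 if total == 0 else 1 - differences / total,
    }


# Hypothetical extracts of the same customers from two systems.
crm = {"C1": {"name": "Ann", "city": "Leeds"},
       "C2": {"name": "Bo", "city": "York"},
       "C3": {"name": "Cy", "city": "Hull"}}
warehouse = {"C1": {"name": "Ann", "city": "Leeds"},
             "C2": {"name": "Bo", "city": "Bath"}}

report = consistency_report(crm, warehouse)
print(report)
```

Here C3 was never loaded and C2 disagrees on city, so only one of three customers is represented consistently. A real reconciliation would also need agreed matching and comparison rules, which this sketch reduces to exact key and value equality.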

Common Dimensions of Data Quality

1. Accuracy

Accuracy refers to the degree that data correctly represents ‘real-life’ entities.

2. Completeness

Completeness refers to whether all required data is present. Completeness can be measured at the data set, record, or column level.

8. A measure of information quality completeness is: A: an assessment of the percent of records having a non-null value for a specific field B: an assessment of having the right level of granularity in the data values C: that a data value is from the correct domain of values for a field D: the degree of conformance of data values to its domain E: All. Correct answer: A. Your answer: D. Explanation (13.1.3): In 2013, DAMA UK published a white paper describing six core dimensions of data quality, the first being 1) Completeness: the proportion of data stored against the potential for 100%.

3. Consistency

Consistency can refer to ensuring that data values are consistently represented within a data set and between data sets, and consistently associated across data sets.

18. Which of the following is the best example of the data quality dimension of 'consistency'? A: The revenue data in the dataset is always $100 out B: The customer file has 50% duplicated entries C: All the records in the CRM have been accounted for in the data warehouse D: The phone numbers in the customer file do not adhere to the standard format E: The source data for the end of month report arrived 1 week late. Correct answer: C. Your answer: E. Explanation (13.1.3): 6) Consistency: the absence of difference when comparing two or more representations of a thing against a definition.

4. Integrity

Data Integrity (or Coherence) includes ideas associated with completeness, accuracy, and consistency.

5. Reasonability

Reasonability asks whether a data pattern meets expectations.

6. Timeliness

Data currency is the measure of whether data values are the most up-to-date version of the information.

7. Uniqueness / Deduplication

Uniqueness states that no entity exists more than once within the data set.

2. For the product ID in the Product table, what information quality measure would be MOST appropriate? A: Official definition B: Uniqueness C: Validity D: Duplicate occurrences E: Accuracy. Correct answer: B. Your answer: B. Explanation (13.1.3): 2) Uniqueness: no entity instance (thing) will be recorded more than once based upon how that thing is identified.

23. Which of these is a key process in defining data quality business rules? A: Producing data quality reports and dashboards B: Matching data from different data sources C: Producing data management policies D: De-duplicating data records E: Separating data that does not meet business needs from data that does. Correct answer: D. Your answer: E. Explanation: this relates to the uniqueness dimension.

8. Validity

Validity refers to whether data values are consistent with a defined domain of values.

Dimensions include some characteristics that can be measured objectively (completeness, validity, format conformity) and others that depend heavily on context or on subjective interpretation (usability, reliability, reputation).
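The objectively measurable dimensions above reduce to simple counts and ratios. Below is a minimal Python sketch, assuming records are plain dicts; the field names, the status domain, and the helper names `completeness`, `duplicate_keys`, and `validity` are hypothetical examples rather than anything defined by DMBOK.

```python
from collections import Counter


def completeness(records, field):
    """Share of records with a populated (non-null, non-empty) value for `field`."""
    if not records:
        return 1.0  # design choice: an empty set has nothing missing
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def duplicate_keys(records, key):
    """Identifier values that occur more than once, i.e. uniqueness violations."""
    counts = Counter(r.get(key) for r in records if r.get(key) is not None)
    return sorted(k for k, n in counts.items() if n > 1)


def validity(records, field, domain):
    """Share of populated values for `field` that belong to the defined value domain."""
    values = [r[field] for r in records if r.get(field) not in (None, "")]
    if not values:
        return 1.0
    return sum(1 for v in values if v in domain) / len(values)


# Hypothetical product records with one duplicate ID, one typo, and one missing status.
products = [
    {"product_id": "P1", "status": "ACTIVE"},
    {"product_id": "P2", "status": "RETIRED"},
    {"product_id": "P2", "status": "ACTIV"},
    {"product_id": "P4", "status": None},
]
STATUS_DOMAIN = {"ACTIVE", "RETIRED"}

print(completeness(products, "status"))
print(duplicate_keys(products, "product_id"))
print(validity(products, "status", STATUS_DOMAIN))
```

Completeness here is measured at the column level; the same counting works at record or data-set level by changing what is counted. Validity is computed only over populated values, so a missing value lowers completeness without also lowering validity.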

1.4.4. Data Quality and Metadata

Metadata is critical to managing the quality of data. The quality of data is based on how well it meets the requirements of data consumers. Metadata defines what the data represents.

Having a robust process by which data is defined supports the ability of an organization to formalize and document the standards and requirements by which the quality of data can be measured. Data quality is about meeting expectations. Metadata is a primary means of clarifying expectations.

1.4.5. Data Quality ISO Standard

1. ISO 8000-100

Introduction

2. ISO 8000-110

focused on the syntax, semantic encoding, and conformance to the data specification of Master Data

3. ISO 8000-120

Provenance

4. ISO 8000-130

Accuracy

5. ISO 8000-140

Completeness

6. ISO 8000-150

Data Quality Management Architecture

1.4.6. Data Quality Improvement Lifecycle

This work is often done in conjunction with Data Stewards and other stakeholders.

The Shewhart/Deming cycle (Plan-Do-Check-Act)

Plan

The Data Quality team assesses the scope, impact, and priority of known issues, and evaluates alternatives to address them.

Do

The DQ team leads efforts to address the root causes of issues and plans for ongoing monitoring of data. For root causes that are based on non-technical processes, the DQ team can work with process owners to implement changes. For root causes that require technical changes, the DQ team should work with technical teams to ensure that requirements are implemented correctly and that technical changes do not introduce errors.

Check

This stage involves actively monitoring the quality of data as measured against requirements.

Act

This stage covers activities to address and resolve emerging data quality issues.

Continuous improvement is achieved by starting a new cycle. New cycles begin as:

1. Existing measurements fall below thresholds

2. New data sets come under investigation

3. New data quality requirements emerge for existing data sets

4. Business rules, standards, or expectations change
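The first trigger above, existing measurements falling below thresholds, is essentially the Check step feeding the next Plan step. The sketch below is a hypothetical Python illustration that assumes each metric is a score between 0 and 1 with an agreed minimum; the metric names and threshold values are made up.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    metric: str       # hypothetical metric name
    score: float      # measured level, e.g. share of records passing a rule (0.0-1.0)
    threshold: float  # minimum level agreed with data consumers


def check(measurements):
    """Check step: return metrics below threshold, largest shortfall first.

    A non-empty result is one trigger for planning a new improvement cycle.
    """
    breaches = [m for m in measurements if m.score < m.threshold]
    return sorted(breaches, key=lambda m: m.score - m.threshold)


latest = [
    Measurement("customer_email_completeness", 0.97, 0.95),
    Measurement("product_id_uniqueness", 0.90, 1.00),
    Measurement("country_code_validity", 0.93, 0.98),
]
breaches = check(latest)
if breaches:
    print("Start a new cycle for:", [m.metric for m in breaches])
```

Sorting by shortfall is only one way to feed the Plan step; in practice priority should also reflect the criticality of the data and the business impact, as the principles earlier in this chapter describe.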

28. When a data quality team has more issues than they can manage, they should look to: A: hire more people B: implement data validation rules on data entry systems C: initiate data quality improvement cycles, focusing on achieving incremental improvements D: delete any issue that is greater than 6 months old E: establish a program of quick wins targeting easy fixes over a short time period. Correct answer: C. Your answer: C. Explanation (13.2.6): Knowledge gained through the initial assessment forms the basis for specific data quality improvement goals. Improvement can take different forms, from simple remediation (e.g., correcting errors in records) to improvement of root causes. Remediation and improvement plans should account for quick hits (issues that can be addressed immediately at low cost) and for longer-term strategic changes.

5. The acronym PDCA stands for what quality improvement process? A: Precision-Data-Control-Accuracy B: Process-Definition-Clarity-Accessibility C: Project-Derivation-Completeness-Action D: Plan-Do-Check-Act E: Plan-Deploy-Check-Act. Correct answer: D. Your answer: D. Explanation: 13.1.3.

20. Which of the following is NOT a stage in the Shewhart/Deming Cycle that drives the data quality improvement lifecycle? A: Plan B: Check C: Do D: Investigate E: Act. Correct answer: D. Your answer: D. Explanation: none provided.

Getting data right the first time costs less than getting it wrong and fixing it later.

Building quality into the data management processes from the beginning costs less than retrofitting it.

Maintaining high quality data throughout the data lifecycle is less risky than trying to improve quality in an existing process. It also creates a far lower impact on the organization.

Establishing criteria for data quality at the beginning of a process or system build is one sign of a mature Data Management Organization.

1.4.7. Data Quality Business Rule Types

Business rules describe how business should operate internally, in order to be successful and compliant with the outside world.

Data Quality Business Rules describe how data should exist in order to be useful and usable within an organization.

Some common simple business rule types are:

1. Definitional conformance:

Confirm that the same understanding of data definitions is implemented and used properly in processes across the organization.

7. To continually improve the quality of data and information, an organization must consider which one of the following? A: The clarity and shared acceptance of data definitions B: Maximize the effective use and value of data and information assets C: Control the cost of data management D: Understand the information needs of the enterprise and its stakeholders E: All. Correct answer: A. Your answer: D. Explanation (13.1.3): Business rules are commonly implemented in software or by using document templates for data entry. Some common simple business rule types are: 1) Definitional conformance: confirm that the same understanding of data definitions is implemented and used properly in processes across the organization. Confirmation includes algorithmic agreement on calculated fields, including any time or local constraints, and rollup and status interdependence rules.

2. Value presence and record completeness:

Rules defining the conditions under which missing values are acceptable or unacceptable.

3. Format compliance:

One or more patterns specify values assigned to a data element, such as standards for formatting telephone numbers.

4. Value domain membership:

Specify that a data element’s assigned value is included in those enumerated in a defined data value domain.

5. Range conformance:

A data element's assigned value must be within a defined numeric, lexicographic, or time range, such as greater than 0 and less than 100 for a numeric range.

6. Mapping conformance:

Indicating that the value assigned to a data element must correspond to one selected from a value domain that maps to other equivalent corresponding value domain(s).

7. Consistency rules:

Conditional assertions that refer to maintaining a relationship between two (or more) attributes based on the actual values of those attributes.

8. Accuracy verification:

Compare a data value against a corresponding value in a system of record or other verified source.

9. Uniqueness verification:

Rules that specify which entities must have a unique representation and whether one and only one record exists for each represented real world object.

10. Timeliness validation:

Rules that indicate the characteristics associated with expectations for accessibility and availability of data.
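Several of these rule types translate directly into predicates that can be evaluated per record. This is a minimal Python sketch under stated assumptions: the customer fields, the phone format `NNN-NNN-NNNN`, the country domain, and the discount range are hypothetical stand-ins for rules that would come from data consumers' requirements.

```python
import re

# Hypothetical rule parameters; real values come from data consumers' requirements.
PHONE_FORMAT = re.compile(r"\d{3}-\d{3}-\d{4}")  # format compliance: NNN-NNN-NNNN
COUNTRY_DOMAIN = {"CN", "GB", "US"}               # value domain membership

RULES = {
    # Value presence: customer_id must always be populated.
    "value_presence": lambda r: r.get("customer_id") not in (None, ""),
    # Format compliance: phone must match the agreed pattern exactly.
    "format_compliance": lambda r: PHONE_FORMAT.fullmatch(r.get("phone") or "") is not None,
    # Value domain membership: country must be one of the enumerated codes.
    "value_domain_membership": lambda r: r.get("country") in COUNTRY_DOMAIN,
    # Range conformance: discount must be greater than 0 and less than 100.
    "range_conformance": lambda r: r.get("discount") is not None and 0 < r["discount"] < 100,
    # Consistency rule: a CLOSED account must also carry a close_date.
    "consistency": lambda r: r.get("status") != "CLOSED" or r.get("close_date") is not None,
}


def evaluate(record):
    """Return the names of the rules that the record violates."""
    return [name for name, rule in RULES.items() if not rule(record)]


good = {"customer_id": "C1", "phone": "555-010-0199", "country": "GB",
        "discount": 15, "status": "OPEN", "close_date": None}
bad = {"customer_id": "", "phone": "5550100199", "country": "UK",
       "discount": 120, "status": "CLOSED", "close_date": None}
print(evaluate(good))
print(evaluate(bad))
```

Keeping the rules as named data rather than scattered `if` statements makes it easier to report failures per rule type and to update a rule when the business changes it. Accuracy verification and mapping conformance are left out because they need a system of record or a mapping table to compare against.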

1.4.8. Common Causes of Data Quality Issues

1. Issues Caused by Lack of Leadership

Many governance and information asset programs are driven solely by compliance, rather than by the potential value to be derived from data as an asset. A lack of recognition on the part of leadership means a lack of commitment within an organization to managing data as an asset, including managing its quality (Evans and Price, 2012).

Barriers to effective management of data quality include:

Lack of awareness on the part of leadership and staff

Lack of business governance

Lack of leadership and management

Difficulty in justification of improvements

Inappropriate or ineffective instruments to measure value

These barriers have negative effects on customer experience, productivity, morale, organizational effectiveness, revenue, and competitive advantage. They increase costs of running the organization and introduce risks as well.

2. Issues Caused by Data Entry Processes

1. Data entry interface issues:

Poorly designed data entry interfaces can contribute to data quality issues. If a data entry interface does not have edits or controls to prevent incorrect data from being put in the system, data processors are likely to take shortcuts, such as skipping non-mandatory fields and failing to update defaulted fields.

2. List entry placement:

Even simple features of data entry interfaces, such as the order of values within a drop-down list, can contribute to data entry errors.

3. Field overloading:

Some organizations re-use fields over time for different business purposes rather than making changes to the data model and user interface. This practice results in inconsistent and confusing population of the fields.

4. Training issues:

Lack of process knowledge can lead to incorrect data entry, even if controls and edits are in place. If data processors are not aware of the impact of incorrect data or if they are incented for speed, rather than accuracy, they are likely to make choices based on drivers other than the quality of the data.

5. Changes to business processes:

Business processes change over time, and with these changes new business rules and data quality requirements are introduced. However, business rule changes are not always incorporated into systems in a timely manner or comprehensively. Data errors will result if an interface is not upgraded to accommodate new or changed requirements. In addition, data is likely to be impacted unless changes to business rules are propagated throughout the entire system.

6. Inconsistent business process execution:

Data created through processes that are executed inconsistently is likely to be inconsistent. Inconsistent execution may be due to training or documentation issues as well as to changing requirements.

3. Issues Caused by Data Processing Functions

1. Incorrect assumptions about data sources:

Production issues can occur due to errors or changes, inadequate or obsolete system documentation, or inadequate knowledge transfer (for example, when SMEs leave without documenting their knowledge). System consolidation activities, such as those associated with mergers and acquisitions, are often based on limited knowledge about the relationship between systems.

2. Stale business rules:

Over time, business rules change. They should be periodically reviewed and updated. If there is automated measurement of rules, the technical process for measuring rules should also be updated.

3. Changed data structures:

Source systems may change structures without informing downstream consumers (both human and system) or without providing sufficient time to account for the changes. This can result in invalid values or other conditions that prevent data movement and loading, or in more subtle changes that may not be detected immediately.
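Changed data structures can often be caught before loading by comparing the structure a downstream consumer expects with what the source actually delivers. A minimal Python sketch, assuming the schema is available as a column-to-type mapping; the orders feed, its column names, and its types are hypothetical.

```python
def schema_drift(expected, observed):
    """Compare expected column->type mappings with what the source now delivers."""
    return {
        "removed": sorted(expected.keys() - observed.keys()),
        "added": sorted(observed.keys() - expected.keys()),
        "type_changed": sorted(
            col for col in expected.keys() & observed.keys()
            if expected[col] != observed[col]
        ),
    }


# Hypothetical contract for an orders feed versus what actually arrived.
expected = {"order_id": "INTEGER", "amount": "DECIMAL(10,2)", "order_date": "DATE"}
observed = {"order_id": "INTEGER", "amount": "VARCHAR(20)", "order_ts": "TIMESTAMP"}

drift = schema_drift(expected, observed)
if any(drift.values()):
    # Stop the load and alert downstream consumers instead of loading silently.
    print("Schema change detected:", drift)
```

A check like this catches the structural changes that block loading; the subtler changes mentioned above (same column, new meaning or new value distribution) still need profiling and business-rule monitoring.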

4. Issues Caused by System Design

1. Failure to enforce referential integrity: Referential integrity is necessary to ensure high quality data at an application or system level. If referential integrity is not enforced or if validation is switched off (for example, to improve response times), various data quality issues can arise:

1. Duplicate data that breaks uniqueness rules

2. Orphan rows, which can be included in some reports and excluded from others, leading to multiple values for the same calculation

3. Inability to upgrade due to restored or changed referential integrity requirements

4. Inaccurate data due to missing data being assigned default values

2. Failure to enforce uniqueness constraints: Multiple copies of data instances can appear within a table or file that is expected to contain unique instances. If there are insufficient checks for uniqueness of instances, or if the unique constraints are turned off in the database to improve performance, data aggregation results can be overstated.

3. Coding inaccuracies and gaps: If the data mapping or layout is incorrect, or the rules for processing the data are not accurate, the data processed will have data quality issues, ranging from incorrect calculations to data being assigned to or linked to improper fields, keys, or relationships.

4. Data model inaccuracies: If assumptions within the data model are not supported by the actual data, there will be data quality issues ranging from data loss due to field lengths being exceeded by the actual data, to data being assigned to improper IDs or keys.

5. Field overloading: Re-use of fields over time for different purposes, rather than changing the data model or code, can result in confusing sets of values, unclear meaning, and potentially structural problems, like incorrectly assigned keys.

6. Temporal data mismatches: In the absence of a consolidated data dictionary, multiple systems could implement disparate date formats or timings, which in turn lead to data mismatch and data loss when data synchronization takes place between different source systems.

7. Weak Master Data Management: Immature Master Data Management can lead to choosing unreliable sources for data, which can cause data quality issues that are very difficult to find until the assumption that the data source is accurate is disproved.

8. Data duplication: Unnecessary data duplication is often a result of poor data management. There are two main types of undesirable duplication issues:

Single Source – Multiple Local Instances: For example, instances of the same customer in multiple (similar or identical) tables in the same database. Knowing which instance is the most accurate for use can be difficult without system-specific knowledge.

Multiple Sources – Single Instance: Data instances with multiple authoritative sources or systems of record. For example, single customer instances coming from multiple point-of-sale systems. When processing this data for use, there can be duplicate temporary storage areas. Merge rules determine which source has priority over others when processing into permanent production data areas.

34. One of the difficulties when integrating multiple source systems is: A: maintaining documentation describing the data warehouse operation B: modifying the source systems to align to the enterprise data model C: determining valid links or equivalences between data elements D: completing the data architecture on time for the first release E: having a data quality rule applicable to all source systems. Correct answer: C. Your answer: E. Explanation (13.1.3): ② Multiple Sources – Single Instance: data instances with multiple authoritative sources or systems of record, for example, single customer instances coming from multiple point-of-sale systems. When processing this data for use, there can be duplicate temporary storage areas, and merge rules determine which source has priority over others when processing into permanent production data areas.
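Items 1 and 2 above (unenforced referential integrity and unenforced uniqueness constraints) can be demonstrated with Python's built-in `sqlite3` module. The tables and values are hypothetical, and SQLite is used only because it ships with Python. SQLite leaves foreign-key enforcement off unless `PRAGMA foreign_keys = ON` is issued, which conveniently mirrors the "validation switched off" scenario. The connection uses autocommit (`isolation_level=None`) because SQLite ignores that pragma inside an open transaction.

```python
import sqlite3

# Autocommit mode so the PRAGMA statements below take effect immediately.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys by default
conn.execute("CREATE TABLE customer (customer_id TEXT PRIMARY KEY, email TEXT UNIQUE)")
conn.execute(
    "CREATE TABLE orders (order_id TEXT PRIMARY KEY, "
    "customer_id TEXT REFERENCES customer(customer_id), amount REAL)"
)
conn.execute("INSERT INTO customer VALUES ('C1', 'a@example.com')")

# With constraints enforced, bad rows are rejected at write time.
rejected = []
for statement in (
    "INSERT INTO orders VALUES ('O1', 'C9', 10.0)",         # no customer C9: would be an orphan
    "INSERT INTO customer VALUES ('C2', 'a@example.com')",  # duplicate email breaks uniqueness
):
    try:
        conn.execute(statement)
    except sqlite3.IntegrityError as exc:
        rejected.append(str(exc))

# Switching enforcement off (e.g. "to improve response times") lets the orphan in...
conn.execute("PRAGMA foreign_keys = OFF")
conn.execute("INSERT INTO orders VALUES ('O2', 'C9', 25.0)")

# ...and it can then only be found after the fact with an orphan-row query.
orphans = conn.execute(
    "SELECT o.order_id FROM orders AS o "
    "LEFT JOIN customer AS c ON o.customer_id = c.customer_id "
    "WHERE c.customer_id IS NULL"
).fetchall()
print(rejected)
print(orphans)
```

The orphaned order O2 would be counted by a report that sums `orders` alone but dropped by one that joins to `customer`, which is exactly the "multiple values for the same calculation" problem listed above.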

5. Issues Caused by Fixing Issues

Manual data patches are changes made directly on the data in the database, not through the business rules in the application interfaces or processing. These are scripts or manual commands, generally created in a hurry and used to 'fix' data in an emergency such as intentional injection of bad data, a lapse in security, internal fraud, or an external source of business disruption.

Like any untested code, they have a high risk of causing further errors through unintended consequences. These shortcuts are therefore strongly discouraged: they create opportunities for security breaches and can cause longer business disruption than a proper correction would. All changes should go through a governed change management process.

1.4.9. Data Profiling

Data Profiling is a form of data analysis used to inspect data and assess quality. Data profiling uses statistical techniques to discover the true structure, content, and quality of a collection of data (Olson, 2003). A profiling engine produces statistics that analysts can use to identify patterns in data content and structure. For example:

1. Counts of nulls: Identifies whether nulls exist and allows inspection of whether they are allowable

2. Max/Min value: Identifies outliers, such as negative values

3. Max/Min length: Identifies outliers or invalid values for fields with specific length requirements

4. Frequency distribution of values for individual columns: Enables assessment of reasonability (e.g., distribution of country codes for transactions, inspection of frequently or infrequently occurring values, as well as the percentage of the records populated with defaulted values)

5. Data type and format: Identifies the level of non-conformance to format requirements, as well as unexpected formats (e.g., number of decimals, embedded spaces, sample values)

38. In data integration, the goal of data discovery is to A: identify key users and perform high level assessment of data quality B: assign data glossary terms and data formats C: identify potential sources and assure data recovery processes are compliant D: assign data glossary terms and canonical models E: identify potential sources and perform high-level assessment of data quality. Correct answer: E. Your answer: E. Explanation (13.1.3): Analysts must evaluate the results of the profiling engine to determine whether the data conforms to rules and other requirements. A good analyst can use profiling results to confirm known relationships and to uncover hidden characteristics and patterns within and between data sets, including business rules and validity constraints. Profiling is often used as part of data discovery in projects (especially data integration projects) or to assess the current state of data that is targeted for improvement. Profiling results can be used to identify opportunities to improve the quality of both data and Metadata.
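The profiling statistics listed above can be sketched in plain Python for a single column. This is a minimal illustration, not a profiling engine: it assumes a column arrives as a list of values and treats both `None` and empty strings as nulls, which is a choice each organization would need to confirm.

```python
from collections import Counter
from typing import Any, Dict, List, Optional


def profile_column(values: List[Optional[Any]]) -> Dict[str, Any]:
    """Basic profiling statistics for one column, given as a list of values."""
    # Treat None and empty strings as nulls.
    present = [v for v in values if v is not None and v != ""]
    lengths = [len(str(v)) for v in present]
    numbers = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
    return {
        "row_count": len(values),
        "null_count": len(values) - len(present),                    # counts of nulls
        "min_value": min(numbers) if numbers else None,              # e.g. unexpected negatives
        "max_value": max(numbers) if numbers else None,
        "min_length": min(lengths) if lengths else None,             # length outliers
        "max_length": max(lengths) if lengths else None,
        "frequencies": Counter(present).most_common(),               # value distribution
        "type_counts": dict(Counter(type(v).__name__ for v in present)),  # format conformance
    }


stats = profile_column(["US", "DE", None, "US", "", "USA", "us"])
print(stats["null_count"], stats["max_length"], stats["frequencies"][0])
# 2 3 ('US', 2)
```

In this sample, the maximum length of 3 and the lowercase `"us"` in the frequency list are exactly the kind of findings an analyst would take back to stakeholders to confirm or refine a rule.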

1.4.10. Data Quality and Data Processing

1. While the focus of data quality improvement efforts is often on the prevention of errors, data quality can also be improved through some forms of data processing.

2. Data Cleansing

Data Cleansing or Scrubbing transforms data to make it conform to data standards and domain rules. Cleansing includes detecting and correcting data errors to bring the quality of data to an acceptable level.

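40. The term for transforming data to make it conform to data standards and domain rules is A: Data Profiling B: Data parsing C: Data Modeling D: Data analysis E: Data Cleansing. Correct answer: E. Your answer: E. Explanation (13.1.3, item 1): Data cleansing, or scrubbing, transforms data to make it conform to data standards and domain rules. Cleansing includes detecting and correcting data errors to bring the quality of data to an acceptable level.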

Continuously remediating data through cleansing costs money and introduces risk.

In some situations, correcting on an ongoing basis may be necessary, for example when re-processing the data in a midstream system is cheaper than any other alternative.
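A minimal Python sketch of rule-based cleansing against a domain rule. The valid country codes and the correction map are hypothetical; in practice they would come from reference data and from errors found through profiling. Values that no rule can fix are rejected rather than guessed, so they can be routed to a steward and their root cause investigated.

```python
from typing import Optional, Tuple

# Hypothetical domain rule: country must be one of these ISO 3166-1 alpha-2 codes.
VALID_COUNTRIES = {"US", "DE", "FR", "CN"}
# Hypothetical corrections for known, recurring errors (e.g. found through profiling).
CORRECTIONS = {"USA": "US", "U.S.": "US", "GERMANY": "DE", "FRANCE": "FR", "CHINA": "CN"}


def cleanse_country(raw: Optional[str]) -> Tuple[Optional[str], str]:
    """Return (value, status) where status is 'valid', 'corrected' or 'rejected'."""
    if raw is None:
        return None, "rejected"
    value = raw.strip().upper()           # normalize whitespace and case
    if value in VALID_COUNTRIES:
        return value, "valid" if value == raw else "corrected"
    if value in CORRECTIONS:
        return CORRECTIONS[value], "corrected"
    return None, "rejected"               # cannot be fixed by rule; route to a steward
```

Tracking how many values come back `corrected` or `rejected` over time gives a direct measure of whether the need for ongoing cleansing is shrinking.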

3. Data Enhancement

Data enhancement or enrichment is the process of adding attributes to a data set to increase its quality and usability.

39. The term for the process of adding attributes to a data set to increase its quality and usability is A: Data Profiling B: Data parsing C: Data Modeling D: Data analysis E: Data enhancement. Correct answer: E. Your answer: E. Explanation (13.1.3, item 2): Data enhancement, or enrichment, is the process of adding attributes to a data set to increase its quality and usability.

Some enhancements are gained by integrating data sets internal to an organization. External data can also be purchased to enhance organizational data.

Time/Date stamps:

One way to improve data is to document the time and date that data items are created, modified, or retired, which can help to track historical data events. If issues are detected with the data, timestamps can be very valuable in root cause analysis, because they enable analysts to isolate the timeframe of the issue.

Audit data:

Auditing can document data lineage, which is important for historical tracking as well as validation.

Reference vocabularies:

Business-specific terminology, ontologies, and glossaries enhance understanding and control while bringing customized business context.

Contextual information:

Adding context such as location, environment, or access methods, and tagging data for review and analysis.

Geographic information:

Geographic information can be enhanced through address standardization and geocoding, which includes regional coding, municipality, neighborhood mapping, latitude/longitude pairs, or other kinds of location-based data.

Demographic information:

Customer data can be enhanced through demographic information, such as age, marital status, gender, income, or ethnic coding. Business entity data can be associated with annual revenue, number of employees, size of occupied space, etc.

Psychographic information:

Data used to segment the target populations by specific behaviors, habits, or preferences, such as product and brand preferences, organization memberships, leisure activities, commuting transportation style, shopping time preferences, etc.

Valuation information:

Use this kind of enhancement for asset valuation, inventory, and sale.
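A minimal Python sketch of three of the enhancements above: a time/date stamp, audit data recording the source system, and a geographic attribute derived from internal reference data. The postal-code-prefix lookup table and the field names are hypothetical and stand in for whatever reference data an organization actually maintains or purchases.

```python
from datetime import datetime, timezone
from typing import Any, Dict

# Hypothetical internal reference data: first three digits of a postal code -> region.
REGION_BY_POSTAL_PREFIX = {"100": "Beijing", "200": "Shanghai", "510": "Guangzhou"}


def enrich(record: Dict[str, Any], source_system: str) -> Dict[str, Any]:
    """Return a copy of the record with audit and geographic attributes added."""
    enriched = dict(record)                                            # leave the input untouched
    enriched["loaded_at"] = datetime.now(timezone.utc).isoformat()     # time/date stamp
    enriched["source_system"] = source_system                          # audit data for lineage
    postal_code = str(record.get("postal_code") or "")
    enriched["region"] = REGION_BY_POSTAL_PREFIX.get(postal_code[:3])  # geographic information
    return enriched
```

Returning a copy rather than modifying the input keeps the original record available for comparison, which helps when an enhancement itself turns out to be wrong.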

4. Data Parsing and Formatting

Data Parsing is the process of analyzing data using pre-determined rules to define its content or value.

5. Data Transformation and Standardization

During normal processing, data rules trigger and transform the data into a format that is readable by the target architecture.
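Parsing and standardization are often applied together, as in the following minimal Python sketch. Both rules are hypothetical: the phone pattern assumes 10-digit North American numbers with optional punctuation, and the date rule assumes a fixed list of accepted input formats (including a day-first `dd/mm/yyyy`) that a target system expects as ISO 8601 (`YYYY-MM-DD`).

```python
import re
from datetime import datetime
from typing import Dict, List, Optional

# Parsing rule (hypothetical): 10-digit phone number with optional punctuation.
PHONE_PATTERN = re.compile(r"^\D*(\d{3})\D*(\d{3})\D*(\d{4})\D*$")


def parse_phone(raw: str) -> Optional[Dict[str, str]]:
    """Parse a phone string into named components, or None if it breaks the rule."""
    match = PHONE_PATTERN.match(raw)
    if match is None:
        return None
    area, exchange, line = match.groups()
    return {"area_code": area, "exchange": exchange, "line": line}


# Standardization rule (hypothetical): accepted input formats, tried in order.
INPUT_DATE_FORMATS: List[str] = ["%Y-%m-%d", "%d/%m/%Y", "%Y%m%d"]


def standardize_date(raw: str) -> Optional[str]:
    """Return the date as YYYY-MM-DD, or None if no accepted format matches."""
    for fmt in INPUT_DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None
```

The order of `INPUT_DATE_FORMATS` matters: an ambiguous value such as `01/02/2023` is read as 1 February because the day-first format is listed; a month-first source would need its own rule.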

2. Activities

2.1. Define High Quality Data

2.1.1. Ask a set of questions to understand current state and assess organizational readiness for data quality improvement:

1. What do stakeholders mean by ‘high quality data’?

2. What is the impact of low quality data on business operations and strategy?

3. How will higher quality data enable business strategy?

4. What priorities drive the need for data quality improvement?

5. What is the tolerance for poor quality data?

6. What governance is in place to support data quality improvement?

7. What additional governance structures will be needed?

2.1.2. Getting a comprehensive picture of the current state of data quality in an organization requires approaching the question from different perspectives:

1. An understanding of business strategy and goals

2. Interviews with stakeholders to identify pain points, risks, and business drivers

3. Direct assessment of data, through profiling and other forms of analysis

4. Documentation of data dependencies in business processes

5. Documentation of technical architecture and systems support for business processes

2.2. Define a Data Quality Strategy

2.2.1. Data quality priorities must align with business strategy. Adopting or developing a framework and methodology will help guide both strategy and tactics while providing a means to measure progress and impacts.

2.2.2. A framework should include methods to:

1. Understand and prioritize business needs

2. Identify the data critical to meeting business needs

3. Define business rules and data quality standards based on business requirements

4. Assess data against expectations

5. Share findings and get feedback from stakeholders

6. Prioritize and manage issues

7. Identify and prioritize opportunities for improvement

8. Measure, monitor, and report on data quality

9. Manage Metadata produced through data quality processes

10. Integrate data quality controls into business and technical processes

2.3. Identify Critical Data and Business Rules

2.3.1. Not all data is of equal importance. Data Quality Management efforts should focus first on the most important data in the organization.

2.3.2. Data can be prioritized based on factors such as regulatory requirements, financial value, and direct impact on customers.

2.3.3. Often, data quality improvement efforts start with Master Data, which is, by definition, among the most important data in any organization.

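1. What quality process should be done first in order to measure information quality? A: Re-engineer data B: Cleanse data C: Assess data definitions D: Measure information costs E: database security. Correct answer: C. Your answer: C. Explanation (13.2 Activities, 13.2.1 Define High Quality Data): Before launching a Data Quality program, it is beneficial to understand business needs, define terms, identify organizational pain points, and start to build consensus about the drivers and priorities for data quality improvement.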

2.3.4. Having identified the critical data, Data Quality analysts need to identify business rules that describe or imply expectations about the quality characteristics of data.

2.3.5. Defining data quality rules is challenging because most people are not used to thinking about data in terms of rules.

It may be necessary to get at the rules indirectly, by asking stakeholders about the input and output requirements of a business process.

Keep in mind that it is not necessary to know all the rules in order to assess data. Discovery and refinement of rules is an ongoing process.

One of the best ways to get at rules is to share results of assessments.

2.4. Perform an Initial Data Quality Assessment

2.4.1. The goal of an initial data quality assessment is to learn about the data in order to define an actionable plan for improvement. It is usually best to start with a small, focused effort – a basic proof of concept – to demonstrate how the improvement process works. Steps include:

1. Define the goals of the assessment; these will drive the work

2. Identify the data to be assessed; focus should be on a small data set, even a single data element, or a specific data quality problem

3. Identify uses of the data and the consumers of the data

4. Identify known risks with the data to be assessed, including the potential impact of data issues on organizational processes

5. Inspect the data based on known and proposed rules

6. Document levels of non-conformance and types of issues

7. Perform additional, in-depth analysis based on initial findings in order to:

Quantify findings

Prioritize issues based on business impact

Develop hypotheses about root causes of data issues

8. Meet with Data Stewards, SMEs, and data consumers to confirm issues and priorities

9. Use findings as a foundation for planning:

Remediation of issues, ideally at their root causes

Controls and process improvements to prevent issues from recurring

Ongoing controls and reporting

2.5. Identify and Prioritize Potential Improvements

2.5.1. Having proven that the improvement process can work, the next goal is to apply it strategically. Doing so requires identifying and prioritizing potential improvements.

2.5.2. Identification may be accomplished by full-scale data profiling of larger data sets to understand the breadth of existing issues.


2.6. Define Goals for Data Quality Improvement

2.6.1. When issues are found, determine ROI of fixes based on:

1. The criticality (importance ranking) of the data affected

2. Amount of data affected

3. The age of the data

4. Number and type of business processes impacted by the issue

5. Number of customers, clients, vendors, or employees impacted by the issue

6. Risks associated with the issue

7. Costs of remediating root causes

8. Costs of potential work-arounds

2.6.2. In assessing issues, especially those where root causes are identified and technical changes are required, always seek out opportunities to prevent issues from recurring.

2.6.3. Preventing issues generally costs less than correcting them – sometimes orders of magnitude less.

2.7. Develop and Deploy Data Quality Operations

2.7.1. Manage Data Quality Rules

Data quality rules and standards are a critical form of Metadata. To be effective, they need to be managed as Metadata. Rules should be:

1. Documented consistently:

Establish standards and templates for documenting rules so that they have a consistent format and meaning.

2. Defined in terms of Data Quality dimensions:

Consistent application of dimensions will help with the measurement and issue management processes.

3. Tied to business impact:

While data quality dimensions enable understanding of common problems, they are not a goal in-and-of-themselves. Measurements that are not tied to business processes should not be taken.

4. Backed by data analysis:

Data Quality Analysts should not guess at rules. Rules should be tested against actual data.

5. Confirmed by SMEs:

Confirming that rules correctly describe the data often takes knowledge of organizational processes. This knowledge comes when subject matter experts confirm or explain the results of data analysis.

6. Accessible to all data consumers:

All data consumers should have access to documented rules. Ensure that consumers have a means to ask questions about and provide feedback on rules.
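One way to picture a rule managed as Metadata is a small record that carries its documentation alongside an executable check, as in this minimal Python sketch. The template fields (`dimension`, `business_impact`, `confirmed_by`, and so on) and the example rule are hypothetical; they simply mirror the properties listed above.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


@dataclass(frozen=True)
class DataQualityRule:
    """One data quality rule documented as Metadata (a hypothetical template)."""
    rule_id: str
    description: str                 # plain-language statement readable by all consumers
    dimension: str                   # data quality dimension, e.g. "Completeness"
    business_impact: str             # why the rule matters to a business process
    confirmed_by: str                # SME or steward who confirmed the rule
    check: Callable[[Record], bool]  # True when a record conforms


def conformance_rate(rule: DataQualityRule, records: List[Record]) -> float:
    """Test a rule against actual data: the share of records that conform."""
    if not records:
        raise ValueError("no records to assess")
    return sum(1 for r in records if rule.check(r)) / len(records)


EMAIL_PRESENT = DataQualityRule(
    rule_id="CUST-001",
    description="Every customer record has an email address.",
    dimension="Completeness",
    business_impact="Order confirmations cannot be sent without an email address.",
    confirmed_by="Customer data steward",
    check=lambda r: bool(r.get("email")),
)
```

Keeping the description and the check in one object makes it harder for the documented rule and the implemented rule to drift apart.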

2.7.2. Measure and Monitor Data Quality

There are two equally important reasons to implement operational data quality measurements:

To inform data consumers about levels of quality

To manage risk that change may be introduced through changes to business or technical processes

4. A metric for data quality accuracy is A: percent correct B: percent complete C: percent unique D: percent defined E: all. Correct answer: A. Your answer: A. Explanation: 13.2.7

Provide continuous monitoring by incorporating control and measurement processes into the information processing flow. Automated monitoring of conformance to data quality rules can be done in-stream or through a batch process. Measurements can be taken at three levels of granularity: the data element value, the data instance or record, or the data set.

19. Data quality measurements can be taken at three levels of granularity. They are: A: Fine data, coarse data, and rough data B: Departmental data, regional data, and enterprise data C: Data element value, data instance or record, and data set D: Person data, location data, and product data E: Historical data, current data, and future dated data. Correct answer: C. Your answer: C. Explanation (13.2.7): Provide continuous monitoring by incorporating control and measurement processes into the information processing flow. Conformance to data quality rules can be monitored automatically, in-stream or in batch, and measured at three levels of granularity: data element value, data instance or record, and data set.
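The three levels of granularity can be illustrated with a small Python sketch. The required fields and the country domain are hypothetical; the element and record checks could run in-stream on each incoming record, while the data set score would typically be computed in batch.

```python
from typing import Any, Dict, List

REQUIRED_FIELDS = ("customer_id", "email", "country")  # hypothetical rule set
VALID_COUNTRIES = {"US", "DE", "CN"}                    # hypothetical domain


def element_ok(field: str, value: Any) -> bool:
    """Data element value level: does one value satisfy its rule?"""
    if value in (None, ""):
        return False
    if field == "country":
        return value in VALID_COUNTRIES
    return True


def record_ok(record: Dict[str, Any]) -> bool:
    """Data instance/record level: do all required elements of one record conform?"""
    return all(element_ok(f, record.get(f)) for f in REQUIRED_FIELDS)


def dataset_score(records: List[Dict[str, Any]]) -> float:
    """Data set level: percentage of records that fully conform."""
    if not records:
        raise ValueError("cannot score an empty data set")
    return 100.0 * sum(record_ok(r) for r in records) / len(records)
```

Building the data set score from the record check, and the record check from the element check, keeps the three levels consistent with one another.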

2.7.3. Develop Operational Procedures for Managing Data Issues

1. Diagnosing issues

The objective is to review the symptoms of the data quality incident, trace the lineage of the data in question, identify the problem and where it originated, and pinpoint potential root causes of the problem.

The work of root cause analysis requires input from technical and business SMEs. Success requires cross-functional collaboration.

14. The objectives of a good issue resolution process include all of the following EXCEPT A: address problems, not symptoms B: identify who is causing the problem C: recognize and respond when issues are identified D: provide a forum for issues to be raised E: all. Correct answer: B. Your answer: D. Explanation (13.1.3): The common approach to data quality improvement, shown in Figure 13-3, is a version of the Deming cycle. Based on the scientific method, the Deming cycle is a problem-solving model known as 'plan-do-check-act'. Improvement comes through a defined set of steps. The condition of data must be measured against standards and, if it does not meet them, the root causes of the discrepancy must be identified and remediated.

2. Formulating options for remediation:

1. Addressing non-technical root causes such as lack of training, lack of leadership support, unclear accountability and ownership, etc.

2. Modification of the systems to eliminate technical root causes

3. Developing controls to prevent the issue

4. Introducing additional inspection and monitoring

5. Directly correcting flawed data

6. Taking no action based on the cost and impact of correction versus the value of the data correction

3. Resolving issues:

Having identified options for resolving the issue, the Data Quality team must confer with the business data owners to determine the best way to resolve the issue. These procedures should detail how the analysts:

1. Assess the relative costs and merits of the alternatives

2. Recommend one of the planned alternatives

3. Provide a plan for developing and implementing the resolution

4. Implement the resolution

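4. To support effective tracking:

1. Standardize data quality issues and activities:

Since the terms used to describe data issues may vary across lines of business, it is valuable to define a standard vocabulary for the concepts used.

2. Provide an assignment process for data issues:

Drive the assignment process within the incident tracking system by suggesting those individuals with specific areas of expertise.

3. Manage issue escalation procedures:

Data quality issue handling requires a well-defined system of escalation based on the impact, duration, or urgency of an issue.

4. Manage data quality resolution workflow:

The incident tracking system can support workflow management to track progress with issues diagnosis and resolution.

31. Which of the following is NOT required to effectively track data quality incidents? A: A standard vocabulary for classifying data quality issues B: A well-defined system of escalation based on the impact, duration, or urgency of an issue C: An effective service level agreement with defined rewards and penalties D: An operational workflow that ensures effective resolution E: An assignment process to appropriate individuals and teams. Correct answer: C. Your answer: A. Explanation (13.2.7): Decisions made during the issue management process should be recorded in an incident tracking system. When that system is well managed, it can provide valuable insight into the causes and costs of data issues, including descriptions of the issues and their root causes, options for remediation, and the decisions on how to resolve them. The incident tracking system collects performance data on issue resolution, work assignments, volume of issues, frequency of occurrence, and the time required to respond, diagnose, plan a solution, and resolve issues. These metrics provide valuable insight into the effectiveness of the current workflow and into system and resource utilization; they are important management data points that can drive continuous, actionable improvement of data quality control.

32. The steps followed in managing data issues include A: Read, guess, code, release B: Standardization, Allocation, Assignment, and Correction C: Escalation, Review, Allocation, and Completion D: Standardization, Assignment, Escalation, and Completion E: Standardization, Explanation, Ownership, and Completion. Correct answer: D. Your answer: D. Explanation (13.2.7): Decisions made during the issue management process should be recorded in an incident tracking system. When that system is well managed, it can provide valuable insight into the causes and costs of data issues, including descriptions of the issues and their root causes, options for remediation, and the decisions on how to resolve them. The incident tracking system collects performance data on issue resolution, work assignments, volume of issues, frequency of occurrence, and the time required to respond, diagnose, plan a solution, and resolve issues. These metrics provide valuable insight into the effectiveness of the current workflow and into system and resource utilization; they are important management data points that can drive continuous, actionable improvement of data quality control.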
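The four tracking practices (standard vocabulary, assignment, escalation, resolution workflow) can be sketched as a tiny Python model. Everything here is hypothetical: the issue types, the team names, the 1 to 5 impact scale, and the three-day escalation limit are placeholders for values an organization would define in its own incident tracking system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from enum import Enum
from typing import Dict, List, Optional


class IssueType(Enum):            # standard vocabulary (hypothetical values)
    MISSING_VALUE = "missing value"
    INVALID_FORMAT = "invalid format"
    DUPLICATE = "duplicate"


class Status(Enum):               # resolution workflow states
    OPEN = 1
    ASSIGNED = 2
    ESCALATED = 3
    RESOLVED = 4


# Hypothetical assignment map: issue type -> team with that expertise.
EXPERTS: Dict[IssueType, str] = {
    IssueType.MISSING_VALUE: "source-system team",
    IssueType.INVALID_FORMAT: "integration team",
    IssueType.DUPLICATE: "MDM team",
}


@dataclass
class DataIssue:
    issue_type: IssueType
    impact: int                   # 1 (low) to 5 (high), hypothetical scale
    opened_at: datetime
    status: Status = Status.OPEN
    assignee: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def assign(self) -> None:
        """Assign the issue to the team with matching expertise."""
        self.assignee = EXPERTS[self.issue_type]
        self.status = Status.ASSIGNED
        self.history.append(f"assigned to {self.assignee}")

    def maybe_escalate(self, now: datetime, max_age: timedelta = timedelta(days=3)) -> bool:
        """Escalate unresolved issues that are high-impact or have been open too long."""
        if self.status in (Status.RESOLVED, Status.ESCALATED):
            return False
        if self.impact >= 4 or now - self.opened_at > max_age:
            self.status = Status.ESCALATED
            self.history.append("escalated")
            return True
        return False

    def resolve(self, note: str) -> None:
        """Close the issue and record how it was resolved."""
        self.status = Status.RESOLVED
        self.history.append(f"resolved: {note}")
```

The `history` list stands in for the decision log that the tracking system should keep, which is what later makes it possible to analyze causes, costs, and resolution times.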

2.7.4. Establish Data Quality Service Level Agreements

Operational data quality control defined in a data quality SLA includes:

1. Data elements covered by the agreement

2. Business impacts associated with data flaws

3. Data quality dimensions associated with each data element

4. Expectations for quality for each data element for each of the identified dimensions in each application or system in the data value chain

5. Methods for measuring against those expectations

6. Acceptability threshold for each measurement

7. Steward(s) to be notified in case the acceptability threshold is not met

8. Timelines and deadlines for expected resolution or remediation of the issue

9. Escalation strategy, and possible rewards and penalties

The data quality SLA also defines the roles and responsibilities associated with performance of operational data quality procedures.
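A few of the SLA elements above (data element, dimension, acceptability threshold, steward to notify, resolution timeline) can be sketched as a Python check. The field names and the message format are hypothetical; a real SLA would also cover escalation strategy and roles, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SlaExpectation:
    data_element: str
    dimension: str
    threshold_pct: float      # acceptability threshold
    steward: str              # notified when the threshold is not met
    resolution_hours: int     # expected time to resolve or remediate


def evaluate_sla(expectation: SlaExpectation, measured_pct: float) -> List[str]:
    """Compare a measurement with its acceptability threshold; return notifications."""
    if measured_pct >= expectation.threshold_pct:
        return []
    return [
        f"Notify {expectation.steward}: {expectation.data_element} {expectation.dimension} "
        f"at {measured_pct:.1f}% (threshold {expectation.threshold_pct:.1f}%); "
        f"resolve within {expectation.resolution_hours}h"
    ]
```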

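37. A Data Quality Service Level Agreement (SLA) would normally include which of these? A: breakdown of the costs of data quality improvement B: An enterprise data model C: Detailed technical specifications for data transfer D: A Business Case for data improvement E: Respective roles and responsibilities for data quality. Correct answer: E. Your answer: E. Explanation (13.2.7, item 4, Establish Data Quality Service Level Agreements): A data quality SLA specifies an organization's expectations for response and remediation of data quality issues in each system. Its contents include (9) escalation strategy, and possible rewards and penalties. The data quality SLA also defines the roles and responsibilities associated with performance of operational data quality procedures.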

2.7.5. Develop Data Quality Reporting

1. Data quality scorecard, which provides a high-level view of the scores associated with various metrics, reported to different levels of the organization within established thresholds

13. The following are considerations for a good data quality scorecard for a data governance program EXCEPT A: data profiling aggregated metrics B: technical and non-technical metrics C: different views of the scorecard for different audiences D: data profiling non-aggregated metrics E: all. Correct answer: D. Your answer: C. Explanation (13.2.7): Options A and D conflict; a high-level view requires aggregated values. (1) Data quality scorecard: provides a high-level view of the scores associated with various metrics, reported to different levels of the organization within established thresholds.

2. Data quality trends, which show over time how the quality of data is measured, and whether trending is up or down

3. SLA Metrics, such as whether operational data quality staff diagnose and respond to data quality incidents in a timely manner

4. Data quality issue management, which monitors the status of issues and resolutions

5. Conformance of the Data Quality team to governance policies

6. Conformance of IT and business teams to Data Quality policies

7. Positive effects of improvement projects

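22. Which of these is NOT a typical activity in Data Quality Management? A: Enterprise Data Modelling B: identifying data problems and issues C: creating inspection and monitoring processes D: Analyzing data quality E: Defining business requirements and business rules. Correct answer: A. Your answer: A. Explanation: The activities in 13.2 are 13.2.1 Define High Quality Data; 13.2.2 Define a Data Quality Strategy; 13.2.3 Identify Critical Data and Business Rules; 13.2.4 Perform an Initial Data Quality Assessment; 13.2.5 Identify and Prioritize Potential Improvements; 13.2.6 Define Goals for Data Quality Improvement; 13.2.7 Develop and Deploy Data Quality Operations.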

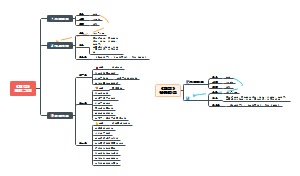

3. Tools

3.1. Data Profiling Tools

3.1.1. Data profiling tools produce high-level statistics that enable analysts to identify patterns in data and perform initial assessment of quality characteristics.

3.2. Data Querying Tools

3.2.1. Data Quality team members also need to query data more deeply to answer questions raised by profiling results and find patterns that provide insight into root causes of data issues.

3.3. Modeling and ETL Tools

3.3.1. The tools used to model data and create ETL processes have a direct impact on the quality of data.

3.4. Data Quality Rule Templates

3.4.1. Rule templates allow analysts to capture expectations for data. Templates also help bridge the communications gap between business and technical teams.

3.5. Metadata Repositories

3.5.1. Defining data quality requires Metadata, and definitions of high quality data are a valuable kind of Metadata.

4. Techniques

4.1. Preventive Actions

4.1.1. The best way to create high quality data is to prevent poor quality data from entering an organization. Preventive actions stop known errors from occurring. Inspecting data after it is in production will not improve its quality.

4.1.2. Approaches include:

Establish data entry controls:

Create data entry rules that prevent invalid or inaccurate data from entering a system.

15. The following are deliverables in a data governance program EXCEPT A: the metrics for data quality scorecard B: data input controls C: indexing of data management techniques D: meta-data standards E: Data strategy. Correct answer: B. Your answer: C. Explanation (13.4.1): B is a data quality consideration. (1) Establish data entry controls: create data entry rules that prevent invalid or inaccurate data from entering a system.

Train data producers:

Ensure staff in upstream systems understand the impact of their data on downstream users.

Define and enforce rules:

Create a ‘data firewall,’ which has a table with all the business data quality rules used to check if the quality of data is good, before being used in an application such as a data warehouse.

Demand high quality data from data suppliers:

Examine an external data provider’s processes to check their structures, definitions, and data source(s) and data provenance.

Implement Data Governance and Stewardship:

Ensure roles and responsibilities are defined that describe and enforce rules of engagement, decision rights, and accountabilities for effective management of data and information assets (McGilvray, 2008).

Institute formal change control:

Ensure all changes to stored data are defined and tested before being implemented.
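The ‘data firewall’ idea, a table of business rules checked before data is used in an application such as a data warehouse, can be sketched in Python as follows. The three rules and the order fields are hypothetical; records that fail any rule are quarantined with the names of the rules they broke, rather than loaded.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]

# Hypothetical rule table: (rule name, predicate that must hold for a record to pass).
FIREWALL_RULES: List[Tuple[str, Callable[[Record], bool]]] = [
    ("order_id present", lambda r: r.get("order_id") is not None),
    ("quantity positive", lambda r: isinstance(r.get("quantity"), int) and r["quantity"] > 0),
    ("currency known", lambda r: r.get("currency") in {"USD", "EUR", "CNY"}),
]


def data_firewall(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Split records into those allowed into the warehouse and those quarantined with reasons."""
    accepted: List[Record] = []
    quarantined: List[Tuple[Record, List[str]]] = []
    for record in records:
        failures = [name for name, rule in FIREWALL_RULES if not rule(record)]
        if failures:
            quarantined.append((record, failures))
        else:
            accepted.append(record)
    return accepted, quarantined
```

Because the rules live in one table, the same checks can be shared across loads as a reusable module instead of being re-coded in each pipeline.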

4.2. Corrective Actions

4.2.1. Data quality issues should be addressed systemically and at their root causes to minimize the costs and risks of corrective actions.

4.2.2. ‘Solve the problem where it happens’ is the best practice in Data Quality Management.

4.2.3. Perform data correction in three general ways:

Automated correction:

Automated correction techniques include rule-based standardization, normalization, and correction. The modified values are obtained or generated and committed without manual intervention.

Manually-directed correction:

Use automated tools to remediate and correct data but require manual review before committing the corrections to persistent storage.

21. What is Manual Directed Data Quality Correction? A: Teams of data correctors supervised by data subject matter experts B: the automation of all data cleanse and correction routines C: The use of spreadsheets to manually inspect and correct data D: Using a data quality improvement manual to guide data cleanse and correction activities E: The use of automated cleanse correction tools with results manually checked before committing outputs. Correct answer: E. Your answer: D. Explanation (13.4.2): Manually-directed correction.

Manual correction:

Sometimes manual correction is the only option in the absence of tools or automation, or if it is determined that the change is better handled through human oversight.
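One common way to combine automated and manually-directed correction is to score each proposed correction and commit only high-confidence ones automatically, as in this minimal Python sketch. The `confidence` score is assumed to come from whatever correction tool is in use, and the 0.95 cut-off is a hypothetical default that stewards would tune.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Correction:
    record_id: int
    field: str
    old_value: str
    new_value: str
    confidence: float   # 0.0 to 1.0, assumed to be produced by the correction tool


def route_corrections(
    proposals: List[Correction], auto_threshold: float = 0.95
) -> Tuple[List[Correction], List[Correction]]:
    """Split proposals: commit automatically at or above the threshold, else queue for review."""
    auto_commit = [c for c in proposals if c.confidence >= auto_threshold]
    review_queue = [c for c in proposals if c.confidence < auto_threshold]
    return auto_commit, review_queue
```

Setting `auto_threshold` above 1.0 turns this into purely manually-directed correction, since every proposal then goes to the review queue.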

4.3. Quality Check and Audit Code Modules

4.3.1. Create shareable, linkable, and re-usable code modules that execute repeated data quality checks and audit processes that developers can get from a library.

4.4. Effective Data Quality Metrics

4.4.1. A critical component of managing data quality is developing metrics that inform data consumers about quality characteristics that are important to their uses of data.

4.4.2. DQ analysts should account for these characteristics:

1. Measurability:

A data quality metric must be measurable: it needs to be something that can be counted.

2. Business relevance:

While many things are measurable, not all translate into useful metrics. Measurements need to be relevant to data consumers.

3. Acceptability:

Determine whether data meets business expectations based on specified acceptability thresholds.

4. Accountability / Stewardship:

Metrics should be understood and approved by key stakeholders. The business data owner is accountable, while a data steward takes appropriate corrective action.

5. Controllability:

A metric should reflect a controllable aspect of the business. In other words, if the metric is out of range, it should trigger action to improve the data. If there is no way to respond, then the metric is probably not useful.

6. Trending:

Metrics enable an organization to measure data quality improvement over time.

4.5. Statistical Process Control

4.5.1. Statistical Process Control (SPC) is a method to manage processes by analyzing measurements of variation in process inputs, outputs, or steps.

4.5.2. SPC is based on the assumption that when a process with consistent inputs is executed consistently, it will produce consistent outputs.

4.5.3. The primary tool used for SPC is the control chart (Figure 95), which is a time series graph that includes a central line for the average (the measure of central tendency) and depicts calculated upper and lower control limits (variability around a central value). In a stable process, measurement results outside the control limits indicate a special cause.

4.5.4. SPC measures the predictability of process outcomes by identifying variation within a process. Processes have variation of two types:

Common Causes that are inherent in the process

Special Causes that are unpredictable or intermittent

4.5.5. SPC is used for control, detection, and improvement. The first step is to measure the process in order to identify and eliminate special causes. This activity establishes the control state of the process. Next is to put in place measurements to detect unexpected variation as soon as it is detectable.
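A minimal Python sketch of a control chart applied to a data quality metric, for example a daily "percent complete" score. It assumes the baseline measurements come from a period already judged to be in control (special causes removed), and it uses the mean plus or minus three sample standard deviations as the control limits. This is a simplification: textbook individuals (XmR) charts usually estimate variability from the average moving range instead.

```python
from statistics import mean, stdev
from typing import List, Tuple


def control_limits(baseline: List[float], sigmas: float = 3.0) -> Tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) from an in-control baseline."""
    center = mean(baseline)
    spread = stdev(baseline)          # sample standard deviation; needs at least 2 points
    return center - sigmas * spread, center, center + sigmas * spread


def special_cause_points(measurements: List[float], limits: Tuple[float, float, float]) -> List[int]:
    """Indexes of measurements outside the control limits (candidate special causes)."""
    lower, _, upper = limits
    return [i for i, m in enumerate(measurements) if m < lower or m > upper]


baseline = [98.0, 98.5, 97.5, 98.0, 98.5, 97.5]   # hypothetical daily percent complete
limits = control_limits(baseline)
print(special_cause_points([98.2, 97.9, 95.0, 98.1], limits))
# [2]
```

With this baseline the limits are roughly 96.66 and 99.34, so only the 95.0 reading is flagged for investigation; the other values reflect common-cause variation.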

4.6. Root Cause Analysis

4.6.1. A root cause of a problem is a factor that, if eliminated, would remove the problem itself. Root cause analysis is a process of understanding factors that contribute to problems and the ways they contribute. Its purpose is to identify underlying conditions that, if eliminated, would mean problems would disappear.

4.6.2. Common techniques for root cause analysis include Pareto analysis (the 80/20 rule), fishbone diagram analysis, track and trace, process analysis, and the Five Whys (McGilvray, 2008).
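Pareto analysis, the first technique listed, can be sketched in a few lines of Python. The input is assumed to be one root-cause category per recorded incident (for example, exported from an incident tracking system); the category names and the 80% cut-off are illustrative.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def pareto(causes: Iterable[str], cutoff: float = 0.8) -> List[Tuple[str, int, float]]:
    """Return the 'vital few' causes as (cause, count, cumulative share) up to the cutoff."""
    counts = Counter(causes).most_common()
    total = sum(n for _, n in counts)
    result: List[Tuple[str, int, float]] = []
    cumulative = 0
    for cause, n in counts:
        cumulative += n
        result.append((cause, n, cumulative / total))
        if cumulative / total >= cutoff:
            break
    return result


incidents = (["manual entry"] * 50 + ["interface mapping"] * 30
             + ["late reference data"] * 12 + ["other"] * 8)
print([cause for cause, _, _ in pareto(incidents)])
# ['manual entry', 'interface mapping']
```

The result points remediation effort at the two categories behind 80% of incidents; a fishbone diagram or the Five Whys would then be used to dig into each of them.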

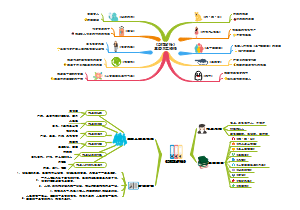

5. Implementation Guidelines

5.1. Most Data Quality program implementations need to plan for:

5.1.1. Metrics on the value of data and the cost of poor quality data:

One way to raise organizational awareness of the need for Data Quality Management is through metrics that describe the value of data and the return on investment from improvements.

5.1.2. Operating model for IT/Business interactions:

Data Custodians from IT understand where and how the data is stored, and so they are well placed to translate definitions of data quality into queries or code that identify specific records that do not comply.

12. Data quality stewardship begins by A: stating that data is poorly defined, misinterpreted, and inconsistently used B: proving that needed data can't be found in a timely manner C: creating awareness that data quality is a business issue that can't simply be dismissed as an IT problem D: showing the existence of and demonstrating inconsistent data sources on multiple databases with conflicting data E: none. Correct answer: C. Your answer: C. Explanation: Effective data management involves a set of complex, interrelated processes that enable an organization to use its data to achieve its strategic goals. Data management capabilities include designing data models for various applications, and storing and accessing data securely

5.1.3. Changes in how projects are executed:

Project oversight must ensure project funding includes steps related to data quality. It is prudent to make sure issues are identified early and to build data quality expectations upfront in projects.

5.1.4. Changes to business processes:

The Data Quality team needs to be able to assess and recommend changes to non-technical (as well as technical) processes that impact the quality of data.

5.1.5. Funding for remediation and improvement projects:

Data will not fix itself. The costs and benefits of remediation and improvement projects should be documented so that work on improving data can be prioritized.

5.1.6. Funding for Data Quality Operations:

Sustaining data quality requires ongoing operations to monitor data quality, report on findings, and continue to manage issues as they are discovered.

5.2. Readiness Assessment / Risk Assessment

5.2.1. Management commitment to managing data as a strategic asset

5.2.2. The organization’s current understanding of the quality of its data

5.2.3. The actual state of the data: Finding an objective way to describe the condition of data that is causing pain points is the first step to improving the data.

5.2.4. Risks associated with data creation, processing, or use

5.2.5. Cultural and technical readiness for scalable data quality monitoring

5.3. Organization and Cultural Change

5.3.1. The quality of data will not be improved through a collection of tools and concepts, but through a mindset that helps employees and stakeholders to act while always thinking of the quality of data and what the business and their customers need.

5.3.2. All employees must act responsibly and raise data quality issues. Data quality is not just the responsibility of a DQ team or IT group.

5.3.3. Just as the employees need to understand the cost to acquire a new customer or retain an existing customer, they also need to know the organizational costs of poor quality data, as well as the conditions that cause data to be of poor quality.

5.3.4. Employees need to think and act differently if they are to produce better quality data and manage data in ways that ensure quality. This requires training and reinforcement. Training should focus on:

1. Common causes of data problems

2. Relationships within the organization’s data ecosystem and why improving data quality requires an enterprise approach

3. Consequences of poor quality data

4. Necessity for ongoing improvement (why improvement is not a one-time thing)

5. Becoming ‘data-lingual 数据语言化’, about to articulate the impact of data on organizational strategy and success,regulatory reporting, customer satisfaction

6. 9. Information quality training topics for business information/data stewards should include all of the following EXCEPT A:systems development principles. B:roles and responsibilities. C:data security and privacy D:information value chain E:All 正确答案:A 你的答案:A 解析:13.5.2:最终,如果要让员工生成更高质量的数据并以确保质量的方式管理数据,他们需要以不同的方式思考和行动,这需要培训和强化训练。培训应着重于:1)导致数据问题的常见原因。2)组织数据生态系统中的关系以及为什么提高数据质量需要全局方法。3)糟糕数据造成的后果。4)持续改进的必要性(为什么改进不是一次性的)。5)要“数据语言化”,阐述数据对组织战略与成功、监管报告和客户满意度的影响。培训还应包括对任何过程变更的介绍,以及有关变更如何提高数据质量的声明。

7. 11. Information quality training topics for business management and process owners should include all of the following EXCEPT: A: policies and principles B: value chain C: relevance decay D: responsibilities E: leadership. Correct answer: C. Your answer: C. Explanation: C is unrelated.

6. Data Quality and Data Governance

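6.1. Data quality issues are often the reason for establishing enterprise-wide data governance. Incorporating data quality efforts into the overall governance effort lets the Data Quality program team work with a range of stakeholders and enablers, including: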

6.1.1. Risk and security personnel who can help identify data-related organizational vulnerabilities

6.1.2. Business process engineering and training staff who can help teams implement process improvements

6.1.3. Business and operational data stewards, and data owners who can identify critical data, define standards and quality expectations, and prioritize remediation of data issues

6.2. A Governance Organization can accelerate the work of a Data Quality program by:

1. Setting priorities

2. Identifying and coordinating access to those who should be involved in various data quality-related decisions and activities

3. Developing and maintaining standards for data quality

4. Reporting relevant measurements of enterprise-wide data quality

5. Providing guidance that facilitates staff involvement

6. Establishing communications mechanisms for knowledge-sharing

7. Developing and applying data quality and compliance policies

8. Monitoring and reporting on performance

9. Sharing data quality inspection results to build awareness, identify opportunities for improvements, and build consensus for improvements

10. Resolving variations and conflicts; providing direction

6.3. Data Quality Policy

6.3.1. Each policy should include:

1. Purpose, scope and applicability of the policy

2. Definitions of terms

3. Responsibilities of the Data Quality program

4. Responsibilities of other stakeholders

5. Reporting

6. Implementation of the policy, including links to risk, preventative measures, compliance, data protection, and data security

6.4. Metrics

1. High-level categories of data quality metrics include:

Return on Investment: Statements on cost of improvement efforts vs. the benefits of improved data quality

Levels of quality: Measurements of the number and percentage of errors or requirement violations within a data set or across data sets

Data Quality trends: Quality improvement over time (i.e., a trend) against thresholds and targets, or quality incidents per period

Data issue management metrics:

Counts of issues by dimensions of data quality

Issues per business function and their statuses (resolved, outstanding, escalated)

Issues by priority and severity

Time to resolve issues

Conformance to service levels: Organizational units involved and responsible staff, project interventions for data quality assessments, overall process conformance

Data Quality plan rollout: As-is and roadmap for expansion
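The "levels of quality" and "data quality trends" categories above come down to simple arithmetic over rule results. The following is a minimal sketch. It assumes each period's checks have already produced a count of records checked and a count of records failing. The `PeriodResult` class, the 2% threshold, the 80% early-warning ratio, and the monthly figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PeriodResult:
    """Rule-check results for one measurement period (hypothetical structure)."""
    period: str
    records_checked: int
    records_failed: int

    @property
    def error_pct(self) -> float:
        """Level of quality: percentage of records that violate a requirement."""
        if self.records_checked == 0:
            return 0.0
        return 100 * self.records_failed / self.records_checked


def classify(error_pct: float, threshold_pct: float, warn_ratio: float = 0.8) -> str:
    """Compare a measurement with its threshold; 'approaching' is an early warning."""
    if error_pct > threshold_pct:
        return "breached"
    if error_pct >= warn_ratio * threshold_pct:
        return "approaching"
    return "ok"


# Hypothetical monthly results for one critical data element.
history = [
    PeriodResult("2023-01", 10_000, 50),
    PeriodResult("2023-02", 10_000, 120),
    PeriodResult("2023-03", 10_000, 170),
    PeriodResult("2023-04", 10_000, 230),
]
THRESHOLD_PCT = 2.0

# Trend: report each period's level of quality against the threshold.
for r in history:
    print(r.period, f"{r.error_pct:.2f}%", classify(r.error_pct, THRESHOLD_PCT))
```

In this example the error rate rises from 0.5% to 2.3% over four months, so the periods are classified `ok`, `ok`, `approaching`, `breached`. Flagging the `approaching` state gives data owners a warning before the threshold itself is crossed.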

7. Works Cited / Recommended

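7.1. 3. Which of the following is NOT considered a possible data content defect type? A: Domain chaos B: Combining accurate data with inaccurate data C: Data defined with a lot of embedded meaning D: Duplicate occurrences E: all. Correct answer: C. Your answer: D. Explanation (13.1.3): In 2013, DAMA UK published a white paper describing six core dimensions of data quality: 1) Completeness: the proportion of data stored against the potential for 100%. 2) Uniqueness: no entity instance (thing) is recorded more than once, based on how that thing is identified. 3) Timeliness: the degree to which data represent reality from the required point in time. 4) Validity: data is valid if it conforms to the syntax (format, type, range) of its definition. 5) Accuracy: the degree to which data correctly describes the ‘real world’ object or event being described. 6) Consistency: the absence of difference when comparing multiple representations of a thing against its definition.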

7.2. 16. Which of the following best defines the term disparate data? A: Data that is stored in a data warehouse B: Data that is mapped between two or more applications C: Data that is scattered among multiple databases D: Data that differs in kind, quality or character E: none. Correct answer: D. Your answer: D. Explanation: A, B and C only describe differences in where the data is located.

7.3. 17. Which of the following is NOT usually a feature of data quality improvement tools? A: Standardization B: Parsing C: Data modelling D: Data profiling E: Transformation. Correct answer: C. Your answer: E. Explanation: see 13.3, Data Quality Tools.

7.4. 25. The Data Quality Management cycle has four stages. Three are Plan, Monitor, and Act. What is the fourth stage? A: Improve B: Deploy C: Prepare D: Reiterate E: Manage. Correct answer: B. Your answer: A.

7.5. 26. A data quality report assesses the coding of deposit transactions. The following variations in the coding are apparent: DEP, Dep, dep, dEp. Which DMBOK knowledge area has been ignored? A: Data Governance B: Metadata Management C: Data Storage and Operations D: Data Quality E: Reference and Master Data. Correct answer: D. Your answer: B.

7.6. 27. Which DMBOK knowledge area is most likely responsible for a high percentage of returned mail? A: Data Integration and Interoperability B: Reference and Master Data C: Data Quality D: Data Warehousing and Business Intelligence E: Metadata Management. Correct answer: C. Your answer: A.

7.7. 29. On the first morning that an attribute is approaching an established data quality threshold, which of the following should take place? A: Establish a set of activities to address the emerging data quality issue B: Consider reviewing the threshold value C: Press the emergency button situated under your desk D: Check for erroneous data in the affected reports E: Notify the data owner and advise them to establish a team of experts to investigate. Correct answer: E. Your answer: A. Explanation: none available.

7.8. 30. Which of the following is NOT a preventative action for creating high quality data? A: Train data producers B: Establish data entry controls C: Institute formal data change control D: Implement data governance and stewardship E: Automated correction algorithms capable of detecting and correcting errors. Correct answer: E. Your answer: E. Explanation: E corrects errors after they occur; it does not prevent them.

7.9. 33. A report displaying birth date contains possible but incorrect values. What is a possible explanation? A: Birth date is populated from a single source system, where the date field is an offset value of 1601 B: Birth date is populated from two source systems, one of which stores marriage date in the birth date field C: Birth date is populated from two source systems, both of which record the birth date in the birth date field D: Birth date is populated from a single source system which does not contain birth date E: Birth date is populated from a single source system, which contains missing values. Correct answer: C. Your answer: E.
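Three of the DAMA UK dimensions listed in question 3 (completeness, uniqueness, validity) can be measured directly. The DEP/Dep/dep/dEp variation in question 26 is a standardization problem. The following is a minimal sketch that assumes transactions are held as dictionaries. The field names, the allowed code set `{"DEP", "WDL"}`, the `YYYY-MM-DD` date rule, and the sample rows are hypothetical.

```python
import re

# Hypothetical reference values and format rule for the example.
VALID_CODES = {"DEP", "WDL"}
DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")  # YYYY-MM-DD

# Hypothetical deposit transactions showing the variants from question 26.
transactions = [
    {"txn_id": "T1", "code": "DEP", "date": "2023-03-01"},
    {"txn_id": "T2", "code": "Dep", "date": "2023-03-01"},
    {"txn_id": "T3", "code": "dep", "date": "01/03/2023"},
    {"txn_id": "T3", "code": "dEp", "date": None},
]


def completeness(rows, field):
    """Share of rows in which the field is populated."""
    return sum(1 for r in rows if r.get(field) not in (None, "")) / len(rows)


def uniqueness(rows, key):
    """Distinct key values divided by row count (1.0 means no duplicates)."""
    return len({r[key] for r in rows}) / len(rows)


def validity(rows, field, rule):
    """Share of rows whose field value satisfies the validity rule."""
    return sum(1 for r in rows if rule(r.get(field))) / len(rows)


def valid_code(value):
    return value in VALID_CODES


def valid_date(value):
    return isinstance(value, str) and DATE_PATTERN.fullmatch(value) is not None


def standardize_code(value):
    """Standardization: trim whitespace and convert to upper case."""
    return value.strip().upper() if isinstance(value, str) else value


cleaned = [{**r, "code": standardize_code(r["code"])} for r in transactions]

print("date completeness:", completeness(transactions, "date"))
print("txn_id uniqueness:", uniqueness(transactions, "txn_id"))
print("code validity before:", validity(transactions, "code", valid_code))
print("code validity after:", validity(cleaned, "code", valid_code))
print("date validity:", validity(transactions, "date", valid_date))
```

On this sample, the results are:

- Date completeness is 0.75.
- `txn_id` uniqueness is 0.75, because T3 appears twice.
- Code validity rises from 0.25 to 1.0 after standardization.
- Date validity is 0.5.

Standardization only repairs the symptom. The preventative actions in question 30, such as data entry controls, aim to stop the variants from being created in the first place.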

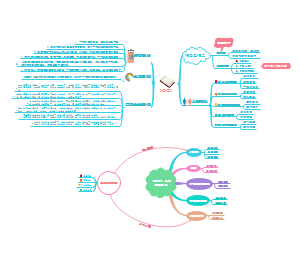

Chapter 14: Big Data and Data Science

1. Introduction

1.1. Intro

1.1.1. Data Science has existed for a long time; it used to be called ‘applied statistics’. But the capability to explore data patterns has quickly evolved in the twenty-first century with the advent of Big Data and the technologies that support it.

1.1.2. Traditional Business Intelligence provides ‘rear-view mirror’ reporting – analysis of structured data to describe past trends. In some cases, BI patterns are used to predict future behavior, but not with high confidence.

1.1.3. As Big Data has been brought into data warehousing and Business Intelligence environments, Data Science techniques are used to provide a forward-looking (‘windshield’) view of the organization. Predictive capabilities, real-time and model-based, using different types of data sources, offer organizations better insight into where they are heading.

1.1.4. Most data warehouses are based on relational models. Big Data is not generally organized in a relational model. Most data warehousing depends on the concept of ETL (Extract, Transform, and Load). Big Data solutions, like data lakes, depend on the concept of ELT – loading and then transforming.

1. Reference data appears in all of these EXCEPT: A: a data content management tag list B: a thesaurus C: ETL code D: a pick list E: ELT code. Correct answer: C. Your answer: E. Explanation (14.1): reference data is produced in the results of ETL. The passage goes on to note that taking advantage of Big Data requires changing how data is managed, and then contrasts ETL with ELT as in 1.1.4.
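The ETL/ELT contrast in 1.1.4 is mainly about when the transformation happens relative to loading. The following is a minimal sketch in which plain Python lists stand in for a warehouse and a data lake. The `transform` step, which parses `id,amount` text into typed fields, is hypothetical.

```python
def transform(raw_line: str) -> dict:
    """Hypothetical transformation: parse 'id,amount' text into typed fields."""
    record_id, amount = raw_line.split(",")
    return {"id": int(record_id), "amount": float(amount)}


def etl(source: list[str], warehouse: list[dict]) -> None:
    """ETL: transform first, so only structured, conformed rows are loaded."""
    for line in source:
        warehouse.append(transform(line))


def elt(source: list[str], lake: list[str]) -> None:
    """ELT: load the raw data as-is; transformation is deferred."""
    lake.extend(source)


def read_from_lake(lake: list[str]) -> list[dict]:
    """Transform when the data is read, for a specific analysis."""
    return [transform(line) for line in lake]


source = ["1,10.5", "2,3.0"]
warehouse: list[dict] = []
lake: list[str] = []

etl(source, warehouse)
elt(source, lake)
print(warehouse)
print(lake)
print(read_from_lake(lake) == warehouse)
```

Both paths end with the same rows here. The difference is that the lake still holds the raw input, so a different transformation can be applied later without going back to the source.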

1.2. Business Drivers

1.2.1. The biggest business driver for developing organizational capabilities around Big Data and Data Science is the desire to find and act on business opportunities that may be discovered through data sets generated through a diversified range of processes

1.2.2. Big Data can stimulate innovation by making more and larger data sets available for exploration. This data can be used to define predictive models that anticipate customer needs and enable personalized presentation of products and services.

1.2.3. Data Science can improve operations. Machine learning algorithms can automate complex time-consuming activities, thus improving organizational efficiency, reducing costs, and mitigating risks.

1.3. Principles

1.3.1. Because of the wide variation in sources and formats, Big Data management will require more discipline than relational data management.

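4. Big data management requires: A: more discipline than relational data management B: big ideas with big budgets C: less discipline than relational data management D: a certification in data science E: no discipline at all. Correct answer: A. Your answer: C. Explanation (14): Big Data refers not only to a large volume of data but also to a wide variety of data (structured and unstructured: documents, files, audio, video, streaming data, etc.) and to the speed at which data is produced. People who explore data, develop predictive, machine learning, and prescriptive models and analytic methods, and deploy the results for analysis by interested parties are called data scientists.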

1.3.2. Organizations should carefully manage Metadata related to Big Data sources in order to have an accurate inventory of data files, their origins, and their value.
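Item 1.3.2 asks for an inventory of Big Data files that records their origins and value. The following is a minimal sketch of such an inventory. It uses a hypothetical `DataFileEntry` record with `origin` and `business_value` fields, where `None` means "not yet recorded", and made-up file paths. In practice, an organization would keep this information in its metadata repository rather than in code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataFileEntry:
    """One inventory entry: which file, where it came from, what it is worth."""
    path: str
    origin: Optional[str]          # Source system or provider; None if unknown
    business_value: Optional[str]  # e.g. "high", "low"; None if never assessed


def incomplete_entries(inventory: list[DataFileEntry]) -> list[str]:
    """Return paths whose origin or value has not been recorded."""
    return [e.path for e in inventory if e.origin is None or e.business_value is None]


# Hypothetical data lake contents.
inventory = [
    DataFileEntry("lake/raw/clickstream/2023-03-01.json", "web analytics feed", "high"),
    DataFileEntry("lake/raw/sensor/batch_17.parquet", None, "medium"),
    DataFileEntry("lake/raw/crm_export.csv", "CRM", None),
]

print(incomplete_entries(inventory))
```

The check returns the second and third files, whose origin or value has not been recorded. These are the gaps the principle warns against.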

1.4. Essential Concepts

1.4.1. Data Science

Developing Data Science solutions involves the iterative inclusion of data sources into models that develop insights

Data Science depends on:

1. Rich data sources:

Data with the potential to show otherwise invisible patterns in organizational or customer behavior

2. Information alignment and analysis:

Techniques to understand data content and combine data sets to hypothesize and test meaningful patterns

3. Information delivery:

Running models and mathematical algorithms against the data and producing visualizations and other output to gain insight into behavior

4. Presentation of findings and data insights:

Analysis and presentation of findings so that insights can be shared

1.4.2. The Data Science Process

The Data Science process follows the scientific method of refining knowledge by making observations, formulating and testing hypotheses, observing results, and formulating general theories that explain results.

Within Data Science, this process takes the form of observing data and creating and evaluating models of behavior:

1. Define Big Data strategy and business needs: Define the requirements that identify desired outcomes with measurable tangible benefits.

2. Choose data sources: Identify gaps in the current data asset base and find data sources to fill those gaps.

3. Acquire and ingest data sources: Obtain data sets and onboard them.

4. Develop Data Science hypotheses and methods: Explore data sources via profiling, visualization, mining, etc.; refine requirements. Define model algorithm inputs, types, or model hypotheses and methods of analysis (e.g., groupings of data found by clustering).

5. Integrate and align data for analysis: Model feasibility depends in part on the quality of the source data. Leverage trusted and credible sources. Apply appropriate data integration and cleansing techniques to increase the quality and usefulness of provisioned data sets.

6. Explore data using models: Apply statistical analysis and machine learning algorithms against the integrated data. Validate, train, and over time, evolve the model. Training entails repeated runs of the model against actual data to verify assumptions and make adjustments, such as identifying outliers. Through this process, requirements will be refined. Initial feasibility metrics guide evolution of the model. New hypotheses may be introduced that require additional data sets, and the results of this exploration will shape future modeling and outputs (even changing the requirements).

7. Deploy and monitor: Models that produce useful information can be deployed to production for ongoing monitoring of value and effectiveness. Data Science projects often turn into data warehousing projects, where more rigorous development processes are put in place (ETL, DQ, Master Data, etc.).
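Step 6 describes training a model repeatedly against actual data and adjusting it, for example by identifying outliers. The following is a minimal sketch of one such iteration. It uses a simple least-squares line as the model, which is an assumption for illustration. It also applies a hypothetical rule that flags any point whose residual lies more than 2 standard deviations from the mean residual. Real projects would typically use established statistical or machine learning libraries instead.

```python
from statistics import mean, pstdev


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def flag_outliers(xs, ys, slope, intercept, z_limit=2.0):
    """Return indexes whose residual is more than z_limit std devs from the mean."""
    residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    mu, sigma = mean(residuals), pstdev(residuals)
    if sigma == 0:
        return []
    return [i for i, r in enumerate(residuals) if abs(r - mu) / sigma > z_limit]


# Hypothetical observations; the point at x=6 is a suspected data error.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [3.1, 4.9, 7.2, 8.8, 11.1, 30.0, 15.0, 17.1]

slope, intercept = fit_line(xs, ys)
outliers = flag_outliers(xs, ys, slope, intercept)
print("first fit:", round(slope, 2), round(intercept, 2), "outliers at", outliers)

# Next iteration: exclude the flagged points and refit.
keep = [i for i in range(len(xs)) if i not in outliers]
slope2, intercept2 = fit_line([xs[i] for i in keep], [ys[i] for i in keep])
print("refit:", round(slope2, 2), round(intercept2, 2))
```

On this data, the point at x=6 pulls the first fit upward, giving a slope of about 2.61. That point is the only one flagged. The refit gives a slope of about 2.0 and an intercept of about 1.0. Whether a flagged point is excluded, corrected, or investigated as a data quality issue remains the analyst's judgment; the rule does not decide this.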

1.4.3. Big Data

Early efforts to define the meaning of Big Data characterized it in terms of the Three V’s: Volume, Velocity, Variety (Laney, 2001). As more organizations start to leverage the potential of Big Data, the list of V’s has expanded:

1. Volume: Refers to the amount of data. Big Data often has thousands of entities or elements in billions of records.

2. Velocity: Refers to the speed at which data is captured, generated, or shared. Big Data is often generated and can also be distributed and even analyzed in real-time.