DAMA DMBOK 2.0 Complete Knowledge Point Summary (Chapters 7-9: Data Security, Data Integration and Interoperability, Document and Content Management)


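CDMP (Certified Data Management Professional) is a certification established by DAMA International, the international data management association. It is a comprehensive certification covering education, work experience, and a professional knowledge exam. These notes summarize the knowledge points, exam topics, and past exam questions for the English-language CDMP exam. They are aimed at people pursuing advanced professional certification in data management, data governance, and digital transformation. Because there are many chapters and knowledge points, the notes are released in parts by chapter as they are completed: Chapters 1-3, 4-6, 7-9, 10-12, and 13-17. Keywords: certification, CDMP, data management, DMBOK, digital transformation, DAMA, digitalization, data management expert.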

Edited 2023-03-18 20:21:50, Beijing

Tags: DAMA, CDMP, DMBOK, data management expert


DAMA Knowledge Points: Chapters 7-9

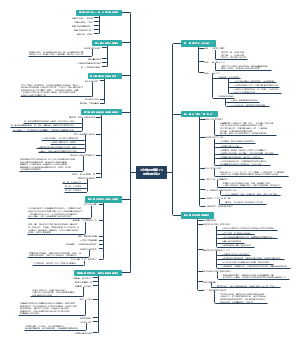

Chapter 7: Data Security

1. Introduction

1.1. Definition:

1.1.1. Definition, planning, development, and execution of security policies and procedures to provide proper authentication, authorization, access, and auditing of data and information assets.

31. Which of these statements best defines data security management? A: The planning, implementation, and testing of security technologies, authentication mechanisms, and other controls to prevent access to information B: The implementation and execution of checkpoints, checklists, controls, and technical mechanisms to govern the access to information in an enterprise C: None of these D: The planning, development, and execution of security policies and procedures to provide proper authentication, authorization, access, and auditing of data and information assets E: The definition of controls, technical standards, frameworks, and audit trail capabilities to identify who has or has had access to information. Correct answer: D. Your answer: D. Explanation (7.1.1): Data security includes the planning, development, and execution of security policies and procedures to provide proper authentication, authorization, access, and auditing of data and information assets.

1.1.2. These requirements come from:

Stakeholders

Organizations must recognize the privacy and confidentiality needs of their stakeholders, including clients, patients, students, citizens, suppliers, or business partners.

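38. Stakeholders whose concerns must be addressed in data security management include A: External standards organizations, regulators, or the media B: Media analysts, internal risk management, suppliers, or regulators C: The internal audit and risk committees of the organization D: All of these E: Clients, patients, citizens, suppliers, or business partners. Correct answer: E. Your answer: E. Explanation (7.1): Although the specifics of data security (such as which data needs to be protected) differ between industries and countries, the goal of data security practices is the same: to protect information assets in alignment with privacy and confidentiality regulations, contractual agreements, and business requirements. These requirements come from the following sources. (1) Stakeholders: organizations must recognize the privacy and confidentiality needs of their stakeholders, including clients, patients, students, citizens, suppliers, or business partners. Everyone in an organization must be a responsible trustee of data about stakeholders.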

Government regulations:

Government regulations are in place to protect the interests of some stakeholders.

Proprietary business concerns

Each organization has proprietary data to protect. An organization’s data provides insight into its customers and, when leveraged effectively, can provide a competitive advantage.

Legitimate access needs

Business processes require individuals in certain roles be able to access, use, and maintain data.

Contractual obligations

Contractual and non-disclosure agreements also influence data security requirements.

1.1.3. Effective data security policies and procedures ensure that the right people can use and update data in the right way, and that all inappropriate access and update is restricted

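39. Which of these are characteristics of an effective data security policy? A: None of these B: The procedures defined are benchmarked, supported by technology, framework based, and peer reviewed C: The defined procedures are tightly defined with rigid and effective enforcement sanctions, and alignment with technology capabilities D: The defined procedures ensure that the right people can use and update data in the right way, and that all inappropriate access and update is restricted E: The policies are specific, measurable, achievable, realistic, and technology aligned. Correct answer: D. Your answer: D. Explanation (7.1): Effective data security policies and procedures ensure that the right people can use and update data in the right way, and that all inappropriate access and update is restricted. Understanding and complying with the privacy and confidentiality needs of all stakeholders is in the best interest of every organization. Clients, suppliers, and other parties all trust in and depend on the responsible use of data.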

1.2. Business Drivers

1.2.1. Risk reduction and business growth are the primary drivers of data security activities.

Data security risks are associated with regulatory compliance, fiduciary responsibility for the enterprise and stockholders, reputation, and a legal and moral responsibility to protect the private and sensitive information of employees, business partners, and customers.

Business growth includes attaining and sustaining operational business goals. Data security issues, breaches, and unwarranted restrictions on employee access to data can directly impact operational success.

1.2.2. The goals of mitigating risks and growing the business can be complementary and mutually supportive if they are integrated into a coherent strategy of information management and protection

1.2.3. Risk Reduction

As data regulations increase — usually in response to data thefts and breaches — so do compliance requirements.

As with other aspects of data management, it is best to address data security as an enterprise initiative.

Information security begins by classifying an organization’s data in order to identify which data requires protection.

Steps:

Identify and classify sensitive data assets:

Locate sensitive data throughout the enterprise

Determine how each asset needs to be protected

Identify how this information interacts with business processes:

In addition to classifying the data itself, it is necessary to assess external threats (such as those from hackers and criminals) and internal risks (posed by employees and processes).

1.2.4. Business Growth

Product and service quality relate to information security in a quite direct fashion: Robust information security enables transactions and builds customer confidence.

1.2.5. Security as an Asset

One approach to managing sensitive data is via Metadata.

Security classifications and regulatory sensitivity can be captured at the data element and data set level. Technology exists to tag data so that Metadata travel with the information as it flows across the enterprise. Developing a master repository of data characteristics means all parts of the enterprise can know precisely what level of protection sensitive information requires.

Standard security Metadata can optimize data protection and guide business usage and technical support processes, leading to lower costs.

When sensitive data is correctly identified as such, organizations build trust with their customers and partners. Security-related Metadata itself becomes a strategic asset, increasing the quality of transactions, reporting, and business analysis, while reducing the cost of protection and associated risks that lost or stolen information cause.

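1.2.6. 54. Primary drivers of data security activities are A: data quality and intellectual property protection B: risk reduction and business growth C: data protection and flexible database design D: risk control and content management E: glossary management and risk reduction. Correct answer: B. Your answer: B. Explanation (7.1.1, Business Drivers): Risk reduction and business growth are the primary drivers of data security activities. Ensuring that an organization's data is secure reduces risk and adds competitive advantage. Security itself is a valuable asset.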

1.3. Goals and Principles

1.3.1. Goals:

1. Enable appropriate, and prevent inappropriate, access to enterprise data assets.

2. Understand and comply with all relevant regulations and policies for privacy, protection, and confidentiality.

3. Ensure that the privacy and confidentiality needs of all stakeholders are enforced and audited.

36. Apart from security requirements internal to the organization, what other strategic goals should a data security management system address? A: Ensuring the organization doesn't engage in SPAM marketing B: Regulatory requirements for privacy and confidentiality AND privacy and confidentiality needs of all stakeholders C: Compliance with ISO 27001 and HIPAA D: Compliance with ISO 29100 and PCI-DSS E: None of these. Correct answer: B. Your answer: B. Explanation (7.1.2, Goals and Principles): The goals of data security activities include: 1) enabling appropriate access, and preventing inappropriate access, to enterprise data assets; 2) enabling compliance with regulations and policies for privacy, protection, and confidentiality; 3) ensuring that stakeholder requirements for privacy and confidentiality are met.

42. A security mechanism that searches for customer bank account details in outgoing emails is achieving the goal of A: ensuring stakeholder requirements for openness and transparency are met B: ensuring stakeholder requirements for service design and experience are met C: ensuring stakeholder requirements for confidentiality and privacy are met D: ensuring stakeholder requirements for concise definitions and usage are met E: ensuring stakeholder requirements for response time and availability levels are met. Correct answer: C. Your answer: C. Explanation (7.1.2): The three goals of data security activities listed under question 36; this mechanism serves goal 3.

53. The stakeholder requirements for privacy and confidentiality are goals found in: A: data quality B: data security C: data architecture D: document and content management E: metadata management. Correct answer: B. Your answer: B. Explanation (7.1.2): The three goals of data security activities listed under question 36.

1.3.2. Principles

1. Collaboration

Data Security is a collaborative effort involving IT security administrators, data stewards/data governance, internal and external audit teams, and the legal department.

2. Enterprise approach

Data Security standards and policies must be applied consistently across the entire organization.

3. Proactive management

Success in data security management depends on being proactive and dynamic, engaging all stakeholders, managing change, and overcoming organizational or cultural bottlenecks such as traditional separation of responsibilities between information security, information technology, data administration, and business stakeholders.

4. Clear accountability

Roles and responsibilities must be clearly defined, including the ‘chain of custody’ for data across organizations and roles.

5. Metadata-driven

Security classification for data elements is an essential part of data definitions.

6. Reduce risk by reducing exposure

Minimize sensitive/confidential data proliferation, especially to non-production environments.

1.4. Essential Concepts

1.4.1. Vulnerability

A vulnerability is a weakness or defect in a system that allows it to be successfully attacked and compromised, essentially a hole in an organization’s defenses. Some vulnerabilities are called exploits.

20. A weakness or defect of a system that allows it to be successfully attacked and compromised. This is called A: analysis B: replication C: archiving D: auditing E: vulnerability. Correct answer: E. Your answer: E. Explanation (7.1.3): A vulnerability is a weakness or defect in a system that leaves it open to attack, essentially a hole in an organization's defenses. Some vulnerabilities are called exploits.

43. A weakness or defect in a system that allows it to be successfully attacked and compromised A: vulnerability B: feature C: chasm D: risk E: threat. Correct answer: A. Your answer: A. Explanation (7.1.3): 1. Vulnerability: a weakness or defect in a system that leaves it open to attack, essentially a hole in an organization's defenses. Some vulnerabilities are called exploits. For example, network computers with out-of-date security patches …

Examples include network computers with out-of-date security patches, web pages not protected with robust passwords, users not trained to ignore email attachments from unknown senders, or corporate software unprotected against technical commands that will give the attacker control of the system.

Non-production environments are more vulnerable to threats than production environments.

1.4.2. Threat

A threat is a potential offensive action that could be taken against an organization. Threats can be internal or external.

19. A ___ is a potential offensive action that could be taken against an organization A: analysis B: replication C: archiving D: auditing E: threat. Correct answer: E. Your answer: E. Explanation (7.1.3): A threat is a potential offensive action that could be taken against an organization. Threats include virus-infected email attachments sent to the organization, processes that overwhelm network servers so that business transactions cannot be performed (denial-of-service attacks), and exploitation of known vulnerabilities. Threats can be internal or external. They are not always malicious.

They are not always malicious. An uninformed insider can take offensive actions against the organization without even knowing it. Threats may relate to specific vulnerabilities, which then can be prioritized for remediation. Each threat should match to a capability that either prevents the threat or limits the damage it might cause. An occurrence of a threat is also called an attack surface.

Examples of threats include virus-infected email attachments being sent to the organization, processes that overwhelm network servers and result in an inability to perform business transactions (also called denial-of-service attacks), and exploitation of known vulnerabilities.

47. A denial of service attack is typically accomplished by A: emailing virus-laden attachments B: interrupting the mains electricity supply C: corrupting the user name and password D: a stop-work action by the workforce E: flooding the target machine with superfluous requests. Correct answer: E. Your answer: E. Explanation (7.1.3): 2. Threat: a potential offensive action that could be taken against an organization. Threats include virus-infected email attachments sent to the organization, processes that overwhelm network servers so that business transactions cannot be performed (denial-of-service attacks), and exploitation of known vulnerabilities. Threats can be internal or external.

1.4.3. Risk

The term risk refers both to the possibility of loss and to the thing or condition that poses the potential loss.

17. The term refers both to the possibility of loss and to the thing or condition that poses the potential loss. This term is called A: analysis B: replication C: archiving D: auditing E: risk. Correct answer: E. Your answer: E. Explanation (7.1.3): Risk refers both to the possibility of loss and to the thing or condition that poses the potential loss.

Risk can be calculated using the following factors:

1. Probability that the threat will occur and its likely frequency

2. The type and amount of damage each occurrence might cause, including damage to reputation

3. The effect damage will have on revenue or business operations

4. The cost to fix the damage after an occurrence

5. The cost to prevent the threat, including by remediation of vulnerabilities

6. The goal or intent of the probable attacker

Risks can be prioritized by potential severity of damage to the company, or by likelihood of occurrence, with easily exploited vulnerabilities creating a higher likelihood of occurrence. Often a priority list combines both metrics. Prioritization of risk must be a formal process among the stakeholders.
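A priority list that combines both metrics can be sketched as below. This is a minimal illustration, not a method prescribed by the DMBOK: the `Risk` class, the 0-1 likelihood scale, the 1-5 severity scale, and the choice to multiply the two are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: float  # assumed scale: estimated probability of occurrence, 0.0 to 1.0
    severity: int      # assumed scale: estimated damage to the company, 1 (low) to 5 (high)

    @property
    def score(self) -> float:
        # Assumption: combine the two metrics by simple multiplication.
        return self.likelihood * self.severity


def prioritize(risks):
    """Return risks ordered from highest to lowest combined score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical risk register entries.
risks = [
    Risk("unpatched web server", likelihood=0.6, severity=4),
    Risk("lost unencrypted laptop", likelihood=0.2, severity=5),
    Risk("test database holding production data", likelihood=0.5, severity=3),
]
ranked = [r.name for r in prioritize(risks)]
```

In practice the scales and the combining rule would be agreed among the stakeholders as part of the formal prioritization process.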

1.4.4. Risk Classifications

Risk classifications describe the sensitivity of the data and the likelihood that it might be sought after for malicious purposes.

The highest security classification of any datum within a user entitlement determines the security classification of the entire aggregation.

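17. Documents and records should be classified based on the ___ level of confidentiality for information found in the record A: Average B: Highest C: Overall D: General E: General. Correct answer: B. Your answer: B. Explanation (9.2.2): (3) Handling sensitive data. Organizations are obliged to protect privacy by identifying and protecting sensitive data. Data security or data governance usually establishes the confidentiality schemes and identifies which assets are confidential or restricted. The people who produce or assemble content must apply these classifications. Documents, web pages, and other content components must be marked as sensitive or not based on policies and legal requirements. Once marked as sensitive, confidential data is either masked or, where appropriate, deleted (see Chapter 7, Section 7.1.3: the highest security classification of any datum within a user entitlement determines the security classification of the entire aggregation).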

Include

1. Critical Risk Data (CRD)

Personal information aggressively sought for unauthorized use by both internal and external parties due to its high direct financial value. Compromise of CRD would not only harm individuals, but would result in financial harm to the company from significant penalties, costs to retain customers and employees, as well as harm to brand and reputation.

2. High Risk Data (HRD)

HRD is actively sought for unauthorized use due to its potential direct financial value. HRD provides the company with a competitive edge. If compromised, it could expose the company to financial harm through loss of opportunity. Loss of HRD can cause mistrust leading to the loss of business and may result in legal exposure, regulatory fines and penalties, as well as damage to brand and reputation.

3. Moderate Risk Data (MRD)

Company information that has little tangible value to unauthorized parties; however, the unauthorized use of this non-public information would likely have a negative effect on the company.
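The rule that the highest classification of any datum determines the classification of the whole aggregation can be sketched as a simple maximum over ordered levels. The `RiskClass` enum, its numeric ordering, and the sample field names are assumptions introduced for illustration.

```python
from enum import IntEnum


class RiskClass(IntEnum):
    # Assumed ordering: a larger number means a more sensitive classification.
    MRD = 1  # Moderate Risk Data
    HRD = 2  # High Risk Data
    CRD = 3  # Critical Risk Data


def aggregate_classification(element_classes):
    """Return the highest classification among the elements, or None if empty."""
    return max(element_classes, default=None)


# Hypothetical data elements exposed together in one user entitlement.
entitlement_elements = {
    "customer_name": RiskClass.MRD,
    "pricing_model": RiskClass.HRD,
    "account_number": RiskClass.CRD,
}
overall = aggregate_classification(entitlement_elements.values())
```

Here a single CRD element is enough to make the whole aggregation CRD, regardless of how many lower-rated elements accompany it.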

1.4.5. Data Security Organization

Depending on the size of the enterprise, the overall Information Security function may be the primary responsibility of a dedicated Information Security group, usually within the Information Technology (IT) area. Larger enterprises often have a Chief Information Security Officer (CISO) who reports to either the CIO or the CEO. In organizations without dedicated Information Security personnel, responsibility for data security will fall on data managers.

In all cases, data managers need to be involved in data security efforts.

1.4.6. Security Processes

Data security requirements and procedures are categorized into four groups, known as the four A’s:

The Four A's

1. Access

Enable individuals with authorization to access systems in a timely manner.

2. Audit

Review security actions and user activity.

Information security professionals periodically review logs and documents to validate compliance with security regulations, policies, and standards. Results of these audits are published periodically.

48. A staff member has been detected inappropriately accessing client records from usage logs. The security mechanism being used is an: A: entitlement B: audit C: authorization D: access E: authentication. Correct answer: B. Your answer: B. Explanation (7.1.3): 2) Audit: review security actions and user activity to ensure compliance with regulations and conformance with company policy and standards. Information security professionals periodically review logs and documents to validate compliance with security regulations, policies, and standards. Results of these audits are published periodically.

3. Authentication

Validate users’ access. When a user tries to log into a system, the system needs to verify that the person is who he or she claims to be. Passwords are one way of doing this.

16. Validate users' access. When a user tries to log into a system, the system needs to verify that the person is who he or she claims to be. Passwords are one way of doing this. This is called A: analysis B: replication C: archiving D: authentication E: threat. Correct answer: D. Your answer: D. Explanation (7.1.3): 3) Authentication: validate users' access. When a user tries to log into a system, the system needs to verify that the person is who he or she claims to be. Besides passwords, more stringent authentication methods include security tokens, answering questions, or submitting a fingerprint.

4. Authorization

Grant individuals privileges to access specific views of data, appropriate to their role.

4. Authorization is the process of determining A: the identity of a user trying to access a network system or resource B: what information should be stored about users that occur on the firewall or network C: the capability that allows the receiver of an electronic message to prove who the send D: what type of data and functions an individual has access to within the enterprise E: none. Correct answer: D. Your answer: D. Explanation (7.1.3): 4) Authorization: grant individuals privileges to access specific views of data, appropriate to their role. After the authorization decision, the access control system checks the validity of the authorization token each time the user logs in. Technically, this is an entry in a data field in the corporate Active Directory indicating that the person has been authorized to access the data. It further indicates that the user is entitled to this privilege because of their job or corporate status, and that the privilege was granted by a responsible person.

6. Protecting the data in a database is the function of ___ management A: privacy B: redundancy C: authorization D: transaction E: None. Correct answer: C. Your answer: A. Explanation (7.1.3): C, authorization. See the explanation of authorization under question 4 above.

15. Grant individuals privileges to access specific views of data appropriate to their role. This is called A: analysis B: replication C: archiving D: authorization E: threat. Correct answer: D. Your answer: D. Explanation (7.1.3): See the explanation of authorization under question 4 above.

5. Entitlement

An Entitlement is the sum total of all the data elements that are exposed to a user by a single access authorization decision.

6. 51. In data security, which of the following is not one of the four A's? A: Audit B: Authentication C: Authorization D: Agile E: Access. Correct answer: D. Your answer: D. Explanation (7.1.3): (1) The four A's: 1) Access, 2) Audit, 3) Authentication, 4) Authorization.
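How authorization, entitlement, and audit fit together can be sketched with a small role-based example. Everything named here (the roles, the views, the data elements, and the in-memory `audit_log`) is hypothetical, and computing the entitlement per role is a simplification of "a single access authorization decision".

```python
from datetime import datetime, timezone

# Hypothetical grants: which data views each role is authorized to access.
ROLE_VIEWS = {
    "teller": {"account_summary"},
    "auditor": {"account_summary", "transaction_history"},
}

# Hypothetical view definitions: which data elements each view exposes.
VIEW_ELEMENTS = {
    "account_summary": {"account_id", "balance"},
    "transaction_history": {"account_id", "amount", "counterparty"},
}

audit_log = []  # in-memory stand-in for a real audit trail


def is_authorized(role, view):
    """Authorization: is this role granted access to this view?"""
    return view in ROLE_VIEWS.get(role, set())


def entitlement(role):
    """Entitlement: all data elements exposed to the role across its views."""
    elements = set()
    for view in ROLE_VIEWS.get(role, set()):
        elements |= VIEW_ELEMENTS[view]
    return elements


def access(user, role, view):
    """Decide access and record the attempt so it can be audited later."""
    allowed = is_authorized(role, view)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "view": view,
        "allowed": allowed,
    })
    return allowed


granted = access("alice", "teller", "account_summary")
denied = access("alice", "teller", "transaction_history")
```

Recording denied attempts as well as granted ones is what lets a later audit of the log detect inappropriate access patterns.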

Security Monitoring is also essential for proving the success of the other processes.

Monitoring

Systems should include monitoring controls that detect unexpected events, including potential security violations.

Actively interrupting activities

Some security systems will actively interrupt activities that do not follow specific access profiles. The account or activity remains locked until security support personnel evaluate the details.

Passive monitoring by taking snapshots

Passive monitoring tracks changes over time by taking snapshots of the system at regular intervals, and comparing trends against a benchmark or other criteria.
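Comparing a snapshot against a benchmark can be sketched as a dictionary diff. The snapshot fields and values below are invented for illustration; a real monitoring tool would collect them from the system itself.

```python
def compare_snapshots(baseline, current):
    """Return (added, removed, changed) settings between two snapshots."""
    added = {k: current[k] for k in current.keys() - baseline.keys()}
    removed = {k: baseline[k] for k in baseline.keys() - current.keys()}
    changed = {
        k: (baseline[k], current[k])
        for k in baseline.keys() & current.keys()
        if baseline[k] != current[k]
    }
    return added, removed, changed


# Hypothetical snapshots taken at two regular intervals.
baseline = {"admin_accounts": 2, "open_ports": 3, "failed_logins_per_hour": 5}
current = {
    "admin_accounts": 4,
    "open_ports": 3,
    "failed_logins_per_hour": 40,
    "new_service": 1,
}
added, removed, changed = compare_snapshots(baseline, current)
```

The differences would then be reviewed against whatever benchmark or criteria the organization has set; the sketch only surfaces them.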

1.4.7. Data Integrity

Data integrity is the state of being whole – protected from improper alteration, deletion, or addition.

For example, in the U.S., Sarbanes-Oxley regulations are mostly concerned with protecting financial information integrity by identifying rules for how financial information can be created and edited.

1.4.8. Encryption

Encryption is the process of translating plain text into complex codes to hide privileged information, verify complete transmission, or verify the sender’s identity.

44. The process of translating plain text into complex codes to hide privileged information is A: enhancement B: exaggeration C: elimination D: encryption E: encapsulation. Correct answer: D. Your answer: D. Explanation (7.1.3): 8. Encryption is the process of translating plain text into complex codes to hide privileged information, verify complete transmission, or verify the sender's identity. Encrypted data cannot be read without the decryption key or algorithm, which is usually stored separately and cannot be calculated based on other data elements in the same data set. There are three main methods of encryption (hash, symmetric, and asymmetric), with varying levels of complexity and key structure.

Encrypted data cannot be read without the decryption key or algorithm, which is usually stored separately and cannot be calculated based on other data elements in the same data set.

Hash

Hash encryption uses algorithms to convert data into a mathematical representation.

hashing is used as verification of transmission integrity or identity

Message Digest 5 (MD5)

Secure Hashing Algorithm (SHA).
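Using a hash to verify transmission integrity can be sketched with Python's standard `hashlib` module. SHA-256 is chosen here as one member of the SHA family; the message contents are made up. MD5 is listed above for completeness, but it is no longer considered collision-resistant, so it is not used in this sketch.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# The sender computes a digest and transmits it alongside the message.
message = b"transfer 100 to account 42"
sent_digest = sha256_digest(message)

# The receiver recomputes the digest and compares it with the one sent.
received_ok = sha256_digest(message) == sent_digest
received_tampered = sha256_digest(b"transfer 900 to account 42") == sent_digest
```

A matching digest indicates the message arrived unchanged; any alteration, even of one character, produces a different digest.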

Symmetric (private-key)

Private-key encryption uses one key to encrypt the data.

Data Encryption Standard (DES)

Triple DES (3DES)

Advanced Encryption Standard (AES)

International Data Encryption Algorithm (IDEA)

Twofish cipher

Serpent cipher

Asymmetric (public-key)

In public-key encryption, the sender and the receiver have different keys. The sender uses a public key that is freely available, and the receiver uses a private key to reveal the original data.

Rivest-Shamir-Adleman (RSA) Key Exchange

Diffie-Hellman Key Agreement

PGP (Pretty Good Privacy) is a freely available application of public-key encryption.
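The idea behind Diffie-Hellman key agreement, where two parties derive the same secret while exchanging only public values, can be sketched with toy numbers. The prime `p = 23`, generator `g = 5`, and private values below are deliberately tiny and insecure; real deployments use very large, standardized parameters and a vetted cryptographic library.

```python
# Toy public parameters, known to everyone (far too small for real use).
p = 23  # prime modulus
g = 5   # generator


def public_value(private: int) -> int:
    """Compute the value a party publishes: g^private mod p."""
    return pow(g, private, p)


def shared_secret(their_public: int, my_private: int) -> int:
    """Combine the other party's public value with one's own private value."""
    return pow(their_public, my_private, p)


# Each party picks a private value and keeps it secret.
alice_private, bob_private = 6, 15

# Only the public values travel over the network.
alice_public = public_value(alice_private)
bob_public = public_value(bob_private)

# Both sides arrive at the same secret without ever sending it.
alice_secret = shared_secret(bob_public, alice_private)
bob_secret = shared_secret(alice_public, bob_private)
```

The agreed secret would typically be used to derive a key for a symmetric cipher such as AES.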

1.4.9. Obfuscation or Masking

Data can be made less available by obfuscation (making obscure or unclear) or masking, which removes, shuffles, or otherwise changes the appearance of the data, without losing the meaning of the data or the relationships the data has to other data sets, such as foreign key relationships to other objects or systems.

21. Obfuscation or redaction of data is the practice of A: selling data B: making information available to the public C: reducing the size of large databases D: making information anonymous or removing sensitive information E: organizing data into meaningful groups. Correct answer: D. Your answer: D. Explanation (7.1.3): 9. Obfuscation or masking: data can be made less available by obfuscation (making obscure or unclear) or masking (removing, shuffling, or otherwise changing the appearance of the data), without losing the meaning of the data or the relationships the data has to other data sets.

52. Obfuscation of data is to A: put it in different databases B: make the result clear C: collect data from obscure sources D: use synonyms for the same term E: make it obscure or unclear. Correct answer: E. Your answer: E. Explanation (7.1.3): See the explanation of obfuscation or masking under question 21 above.

Obfuscation is useful when displaying sensitive information on screens for reference, or creating test data sets from production data that comply with expected application logic.

Data masking is a type of data-centric security. There are two types:

1. Persistent Data Masking

Persistent data masking permanently and irreversibly alters the data. This type of masking is not typically used in production environments, but rather between a production environment and development or test environments.

In-flight persistent masking

In-flight persistent masking occurs when the data is masked or obfuscated while it is moving between the source (typically production) and destination (typically non-production) environment. In-flight masking is very secure when properly executed because it does not leave an intermediate file or database with unmasked data. Another benefit is that it is re-runnable if issues are encountered part way through the masking.

In-place persistent masking

In-place persistent masking is used when the source and destination are the same. The unmasked data is read from the source, masked, and then used to overwrite the unmasked data. In-place masking assumes the sensitive data is in a location where it should not exist and the risk needs to be mitigated, or that there is an extra copy of the data in a secure location to mask before moving it to the non-secure location.

There are risks to this process. If the masking process fails mid-masking, it can be difficult to restore the data to a useable format. This technique has a few niche uses, but in general, in-flight masking will more securely meet project needs.

2. Dynamic Data Masking

Dynamic data masking changes the appearance of the data to the end user or system without changing the underlying data.

This can be extremely useful when users need access to some sensitive production data, but not all of it.
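Dynamic masking can be sketched as a function applied at display time that leaves the stored record untouched. The role names, the field name, and the rule of showing only the last four characters are assumptions made for this example.

```python
def mask_account(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Hide all but the last `visible` characters of the value."""
    if len(value) <= visible:
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]


def view_for(record: dict, role: str) -> dict:
    """Return a copy of the record for display; the stored record is not changed."""
    if role == "fraud_analyst":  # hypothetical role allowed to see full values
        return dict(record)
    masked = dict(record)
    masked["account_number"] = mask_account(record["account_number"])
    return masked


stored = {"name": "A. Customer", "account_number": "1234567890"}
shown = view_for(stored, "call_center")
```

The call-center view sees `******7890`, while the underlying record still holds the full account number for roles that are entitled to it.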

3. Masking Methods

1. Substitution

Replace characters or whole values with those in a lookup or as a standard pattern.

2. Shuffling

Swap data elements of the same type within a record, or swap data elements of one attribute between rows.

3. Temporal variance

Move dates +/– a number of days – small enough to preserve trends, but significant enough to render them non-identifiable.

4. Value variance

Apply a random factor +/– a percent, again small enough to preserve trends, but significant enough to be non-identifiable.

5. Nulling or deleting

Remove data that should not be present in a test system.

6. Randomization

Replace part or all of data elements with either random characters or a series of a single character.

7. Encryption

Convert a recognizably meaningful character stream to an unrecognizable character stream by means of a cipher code.

8. Expression masking

Change all values to the result of an expression. For example, a simple expression would just hard code all values in a large free form database field.

9. Key masking

Designate that the result of the masking algorithm/process must be unique and repeatable because it is being used to mask a database key field (or similar).
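Several of these methods can be sketched in a few lines each. The function names, the sample rows, and the use of a keyed hash (HMAC-SHA-256) for key masking are illustrative assumptions; the keyed hash is repeatable for the same input and secret, and uniqueness rests on hash collisions being negligibly unlikely.

```python
import hashlib
import hmac
import random
from datetime import date, timedelta


def substitute(value, lookup, default="REDACTED"):
    """Substitution: replace a value with its entry in a lookup table."""
    return lookup.get(value, default)


def shuffle_column(rows, column, rng):
    """Shuffling: swap the values of one attribute between rows."""
    values = [row[column] for row in rows]
    rng.shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]


def shift_date(d, max_days, rng):
    """Temporal variance: move a date by up to +/- max_days."""
    return d + timedelta(days=rng.randint(-max_days, max_days))


def vary_value(x, max_percent, rng):
    """Value variance: apply a random factor of up to +/- max_percent."""
    return x * (1 + rng.uniform(-max_percent, max_percent) / 100)


def mask_key(key, secret):
    """Key masking: the same key and secret always give the same masked key."""
    return hmac.new(secret, key.encode(), hashlib.sha256).hexdigest()


rng = random.Random(0)  # seeded only so the example is reproducible
rows = [
    {"id": "C1", "city": "Oslo"},
    {"id": "C2", "city": "Lima"},
    {"id": "C3", "city": "Pune"},
]
shuffled = shuffle_column(rows, "city", rng)
shifted = shift_date(date(2023, 3, 18), 5, rng)
varied = vary_value(100.0, 5, rng)
key_a = mask_key("C1", b"hypothetical-secret")
key_b = mask_key("C2", b"hypothetical-secret")
```

Because `mask_key` is repeatable, the same customer key masks to the same value in every table, which preserves foreign key relationships across the masked data set.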

1.4.10. Network Security Terms

1. Backdoor

A backdoor refers to an overlooked or hidden entry into a computer system or application.

Any backdoor is a security risk.

2. Bot or Zombie

A bot (short for robot) or Zombie is a workstation that has been taken over by a malicious hacker using a Trojan, a Virus, a Phish, or a download of an infected file.

A Bot-Net is a network of robot computers (infected machines)

3. Cookie

A cookie is a small data file that a website installs on a computer’s hard drive, to identify returning visitors and profile their preferences.

Cookies are used for Internet commerce.

4. Firewall

A firewall is software and/or hardware that filters network traffic to protect an individual computer or an entire network from unauthorized attempts to access or attack the system.

5. Perimeter

A perimeter is the boundary between an organization’s environments and exterior systems. Typically, a firewall will be in place between all internal and external environments.

50. A term is the boundary between an organization's environments and exterior systems. This term is called A: analysis B: replication C: archiving D: perimeter E: threat. Correct answer: D. Your answer: D. Explanation (7.1.3): (5) A perimeter is the boundary between an organization's environments and exterior systems. Typically, a firewall is deployed between all internal and external environments.

6. DMZ

A DMZ is an area on the edge or perimeter of an organization, with a firewall between it and the organization.

DMZ environments are used to pass or temporarily store data moving between organizations.

25. A term is an area on the edge or perimeter of an organization, with a firewall between it and the organization. This term is called A: analysis B: replication C: archiving D: DMZ E: threat. Correct answer: D. Your answer: D. Explanation (7.1.3): (6) DMZ is short for de-militarized zone, an area on the edge or perimeter of an organization, with a firewall between it and the organization. A DMZ environment always has a firewall between it and the internet. DMZ environments are used to pass or temporarily store data moving between organizations.

46. A DMZ is bordered by 2 firewalls. These are between the DMZ and the: A: internet and extranet B: internet; for added security C: internet and intranet D: Korean peninsula E: internet and internal systems. Correct answer: E. Your answer: C. Explanation (7.1.3): (6) DMZ is short for de-militarized zone, an area on the edge or perimeter of an organization, with a firewall between it and the organization. A DMZ environment always has a firewall between it and the internet (Figure 7-3). DMZ environments are used to pass or temporarily store data moving between organizations.

7. Super User Account

A Super User Account is an account that has administrator or root access to a system to be used only in an emergency.

Credentials for these accounts are highly secured, released only in an emergency with appropriate documentation and approvals, and expire within a short time.

24. A term is an account that has administrator or root access to a system to be used only in an emergency. This term is called A: analysis B: replication C: Super User Account D: DMZ E: threat. Correct answer: C. Your answer: C. Explanation (7.1.3): (7) A Super User Account is an account that has administrator or root access to a system, to be used only in an emergency. Credentials for these accounts must be stored with a high level of security; they are released only in an emergency with appropriate documentation and approvals, and expire within a short time.

8. Key Logger

Key Loggers are a type of attack software that records all the keystrokes that a person types into their keyboard, then sends them elsewhere on the Internet.

Thus, every password, memo, formula, document, and web address is captured.

30. A term is a type of attack software that records all the keystrokes that a person types into their keyboard, then sends them elsewhere on the Internet. This term is called A: analysis B: Key Loggers C: Super User Account D: DMZ E: threat. Correct answer: B. Your answer: B. Explanation (7.1.3): (8) A Key Logger is a type of attack software that records all the keystrokes typed on a keyboard, then sends them elsewhere on the Internet.

9. Penetration Testing

In Penetration Testing (sometimes called ‘pen test’), an ethical hacker, either from the organization itself or hired from an external security firm, attempts to break into the system from outside, as would a malicious hacker, in order to identify system vulnerabilities.

10. Virtual Private Network (VPN)

VPN connections use the unsecured internet to create a secure path or ‘tunnel’ into an organization’s environment.

It allows communication between users and the internal network by using multiple authentication elements to connect with a firewall on the perimeter of an organization’s environment.

The tunnel is highly encrypted.

1.4.11. Types of Data Security

1. ‘Least Privilege’ is an important security principle. A user, process, or program should be allowed to access only the information allowed by its legitimate purpose.

2. Facility Security

Facility security is the first line of defense against bad actors.

3. Device Security

Mobile devices often contain corporate emails, spreadsheets, addresses, and documents that, if exposed, can be damaging to the organization, its employees, or its customers.

Device security standards include:

1. Access policies regarding connections using mobile devices

2. Storage of data on portable devices such as laptops, DVDs, CDs, or USB drives

3. Data wiping and disposal of devices in compliance with records management policies

4. Installation of anti-malware and encryption software

5. Awareness of security vulnerabilities

4. Credential Security

Each user is assigned credentials to use when obtaining access to a system.

Identity Management Systems

Organizations with an enterprise user directory may establish a synchronization mechanism between heterogeneous resources to ease user password management. The user then enters a password only once, usually when logging into the workstation, after which all authentication and authorization executes through a reference to the enterprise user directory. An identity management system implementing this capability is known as ‘single-sign-on’.

User ID Standards for Email Systems

User IDs should be unique within the email domain.

Password Standards

Passwords are the first line of defense in protecting access to data.

Do not permit blank passwords.

Multiple Factor Identification

Some systems require additional identification procedures. These can include a return call to the user’s mobile device that contains a code, the use of a hardware item that must be used for login, or a biometric factor such as fingerprint, facial recognition, or retinal scan.

5. Electronic Communication Security

Email and direct communication applications are insecure methods of communication that can be read or intercepted by outside sources.

Social media also applies here. Blogs, portals, wikis, forums, and other Internet or Intranet social media should be considered insecure and should not contain confidential or restricted information.

1.4.12. Types of Data Security Restrictions

Two concepts drive security restrictions:

Confidentiality level

Confidential means secret or private.

Confidential information is shared only on a ‘need-to-know’ basis.

Regulation

Regulatory categories are assigned based on external rules.

Regulated information is shared on an ‘allowed-to-know’ basis.

The main difference between confidential and regulatory restrictions is where the restriction originates: confidentiality restrictions originate internally, while regulatory restrictions are externally defined.

Another difference is that any data set, such as a document or a database view, can only have one confidentiality level. This level is established based on the most sensitive (and highest classified) item in the data set. Regulatory categorizations, however, are additive. A single data set may have data restricted based on multiple regulatory categories. To assure regulatory compliance, enforce all actions required for each category, along with the confidentiality requirements.

Confidential Data

1. For general audiences

Information available to anyone, including the public.

2. Internal use only

Information limited to employees or members, but with minimal risk if shared. For internal use only; may be shown or discussed, but not copied, outside the organization.

3. Confidential

Information that cannot be shared outside the organization without a properly executed non-disclosure agreement or similar in place. Client confidential information may not be shared with other clients.

4. Restricted confidential

Information limited to individuals performing certain roles with the ‘need to know.’ Restricted confidential may require individuals to qualify through clearance.

5. Registered confidential

Information so confidential that anyone accessing the information must sign a legal agreement to access the data and assume responsibility for its secrecy.
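The two rules for combining restrictions (a data set takes the single highest confidentiality level of its items, while regulatory categories accumulate) can be sketched in a few lines. This is only an illustration: the `Confidentiality` enum, the `classify_data_set` function, the sample category labels, and the choice to treat an empty data set as ‘for general audiences’ are assumptions, not part of the DMBOK text.

```python
from enum import IntEnum


class Confidentiality(IntEnum):
    """The five levels listed above, ordered from least to most sensitive."""
    GENERAL_AUDIENCES = 1
    INTERNAL_USE_ONLY = 2
    CONFIDENTIAL = 3
    RESTRICTED_CONFIDENTIAL = 4
    REGISTERED_CONFIDENTIAL = 5


def classify_data_set(items):
    """Combine per-item classifications into one data-set classification.

    items: iterable of (Confidentiality, set of regulatory category names).
    Returns (highest confidentiality level, union of regulatory categories).
    """
    items = list(items)
    # Assumption: an empty data set defaults to the lowest level.
    level = max((lvl for lvl, _ in items),
                default=Confidentiality.GENERAL_AUDIENCES)
    categories = set()
    for _, cats in items:
        categories |= set(cats)  # regulatory categories are additive
    return level, categories


# Hypothetical report made of three fields with different classifications.
report = [
    (Confidentiality.INTERNAL_USE_ONLY, set()),
    (Confidentiality.CONFIDENTIAL, {"PII"}),
    (Confidentiality.RESTRICTED_CONFIDENTIAL, {"PII", "PHI"}),
]
level, categories = classify_data_set(report)
print(level.name, sorted(categories))
```

Using an ordered enum keeps the ‘most sensitive item wins’ rule a plain `max`, and a set union makes it explicit that every category’s protective actions still apply.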

Regulated Data

1. Certain types of information are regulated by external laws, industry standards, or contracts that influence how data can be used, as well as who can access it and for what purposes. As there are many overlapping regulations, it is easier to collect them by subject area into a few regulatory categories or families to better inform data managers of regulatory requirements.

2. Each enterprise, of course, must develop regulatory categories that meet their own compliance needs. Further, it is important that this process and the categories be as simple as possible to allow for an actionable protection capability. When category protective actions are similar, they should be combined into a regulation ‘family’. Each regulatory category should include auditable protective actions. This is not an organizational tool but an enforcement method.

3. Sample Regulatory Families

1. Personal Identification Information (PII)

Also known as Personally Private Information (PPI)

EU Privacy Directives, Canadian Privacy law (PIPEDA), PIP Act 2003 in Japan, PCI standards, US FTC requirements, GLB, FTC standards, and most Security Breach of Information Acts

2. Financially Sensitive Data

All financial information, including what may be termed ‘shareholder’ or ‘insider’ data, including all current financial information that has not yet been reported publicly

SOX (Sarbanes-Oxley Act), or GLBA (Gramm-Leach-Bliley/Financial Services Modernization Act).

3. Medically Sensitive Data/Personal Health Information (PHI)

In the US, this is covered by HIPAA (Health Insurance Portability and Accountability Act).

4. Educational Records

In the US, this is covered by FERPA (Family Educational Rights and Privacy Act).

4. Industry or Contract-based Regulation

Payment Card Industry Data Security Standard (PCI-DSS)

Competitive advantage or trade secrets

Contractual restrictions

1.4.13. System Security Risks

1. Abuse of Excessive Privilege

In granting access to data, the principle of least privilege should be applied. A user, process, or program should be allowed to access only the information allowed by its legitimate purpose. The risk is that users with privileges that exceed the requirements of their job function may abuse these privileges, whether maliciously or accidentally.

The DBA may not have the time or Metadata to define and update granular access privilege control mechanisms for each user entitlement

This lack of oversight to user entitlements is one reason why many data regulations specify data management security.

Query-level access control is useful for detecting excessive privilege abuse by malicious employees.

Automated tools are usually necessary to make real query-level access control functional.

2. Abuse of Legitimate Privilege

Users may abuse legitimate database privileges for unauthorized purposes.

There are two risks to consider: intentional and unintentional abuse.

Intentional abuse occurs when an employee deliberately misuses organizational data.

Unintentional abuse is a more common risk: The diligent employee who retrieves and stores large amounts of patient information to a work machine for what he or she considers legitimate work purposes.

The partial solution to the abuse of legitimate privilege is database access control that not only applies to specific queries, but also enforces policies for end-point machines using time of day, location monitoring, and amount of information downloaded, and reduces the ability of any user to have unlimited access to all records containing sensitive information unless it is specifically demanded by their job and approved by their supervisor.
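A minimal sketch of the end-point policy checks described above (time of day, location, and amount of information downloaded, with supervisor approval as the override). The `AccessRequest` fields, the working-hours window, the location names, and the row limit are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class AccessRequest:
    user: str
    local_time: time
    location: str
    rows_requested: int
    supervisor_approved: bool = False


# Hypothetical policy parameters; a real policy would come from governance.
WORK_START, WORK_END = time(7, 0), time(19, 0)
ALLOWED_LOCATIONS = {"office", "vpn"}
MAX_ROWS = 500


def policy_violations(req):
    """Return a list of policy violations for one request (empty = allowed)."""
    violations = []
    if not (WORK_START <= req.local_time <= WORK_END):
        violations.append("outside working hours")
    if req.location not in ALLOWED_LOCATIONS:
        violations.append("unapproved location")
    if req.rows_requested > MAX_ROWS and not req.supervisor_approved:
        violations.append("bulk download without supervisor approval")
    return violations


print(policy_violations(AccessRequest("kim", time(23, 30), "home", 10_000)))
print(policy_violations(AccessRequest("kim", time(10, 0), "office", 50)))
```

Returning the list of reasons rather than a bare yes/no gives the monitoring side something to log and review.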

3. Unauthorized Privilege Elevation

Attackers may take advantage of database platform software vulnerabilities to convert access privileges from those of an ordinary user to those of an administrator. Vulnerabilities may occur in stored procedures, built-in functions, protocol implementations, and even SQL statements

Prevent privilege elevation exploits with a combination of traditional intrusion prevention systems (IPS) and query-level access control intrusion prevention.

4. Service Account or Shared Account Abuse

Use of service accounts (batch IDs) and shared accounts (generic IDs) increases the risk of data security breaches and complicates the ability to trace the breach to its source. Some organizations further increase their risk when they configure monitoring systems to ignore any alerts related to these accounts. Information security managers should consider adopting tools to manage service accounts securely

Service Accounts

Service accounts are convenient because they can tailor enhanced access for the processes that use them.

12. An application uses a single service account for all database access. One of the risks of this approach is A: the ability to trace who made changes to the data B: the data becomes out of order. C: the application freezes more often D: the database runs out of threads E: it constrains the application from running parallel processes Correct answer: A Your answer: E Explanation: With a single shared account, data changes cannot be traced to the individual who made them.

Restrict the use of service accounts to specific tasks or commands on specific systems, and require documentation and approval for distributing the credentials.

Shared Accounts

Shared accounts are created when an application cannot handle the number of user accounts needed or when adding specific users requires a large effort or incurs additional licensing costs

They should never be used by default.

5. Platform Intrusion Attacks

Protecting database assets from platform intrusion requires a combination of regular software updates (patches) and the implementation of a dedicated Intrusion Prevention System (IPS).

An IPS is usually, but not always, implemented alongside an Intrusion Detection System (IDS).

The most primitive form of intrusion protection is a firewall, but with mobile users, web access, and mobile computing equipment a part of most enterprise environments, a simple firewall, while still necessary, is no longer sufficient.

6. SQL Injection Vulnerability

In a SQL injection attack, a perpetrator inserts (or ‘injects’) unauthorized database statements into a vulnerable SQL data channel, such as stored procedures and Web application input spaces. These injected SQL statements are passed to the database, where they are often executed as legitimate commands. Using SQL injection, attackers may gain unrestricted access to an entire database

Mitigate this risk by sanitizing all inputs before passing them back to the server.
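The following sketch shows an injected statement succeeding against string-built SQL and failing against a parameterized query. Note that it uses parameter binding, a closely related mitigation, rather than hand-written input sanitization; the table, column names, and sample rows are made up for the demonstration, and Python's built-in `sqlite3` module stands in for a real database server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def find_user_unsafe(name):
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))  # every row leaks
print(find_user_safe(payload))         # no user has this literal name
```

With the unsafe version the payload turns the `WHERE` clause into a condition that is always true; with the bound parameter the same text is just an unusual name that matches nothing.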

7. Default Passwords

It is a long-standing practice in the software industry to create default accounts during the installation of software packages. Some are used in the installation itself. Others provide users with a means to test the software out of the box.

Eliminating the default passwords is an important security step after every implementation.

8. Backup Data Abuse

Backups are made to reduce the risks associated with data loss, but backups also represent a security risk.

Encrypt all database backups. Encryption keeps the contents of a backup unreadable if it is lost or stolen, whether on tangible media or in electronic transit. Securely manage backup decryption keys. Keys must be available off-site to be useful for disaster recovery.

1.4.14. Hacking / Hacker

The term hacking came from an era when finding clever ways to perform some computer task was the goal.

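29. A term came from an era when finding clever ways to perform some computer task was the goal. This term is called A: analysis B: Hacking C: Super User Account D: DMZ E: threat Correct answer: B Your answer: B Explanation: 7.1.3 (14) Hacking / Hacker. The term hacking came from an era when finding clever ways to perform some computer task was the goal. A hacker is a person who finds unknown operations and pathways within complex computer systems. Hackers can be good or bad.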

An ethical or ‘White Hat’ hacker works to improve a system.

A malicious hacker is someone who intentionally breaches or ‘hacks’ into a computer system to steal confidential information or to cause damage.

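1.4.15. Social Threats to Security / Phishing

Social engineering refers to how malicious hackers try to trick people into giving them either information or access.

49. A term refers to how malicious hackers try to trick people into giving them either information or access. This term is called A: analysis B: Hacking C: social engineering D: DMZ E: threat Correct answer: C Your answer: C Explanation: 7.1.3: Social engineering refers to how malicious hackers try to trick people into giving them either information or access. Hackers use any information they obtain to convince other employees that they have legitimate requests. Sometimes hackers contact several people in sequence, collecting information at each step that is useful for gaining the trust of the next, higher-level employee.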

Phishing refers to a phone call, instant message, or email meant to lure recipients into giving out valuable or private information without realizing they are doing so.

1.4.16. Malware

Malware refers to any malicious software created to damage, change, or improperly access a computer or network.

28. A term refers to any malicious software created to damage, change, or improperly access a computer or network. This term is called A: Malware B: Hacking C: Social engineering D: DMZ E: threat Correct answer: A Your answer: A Explanation: 7.1.3 (16) Malware refers to any software created to damage, change, or improperly access a computer or network.

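Adware

Adware is not illegal, but is used to develop complete profiles of users’ browsing and buying habits to sell to other marketing firms.

Adware is a form of spyware that enters a computer from an Internet download.

27. A term is a form of spyware that enters a computer from an Internet download. This term is called A: Adware B: Hacking C: social engineering D: DMZ E: threat Correct answer: A Your answer: A Explanation: 7.1.3 (1) Adware is a form of spyware that enters a computer from an Internet download. Adware monitors a computer’s use, such as which websites are visited. Adware may also insert objects and toolbars in the user’s browser. Adware is not illegal, but it is used to develop complete profiles of the user’s browsing and buying habits to sell to other marketing firms. It can also be easily leveraged by malicious software for identity theft.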

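Spyware

Free software downloads may also install tracking spyware, which is a form of Adware.

Spyware refers to any software program that slips into a computer without consent, in order to track online activity.

26. A term refers to any software program that slips into a computer without consent, in order to track online activity. This term is called A: Adware B: Spyware C: Social engineering D: DMZ E: threat Correct answer: B Your answer: B Explanation: 7.1.3 (2) Spyware refers to any software program that slips into a computer without consent in order to track online activity. These programs tend to piggyback on other software programs; when a user downloads and installs free software from a site on the Internet, spyware can also install, usually without the user’s knowledge.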

Trojan Horse

A Trojan horse refers to a malicious program that enters a computer system disguised or embedded within legitimate software.

Virus

A virus is a program that attaches itself to an executable file or vulnerable application and delivers a payload that ranges from annoying to extremely destructive.

14. A term is a program that attaches itself to an executable file or vulnerable application and delivers a payload that ranges from annoying to extremely destructive. This term is called A: Adware B: virus C: Social engineering D: DMZ E: threat Correct answer: B Your answer: B Explanation: 7.1.3 (4) A virus is a computer program that attaches itself to an executable file or vulnerable application and delivers a payload that ranges from annoying to extremely destructive. A file virus executes when an infected file opens. A virus always needs to accompany another program. Opening downloaded and infected programs can release a virus.

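Worm

A computer worm is a program built to reproduce and spread across a network by itself.

Its primary function is to harm networks by consuming large amounts of bandwidth, potentially shutting the network down.

34. A term is a program built to reproduce and spread across a network by itself. This term is called A: Adware B: computer worm C: social engineering D: DMZ E: threat Correct answer: B Your answer: B Explanation: 7.1.3 (5) A computer worm is a program built to reproduce and spread across a network by itself. A worm-infected computer will send out a continuous stream of infected messages. Its primary function is to harm networks by consuming large amounts of bandwidth, potentially shutting the network down. A worm may also execute several other malicious activities.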

Malware Sources

1. Instant Messaging (IM)

2. Social Networking Sites

3. Spam

Domains known for spam transmission

CC: or BCC: address count above certain limits

Email body has only an image as a hyperlink

Specific text strings or words

33. A term refers to unsolicited commercial email messages sent out in bulk, usually to tens of millions of users in hopes that a few may reply. This term is called A: Adware B: Spam C: Social engineering D: DMZ E: threat Correct answer: B Your answer: B Explanation: 7.1.3 (3) Spam refers to unsolicited commercial email messages sent out in bulk, usually to tens of millions of users in hopes that a few may reply.
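The four spam indicators listed above (known spam domains, CC/BCC counts over a limit, a body that is only an image hyperlink, and specific text strings) read naturally as filter rules. A rough sketch, assuming a hypothetical blocklist, recipient limit, and phrase list; real mail filters are far more elaborate.

```python
import re

# All of these values are illustrative assumptions.
SPAM_DOMAINS = {"bulk-mailer.example"}
MAX_RECIPIENTS = 20
SUSPICIOUS_PHRASES = ("act now", "free money")

# Matches a body consisting solely of <a ...><img ...></a>.
IMAGE_LINK_ONLY = re.compile(
    r"^\s*<a\s[^>]*>\s*<img\s[^>]*>\s*</a>\s*$",
    re.IGNORECASE | re.DOTALL,
)


def spam_reasons(sender, cc, bcc, body):
    """Return the list of spam indicators an email triggers."""
    reasons = []
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in SPAM_DOMAINS:
        reasons.append("known spam domain")
    if max(len(cc), len(bcc)) > MAX_RECIPIENTS:
        reasons.append("too many CC/BCC addresses")
    if IMAGE_LINK_ONLY.match(body):
        reasons.append("body is only an image hyperlink")
    lowered = body.lower()
    if any(phrase in lowered for phrase in SUSPICIOUS_PHRASES):
        reasons.append("suspicious phrase")
    return reasons


print(spam_reasons("promo@bulk-mailer.example", [], [], "Act now for free money"))
```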

2. Activities

2.1. Identify Data Security Requirements

2.1.1. Business Requirements

Implementing data security within an enterprise begins with a thorough understanding of business requirements. The business needs of an enterprise, its mission, strategy and size, and the industry to which it belongs define the degree of rigidity required for data security.

Analyze business rules and processes to identify security touch points. Every event in the business workflow may have its own security requirements.

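37. Which of the following define the data security touch points in an organization? A: Industry standards B: Internal Audit C: Risk Assessment D: Legislation E: Business rules and process workflow Correct answer: E Your answer: E Explanation: 7.2.1 (1) Business requirements. Implementing data security within an enterprise begins with a thorough understanding of business requirements. The business needs of an enterprise, its mission, strategy and size, and the industry to which it belongs define the degree of rigidity required for data security. For example, the financial and securities sectors in the United States are highly regulated and must maintain stringent data security standards. In contrast, a small retail enterprise may choose not to have the same kind of data security function that a large retailer has, even though both have similar core business activities. Analyze business rules and processes to identify security touch points. Every event in the business workflow may have its own security requirements.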

Data-to-process and data-to-role relationship matrices are useful tools to map these needs and guide definition of data security role-groups, parameters, and permissions

2.1.2. Regulatory Requirements

Today’s fast changing and global environment requires organizations to comply with a growing set of laws and regulations. The ethical and legal issues facing organizations in the Information Age are leading governments to establish new laws and standards. These have all imposed strict security controls on information management. Create a central inventory of all relevant data regulations and the data subject area affected by each regulation.

US

Sarbanes-Oxley Act of 2002

Health Information Technology for Economic and Clinical Health (HITECH) Act, enacted as part of the American Recovery and Reinvestment Act of 2009

Health Insurance Portability and Accountability Act of 1996 (HIPAA) Security Regulations

Gramm-Leach-Bliley I and II

SEC laws and Corporate Information Security Accountability Act

Homeland Security Act and USA Patriot Act

Federal Information Security Management Act (FISMA)

California: SB 1386, California Security Breach Information Act

EU

Data Protection Directive (EU DPD 95/46/) AB 1901, Theft of electronic files or databases

Canada

Canadian Bill 198

Australia

The CLERP Act of Australia

Regulations that impact data security include

Payment Card Industry Data Security Standard (PCI DSS), in the form of a contractual agreement for all companies working with credit cards

EU: The Basel II Accord, which imposes information controls for all financial institutions doing business in its related countries

US: FTC Standards for Safeguarding Customer Info

2.2. Define Data Security Policy

2.2.1. Organizations should create data security policies based on business and regulatory requirements.

2.2.2. A policy is a statement of a selected course of action and high-level description of desired behavior to achieve a set of goals.

2.2.3. Security Policy Contents

Enterprise Security Policy

Global policies for employee access to facilities and other assets, email standards and policies, security access levels based on position or title, and security breach reporting policies

IT Security Policy

Directory structures standards, password policies, and an identity management framework

Data Security Policy

Categories for individual application, database roles, user groups, and information sensitivity

Commonly, the IT Security Policy and Data Security Policy are part of a combined security policy. The preference, however, should be to separate them. Data security policies are more granular in nature, specific to content, and require different controls and procedures. The Data Governance Council should review and approve the Data Security Policy. The Data Management Executive owns and maintains the policy

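32. Definition of data security policies should be A: Based on defined standards and templates B: A collaborative effort between Business and IT C: Reviewed by external Regulators D: Conducted by external consultants E: Determined by external Regulators Correct answer: B Your answer: B Explanation: Source: 7.2.2. Corporate policies often have legal implications. A court may consider a policy instituted to support a legal regulatory requirement to be an intrinsic part of the organization’s effort to comply with that requirement. Failure to comply with a corporate policy might have negative legal ramifications after a data breach. Defining security policy requires collaboration between IT security administrators, Security Architects, Data Governance committees, Data Stewards, internal and external audit teams, and the legal department. Data Stewards must also collaborate with all Privacy Officers (Sarbanes-Oxley supervisors, HIPAA Officers, etc.) and business managers with data expertise to develop regulatory category Metadata and apply proper security classifications consistently. All data regulation compliance actions must be coordinated to reduce cost, work instruction confusion, and needless turf battles.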

Data security policies, procedures, and activities should be periodically reevaluated to strike the best possible balance between the data security requirements of all stakeholders

2.3. Define Data Security Standards

2.3.1. Policies provide guidelines for behavior. They do not outline every possible contingency. Standards supplement policies and provide additional detail on how to meet the intention of the policies.

Define Data Confidentiality Levels

Confidentiality classification is an important Metadata characteristic, guiding how users are granted access privileges.

Define Data Regulatory Categories

A growing number of highly publicized data breaches, in which sensitive personal information has been compromised, have resulted in data-specific laws being introduced.

Define Security Roles

Role groups enable security administrators to define privileges by role and to grant these privileges by enrolling users in the appropriate role group.

There are two ways to define and organize roles: as a grid (starting from the data), or in a hierarchy (starting from the user).

Role Assignment Grid

Role Assignment Hierarchy
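As a small illustration of role groups and a role assignment grid, the sketch below defines privileges once per role and grants them by enrolling users in roles. The role names, data sets, users, and the `is_allowed` helper are hypothetical, and the hierarchy variant is not modelled.

```python
# Grid: role -> data set -> privileges (illustrative values only).
ROLE_PRIVILEGES = {
    "claims_clerk": {"claims": {"read", "update"}},
    "claims_auditor": {"claims": {"read"}, "payments": {"read"}},
}

# Users are enrolled in role groups; they hold no privileges directly.
USER_ROLES = {
    "dana": {"claims_clerk"},
    "lee": {"claims_auditor"},
}


def is_allowed(user, data_set, action):
    """True if any of the user's roles grants `action` on `data_set`."""
    return any(
        action in ROLE_PRIVILEGES.get(role, {}).get(data_set, set())
        for role in USER_ROLES.get(user, set())
    )


print(is_allowed("dana", "claims", "update"))   # granted via claims_clerk
print(is_allowed("lee", "claims", "update"))    # auditors may only read
```

Because unknown users, roles, and data sets all fall through to an empty set, the default is to deny, which matches the least-privilege principle described earlier.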

2.4. Assess Current Security Risks

2.4.1. Security risks include elements that can compromise a network and/or database. The first step in identifying risk is identifying where sensitive data is stored, and what protections are required for that data. Evaluate each system for the following:

The sensitivity of the data stored or in transit

The requirements to protect that data, and

The current security protections in place

2.4.2. Document the findings, as they create a baseline for future evaluations

2.4.3. In larger organizations, white-hat hackers may be hired to assess vulnerabilities. A white hat exercise can be used as proof of an organization’s impenetrability, which can be used in publicity for market reputation.

2.5. Implement Controls and Procedures

2.5.1. Controls and procedures should (at a minimum) cover:

1. How users gain and lose access to systems and/or applications

2. How users are assigned to and removed from roles

3. How privilege levels are monitored

4. How requests for access changes are handled and monitored

5. How data is classified according to confidentiality and applicable regulations

6. How data breaches are handled once detected

2.5.2. For example, a policy to ‘maintain appropriate user privileges’ could have a control objective of ‘Review DBA and User rights and privileges on a monthly basis’.

Validate assigned permissions against a change management system used for tracking all user permission requests

Require a workflow approval process or signed paper form to record and document each change request

Include a procedure for eliminating authorizations for
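The validation step above (checking assigned permissions against the change management record) amounts to a set comparison. A minimal sketch, assuming both systems can be exported as `(user, resource, privilege)` tuples; the function names and sample data are illustrative only.

```python
def unapproved_permissions(assigned, approved):
    """Permissions present in the system with no matching approved request."""
    return sorted(assigned - approved)


def unapplied_approvals(assigned, approved):
    """Approved requests that were never applied (or were later removed)."""
    return sorted(approved - assigned)


# Hypothetical exports from the database and the change management system.
assigned = {
    ("dana", "claims", "update"),
    ("lee", "claims", "read"),
    ("lee", "payments", "update"),
}
approved = {
    ("dana", "claims", "update"),
    ("lee", "claims", "read"),
    ("sam", "claims", "read"),
}

print(unapproved_permissions(assigned, approved))
print(unapplied_approvals(assigned, approved))
```

Run on a schedule, the first list is the review finding that needs follow-up; the second is mostly a housekeeping signal.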

2.5.3. Assign Confidentiality Levels

The classification for documents and reports should be based on the highest level of confidentiality for any information found within the document.

Label each page or screen with the classification in the header or footer. Information products classified as least confidential

2.5.4. Assign Regulatory Categories

Organizations should create or adopt a classification approach to ensure that they can meet the demands of regulatory compliance.

2.5.5. Manage and Maintain Data Security

Control Data Availability / Data-centric Security

An enterprise data model is essential to identifying and locating sensitive data.

Data masking can protect data even if it is inadvertently exposed

Relational database views can be used to enforce data security levels.
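A small sketch of a view that exposes masked data: the view omits one sensitive column and masks all but the last four characters of another. The `patient` table, its columns, and the sample row are invented for the example. SQLite (via Python's `sqlite3`) has no user permissions, so in a real database the view would be paired with grants that give users access to the view but not to the base table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE patient (id INTEGER, name TEXT, ssn TEXT, diagnosis TEXT)"
)
conn.execute(
    "INSERT INTO patient VALUES (1, 'Ann Lee', '123-45-6789', 'asthma')"
)

# The view hides `diagnosis` entirely and masks most of `ssn`.
conn.execute(
    """
    CREATE VIEW patient_masked AS
    SELECT id, name, 'XXX-XX-' || substr(ssn, -4) AS ssn
    FROM patient
    """
)

row = conn.execute("SELECT * FROM patient_masked").fetchone()
columns = [d[0] for d in conn.execute("SELECT * FROM patient_masked").description]
print(columns, row)
```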

Monitor User Authentication and Access Behavior

Reporting on access is a basic requirement for compliance audits.

Monitoring also helps detect unusual, unforeseen, or suspicious transactions that warrant investigation.

It can be implemented within a system or across dependent heterogeneous systems.

Monitoring can be automated or executed manually or executed through a combination of automation and oversight.

Lack of automated monitoring represents serious risks:

1. Regulatory risk

2. Detection and recovery risk

3. Administrative and audit duties risk

4. Risk of reliance on inadequate native audit tools

Implementing a network-based audit appliance has the following benefits:

1. High performance

2. Separation of duties

3. Granular transaction tracking

2.5.6. Manage Security Policy Compliance

Manage Regulatory Compliance

1. Measuring compliance with authorization standards and procedures

2. Ensuring that all data requirements are measurable and therefore auditable (i.e., assertions like “be careful” are not measurable)

3. Ensuring regulated data in storage and in motion is protected using standard tools and processes

4. Using escalation procedures and notification mechanisms when potential non-compliance issues are discovered, and in the event of a regulatory compliance breach

Audit Data Security and Compliance Activities

Internal audits of activities to ensure data security and regulatory compliance policies are followed should be conducted regularly and consistently.

Internal or external auditors may perform audits.

Auditors must be independent of the data and/or process involved in the audit to avoid any conflict of interest and to ensure the integrity of the auditing activity and results.

Auditing is not a fault-finding mission. The goal of auditing is to provide management and the data governance council with objective, unbiased assessments, and rational, practical recommendations.

Audits often include performing tests and checks, such as:

1. Analyzing policy and standards to assure that compliance controls are defined clearly and fulfill regulatory requirements

2. Analyzing implementation procedures and user-authorization practices to ensure compliance with regulatory goals, policies, standards, and desired outcomes

3. Assessing whether authorization standards and procedures are adequate and in alignment with technology requirements

4. Evaluating escalation procedures and notification mechanisms to be executed when potential non-compliance issues are discovered or in the event of a regulatory compliance breach

5. Reviewing contracts, data sharing agreements, and regulatory compliance obligations of outsourced and external vendors, that ensure business partners meet their obligations and that the organization meets its legal obligations for protecting regulated data

6. Assessing the maturity of security practices within the organization and reporting to senior management and other stakeholders on the ‘State of Regulatory Compliance’

7. Recommending Regulatory Compliance policy changes and operational compliance improvements

Auditing data security is not a substitute for management of data security. It is a supporting process that objectively assesses whether management is meeting goals.

3. Tools

3.1. Anti-Virus Software / Security Software

3.1.1. Update security software regularly.

3.2. HTTPS

3.3. Identity Management Technology

3.3.1. Lightweight Directory Access Protocol (LDAP)

3.4. Intrusion Detection and Prevention Software

3.4.1. IDS

3.4.2. IPS

3.5. Firewalls (Prevention)

3.6. Metadata Tracking

3.6.1. Tools that track Metadata can help an organization track the movement of sensitive data.

3.6.2. These tools create a risk that outside agents can detect internal information from metadata associated with documents. Identification of sensitive information using Metadata provides the best way to ensure that data is protected properly. Since the largest number of data loss incidents result from the lack of sensitive data protection due to ignorance of its sensitivity, Metadata documentation completely overshadows any hypothetical risk that might occur if the Metadata were to be somehow exposed from the Metadata repository. This risk is made more negligible since it is trivial for an experienced hacker to locate unprotected sensitive data on the network.

3.6.3. The people most likely unaware of the need to protect sensitive data appear to be employees and managers.

3.7. Data Masking/Encryption

4. Techniques

4.1. CRUD Matrix Usage

4.1.1. Creating and using data-to-process and data-to-role relationship (CRUD: Create, Read, Update, Delete) matrices helps map data access needs and guide definition of data security role groups, parameters, and permissions. Some versions add an E for Execute to make CRUDE.

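1. You are engaged in a consulting position to advise a company on how best to understand how its data is used in the company by its applications. As a best approach, you would recommend A: the development of an enterprise data model B: conducting an inventory of data C: the development of CRUD matrices for all applications D: the development of a conceptual model E: the development of RACI matrices for all applications Correct answer: C Your answer: C Explanation: 7.4.1 CRUD Matrix Usage. Creating and using data-to-process and data-to-role relationship (CRUD: Create, Read, Update, Delete) matrices helps map data access needs and guide definition of data security role groups, parameters, and permissions. Some versions add an E for Execute to make CRUDE. Responsible, Accountable, Consulted, Informed (RACI) matrices also help clarify the roles, separation of duties, and responsibilities of different roles, including their data security obligations.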

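35. A CRUD matrix helps organizations map responsibilities for data changes in the business process workflow. CRUD stands for A: Cost, Revenue, Uplift, Depreciate B: Create, Read, Update, Delete C: Create, Review, Use, Destroy D: Create, React, Utilize, Delegate E: Confidential, Restricted, Unclassified, Destroy Correct answer: B Your answer: B Explanation: 7.4.1 CRUD Matrix Usage. Creating and using data-to-process and data-to-role relationship (CRUD: Create, Read, Update, Delete) matrices helps map data access needs and guide definition of data security role groups, parameters, and permissions. Some versions add an E for Execute to make CRUDE.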
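A data-to-process CRUD matrix can be held as a simple mapping and queried for access questions. The processes, entities, and helper functions below are hypothetical examples, not taken from the DMBOK.

```python
# (process, entity) -> operations, using the letters C, R, U, D.
CRUD = {
    ("order_entry", "customer"): "R",
    ("order_entry", "order"): "CRU",
    ("billing", "order"): "R",
    ("billing", "invoice"): "CRUD",
}


def processes_that(operation, entity):
    """Processes allowed to perform `operation` on `entity`."""
    return sorted(
        process
        for (process, ent), ops in CRUD.items()
        if ent == entity and operation in ops
    )


def entities_without(operation):
    """Entities on which no process performs `operation` (a possible gap)."""
    entities = {ent for _, ent in CRUD}
    covered = {ent for (_, ent), ops in CRUD.items() if operation in ops}
    return sorted(entities - covered)


print(processes_that("U", "order"))
print(entities_without("C"))
```

Queries like the second one are where the matrix earns its keep: an entity that nothing creates, or that too many processes can delete, is a prompt to revisit role definitions.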

4.2. Immediate Security Patch Deployment

4.2.1. A process for installing security patches as quickly as possible on all machines should be in place. A malicious hacker only needs root access to one machine in order to conduct his attack successfully on the network. Users should not be able to delay this update.

4.3. Data Security Attributes in Metadata

4.3.1. A Metadata repository is essential to assure the integrity and consistent use of an Enterprise Data Model across business processes. Metadata should include security and regulatory classifications for data.

4.4. Security Needs in Project Requirements

4.4.1. Every project that involves data must address system and data security. Identify detailed data and application security requirements in the analysis phase. Identification up front guides the design and prevents having to retrofit security processes.

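4.5. Efficient Search of Encrypted Data

4.5.1. Searching encrypted data obviously includes the need to decrypt the data.

4.5.2. Encrypt the search criteria: searching on the matching ciphertext first and decrypting only the hits is faster than decrypting everything to search in plaintext.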
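The idea of transforming the search criteria the same way as the stored data, so that matching happens without decrypting every record, can be sketched with a keyed hash. This is a stand-in: HMAC-SHA-256 from the standard library replaces real deterministic encryption, and the key, record layout, and function names are assumptions made for the example.

```python
import hashlib
import hmac

# Demo key only; a real key would live in a key management system.
INDEX_KEY = b"demo-key-not-for-production"


def search_token(value):
    """Deterministic keyed token for a value (same input, same token)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(INDEX_KEY, normalized, hashlib.sha256).hexdigest()


# Each record stores an opaque ciphertext (placeholder bytes here) plus a
# token computed from the searchable field when the record was written.
records = [
    {"id": 1, "email_token": search_token("ann@example.com"), "ciphertext": b"<blob 1>"},
    {"id": 2, "email_token": search_token("raj@example.com"), "ciphertext": b"<blob 2>"},
]


def candidate_ids(records, email):
    """Ids of records whose token matches; only these need decrypting."""
    token = search_token(email)
    return [
        r["id"] for r in records
        if hmac.compare_digest(r["email_token"], token)
    ]


print(candidate_ids(records, "Ann@Example.com"))
```

Only the matching records are then decrypted, which keeps both the cost and the exposure of plaintext small.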

4.6. Document Sanitization

4.6.1. Document sanitization is the process of cleaning Metadata, such as tracked change history, from documents before sharing. Sanitization mitigates the risk of sharing confidential information that might be embedded in comments.

5. Implementation Guidelines

5.1. Readiness Assessment / Risk Assessment

5.1.1. Training

Promotion of standards through training on security initiatives at all levels of the organization.

5.1.2. Consistent policies

Definition of data security policies and regulatory compliance policies for workgroups and departments that complement and align with enterprise policies.

5.1.3. Measure the benefits of security

Link data security benefits to organizational initiatives.

5.1.4. Set security requirements for vendors (SLA)

Include data security requirements in service level agreements and outsourcing contractual obligations.

5.1.5. Build a sense of urgency

Emphasize legal, contractual, and regulatory requirements to build a sense of urgency and an internal framework for data security management.

5.1.6. Ongoing communications

Supporting a continual employee security-training program informing workers of safe computing practices and current threats.

5.2. Organization and Cultural Change

5.2.1. Data Stewards are generally responsible for data categorization.

5.2.2. Information security teams assist with compliance enforcement and establish operational procedures based on data protection policies, and security and regulatory categorization.

5.3. Visibility into User Data Entitlement

5.3.1. Each user data entitlement, which is the sum total of all the data made available by a single authorization, must be reviewed during system implementation to determine if it contains any regulated information.
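
A review like the one in 5.3.1 could be sketched as below. This assumes classification Metadata is available per column; the column names, the categories, the entitlement names, and the function `regulated_content` are all hypothetical and only illustrate the check.

```python
# Hypothetical classification metadata: column -> regulatory category (None = unregulated).
COLUMN_CLASSIFICATION = {
    "customer.name": "PII",
    "customer.card_number": "PCI",
    "order.total": None,
    "product.sku": None,
}

# Hypothetical authorizations: each one makes a set of columns available.
ENTITLEMENTS = {
    "sales_report_reader": {"order.total", "product.sku"},
    "billing_clerk": {"customer.name", "customer.card_number", "order.total"},
}


def regulated_content(entitlement: str) -> dict:
    """Return the regulated columns an entitlement exposes, grouped by category."""
    found = {}
    for column in sorted(ENTITLEMENTS[entitlement]):
        category = COLUMN_CLASSIFICATION.get(column)
        if category is not None:
            found.setdefault(category, []).append(column)
    return found
```

An empty result means the entitlement exposes no regulated information under this assumed classification; a non-empty result flags it for closer review.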

5.4. Data Security in an Outsourced World

5.4.1. Anything can be outsourced except liability.

5.4.2. Outsourcing IT operations introduces additional data security challenges and responsibilities. Outsourcing increases the number of people who share accountability for data across organizational and geographic boundaries. Previously informal roles and responsibilities must be explicitly defined as contractual obligations. Outsourcing contracts must specify the responsibilities and expectations of each role.

5.4.3. Any form of outsourcing increases risk to the organization, including some loss of control over the technical environment and the people working with the organization’s data. Data security measures and processes must look at the risk from the outsource vendor as both an external and internal risk.

5.4.4. The maturity of IT outsourcing has enabled organizations to take a fresh look at outsourced services. A broad consensus has emerged that architecture and ownership of IT, which includes data security architecture, should be an in-sourced function. In other words, the internal organization owns and manages the enterprise and security architecture. The outsourced partner may take the responsibility for implementing the architecture.

5.4.5. Transferring control, but not accountability, requires tighter risk management and control mechanisms. Some of these mechanisms include:

1. Service level agreements

2. Limited liability provisions in the outsourcing contract

3. Right-to-audit clauses in the contract

4. Clearly defined consequences to breaching contractual obligations

5. Frequent data security reports from the service vendor

6. Independent monitoring of vendor system activity

7. Frequent and thorough data security auditing

8. Constant communication with the service vendor

9. Awareness of legal differences in contract law should the vendor be located in another country and a dispute arise

5.4.6. Outsourcing organizations especially benefit from developing CRUD (Create, Read, Update, and Delete) matrices that map data responsibilities across business processes, applications, roles, and organizations, tracing the transformation, lineage, and chain of custody for data.
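
A CRUD matrix of the kind described in 5.4.6 can be held in a very small data structure. The sketch below assumes a mapping from (role, data entity) to a string of permitted operation letters; the roles, entities, and helper names are hypothetical.

```python
# Hypothetical CRUD matrix: (role, data entity) -> permitted operations.
CRUD_MATRIX = {
    ("order_entry", "Order"): "CRU",
    ("order_entry", "Customer"): "R",
    ("vendor_support", "Order"): "R",
    ("data_admin", "Customer"): "CRUD",
}


def is_allowed(role: str, entity: str, operation: str) -> bool:
    """Check one operation letter (C, R, U, or D) against the matrix."""
    return len(operation) == 1 and operation in CRUD_MATRIX.get((role, entity), "")


def who_can(entity: str, operation: str) -> list:
    """List the roles that hold a given operation on an entity, for review."""
    return sorted(
        role
        for (role, ent), ops in CRUD_MATRIX.items()
        if ent == entity and operation in ops
    )
```

Listing roles per entity and operation, as `who_can` does, is one way to see at a glance which parties (including an outsourced vendor role) touch a given data set.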

5.4.7. Responsible, Accountable, Consulted, and Informed (RACI) matrices also help clarify roles, the separation of duties, and responsibilities of different roles, including their data security obligations.

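11. RACI matrices also help clarify roles, the separation of duties, and responsibilities of different roles, including their data security obligations. RACI stands for A: Responsible, Answer, Consulted, and Inquiry B: Responsible, Accountable, Consulted, and Informed C: Responsible, Accountable, Charge, and Informed D: Responsible, Answer, Consulted, and Informed E: Responsible, Accountable, Conscientious, and Informed Correct answer: B Your answer: B Explanation: 7.5.4 Responsible, Accountable, Consulted, and Informed (RACI) matrices also help clarify roles, the separation of duties, and responsibilities of different roles, including their data security obligations. The RACI matrix can become part of the contractual agreements and data security policies. Defining responsibility matrices such as RACI establishes clear accountability and ownership among the parties involved in the outsourcing engagement, which supports the overall data security policies and their implementation.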

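40. A RACI matrix is a useful tool to support the ______ in an outsourced arrangement A: Segregation of duties (SoD) B: Attributing costs C: Transfer of access controls D: Alignment of business goals E: Service level agreement Correct answer: A Your answer: E Explanation: 7.5.4 Responsible, Accountable, Consulted, and Informed (RACI) matrices also help clarify roles, the separation of duties, and responsibilities of different roles, including their data security obligations.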
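
A RACI matrix can also be checked mechanically. The sketch below assumes a mapping from task to party to one RACI code, and applies the common convention that each task has exactly one Accountable party; that convention, the tasks, the parties, and the function name are assumptions for illustration and are not taken from the notes above.

```python
# Hypothetical RACI matrix: task -> {party: one of "R", "A", "C", "I"}.
RACI = {
    "Apply security patches": {"Vendor": "R", "IT Security": "A", "Data Steward": "I"},
    "Classify data": {"Data Steward": "R", "CISO": "A", "Vendor": "I"},
    "Review access logs": {"Vendor": "R", "IT Security": "C"},
}


def tasks_without_single_accountable(matrix: dict) -> list:
    """Return tasks that do not have exactly one Accountable party (assumed rule)."""
    problems = []
    for task, assignments in matrix.items():
        accountable = [party for party, code in assignments.items() if code == "A"]
        if len(accountable) != 1:
            problems.append(task)
    return problems
```

Here "Review access logs" would be flagged, because the vendor is Responsible but nobody is Accountable, which is the kind of gap an outsourcing contract should close.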

5.5. Data Security in Cloud Environments

5.5.1. Data security policies need to account for the distribution of data across the different service models. This includes the need to leverage external data security standards.

5.5.2. Shared responsibility, defining chain of custody of data and defining ownership and custodianship rights, is especially important in cloud computing.

5.5.3. Fine-tuning or even creating a new data security management policy geared towards cloud computing is necessary for organizations of all sizes.

5.5.4. The same data proliferation security principles apply to sensitive/confidential production data.

6. Data Security Governance

6.1. Data Security and Enterprise Architecture

6.1.1. Enterprise Architecture defines the information assets and components of an enterprise, their interrelationships, and business rules regarding transformation, principles, and guidelines.

6.1.2. Data Security architecture is the component of enterprise architecture that describes how data security is implemented within the enterprise to satisfy the business rules and external regulations.

6.1.3. Architecture influences:

1. Tools used to manage data security

2. Data encryption standards and mechanisms

3. Access guidelines to external vendors and contractors

4. Data transmission protocols over the internet

5. Documentation requirements

6. Remote access standards

7. Security breach incident-reporting procedures

6.1.4. Security architecture is particularly important for the integration of data between:

1. Internal systems and business units

2. An organization and its external business partners

3. An organization and regulatory agencies

6.2. Metrics

6.2.1. Security Implementation Metrics

positive value percentages

1. Percentage of enterprise computers having the most recent security patches installed

2. Percentage of computers having up-to-date anti-malware software installed and running

3. Percentage of new-hires who have had successful background checks

4. Percentage of employees scoring more than 80% on annual security practices quiz

5. Percentage of business units for which a formal risk assessment analysis has been completed

6. Percentage of business processes successfully tested for disaster recovery in the event of fire, earthquake, storm, flood, explosion or other disaster

7. Percentage of audit findings that have been successfully resolved
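
Each of the percentages above is a simple ratio. As a rough sketch for the first one (patch coverage), assuming a hypothetical inventory that records the patch level installed on each machine:

```python
def percentage(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100.0 * part / whole, 1)


# Hypothetical inventory: machine name -> installed patch level.
LATEST_PATCH = "2024-05"
machines = {
    "ws-01": "2024-05",
    "ws-02": "2024-03",
    "srv-01": "2024-05",
    "srv-02": "2024-05",
}

# Count machines at the latest patch level and express it as a percentage.
patched = sum(1 for level in machines.values() if level == LATEST_PATCH)
patch_coverage = percentage(patched, len(machines))
```

The same `percentage` helper would apply to the other items in the list, given the relevant counts.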

Trends can be tracked

1. Performance metrics of all security systems

2. Background investigations and results

3. Contingency planning and business continuity plan status

4. Criminal incidents and investigations

5. Due diligence examinations for compliance, and number of findings that need to be addressed

7. An information security due care and due diligence activity should include which of the following? A: Steps can be verified, measured, or produce tangible artifacts on a continual basis B: An incident response plan is created C: Leaders are accountable and staff is aware and trained D: Due care steps are taken to show that the company has taken responsibility E: All Correct answer: C Your answer: C Explanation: 7.6.2, item 5: due diligence examinations for compliance, and number of findings that need to be addressed.

6. Informational risk management analysis performed and number of those resulting in actionable changes

7. Policy audit implications and results, such as clean desk policy checks, performed by evening-shift security officers during rounds

8. Security operations, physical security, and premises protection statistics

9. Number of documented, accessible security standards (a.k.a. policies)

10. The motivation of relevant parties to comply with security policies can also be measured

11. Business conduct and reputational risk analysis, including employee training

12. Business hygiene and insider risk potential based on specific types of data such as financial, medical, trade secrets, and insider information

13. Confidence and influence indicators among managers and employees as an indication of how data information security efforts and policies are perceived

6.2.2. Security Awareness Metrics

Risk assessment findings

provide qualitative data that needs to be fed back to appropriate business units to make them more aware of their accountability.

Risk events and profiles

identify unmanaged exposures that need correction. Determine the absence or degree of measurable improvement in risk exposure or conformance to policy by conducting follow-up testing of the awareness initiative to see how well the messages got across.

Formal feedback surveys and interviews

identify the level of security awareness. Also, measure the number of employees who have successfully completed security awareness training within targeted populations.

Incident post mortems, lessons learned, and victim interviews

provide a rich source of information on gaps in security awareness. Measures may include how much vulnerability has been mitigated.

Patching effectiveness audits

involve specific machines that work with confidential and regulated information to assess the effectiveness of security patching. (An automated patching system is advised whenever possible.)

6.2.3. Data Protection Metrics

1. Criticality ranking

of specific data types and information systems that, if made inoperable, would have profound impact on the enterprise.

2. Annualized loss expectancy

of mishaps, hacks, thefts, or disasters related to data loss, compromise, or corruption.

3. Risk of specific data losses

related to certain categories of regulated information, and remediation priority ranking.

4. Risk mapping of data to specific business processes.

Risks associated with Point of Sale devices would be included in the risk profile of the financial payment system.

5. Threat assessments

performed based on the likelihood of an attack against certain valuable data resources and the media through which they travel.

6. Vulnerability assessments

of specific parts of the business process where sensitive information could be exposed, either accidentally or intentionally.
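
For item 2 above, annualized loss expectancy (ALE) is conventionally computed as single loss expectancy (SLE) times annualized rate of occurrence (ARO), with SLE taken as asset value times exposure factor. That formula is the standard risk-analysis convention rather than something stated in these notes, and the figures below are made up for illustration.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = asset value x fraction of the asset lost in one incident (0.0 to 1.0)."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure_factor must be between 0 and 1")
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle: float, annual_rate_of_occurrence: float) -> float:
    """ALE = SLE x expected number of incidents per year."""
    if annual_rate_of_occurrence < 0:
        raise ValueError("annual_rate_of_occurrence must not be negative")
    return sle * annual_rate_of_occurrence


# Hypothetical figures: a 200,000 data asset, 25% lost per incident,
# one incident expected every two years (ARO = 0.5).
sle = single_loss_expectancy(200_000, 0.25)
ale = annualized_loss_expectancy(sle, 0.5)
```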

6.2.4. Security Incident Metrics

Intrusion attempts detected and prevented

Return on Investment for security costs using savings from prevented intrusions
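
One plausible reading of this metric is the usual ROI ratio, with savings from prevented intrusions standing in for the gain. The notes do not give a formula, so the calculation below and its sample figures are assumptions.

```python
def security_roi(savings_from_prevented_intrusions: float, security_cost: float) -> float:
    """ROI as (savings - cost) / cost; 0.5 means a 50% return."""
    if security_cost <= 0:
        raise ValueError("security_cost must be positive")
    return (savings_from_prevented_intrusions - security_cost) / security_cost


# Hypothetical figures: 150,000 in avoided losses against 100,000 in security spending.
roi = security_roi(150_000, 100_000)
```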

6.2.5. Confidential Data Proliferation

The number of copies of confidential data should be measured in order to reduce this proliferation.
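
One simple way to count copies is to fingerprint file contents and group locations by fingerprint. The sketch below assumes a scan has already produced a mapping of location to content; the paths, the sample data, and the helper names are hypothetical, and SHA-256 matching only finds byte-identical copies, not edited or re-exported ones.

```python
import hashlib


def content_fingerprint(data: bytes) -> str:
    """Fingerprint content so identical copies share the same value."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical scan result: location -> file content.
scanned = {
    "prod/customers.csv": b"id,ssn\n1,123-45-6789\n",
    "test/customers_copy.csv": b"id,ssn\n1,123-45-6789\n",
    "laptop/export.csv": b"id,ssn\n1,123-45-6789\n",
    "prod/products.csv": b"sku,price\nA1,9.99\n",
}


def proliferated(files: dict, max_copies: int = 1) -> dict:
    """Return fingerprint -> sorted locations for content with more than max_copies copies."""
    by_fingerprint = {}
    for location, content in files.items():
        by_fingerprint.setdefault(content_fingerprint(content), []).append(location)
    return {
        fp: sorted(locations)
        for fp, locations in by_fingerprint.items()
        if len(locations) > max_copies
    }
```

The length of each returned list is the copy count for that content, which can be tracked over time to see whether proliferation is shrinking.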

7. Works Cited / Recommended

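7.1. 2. All of the following are desirable security characteristics of database users EXCEPT A: identifiable B: monitored C: efficient D: authorized E: audit Correct answer: C Your answer: C Explanation: Efficiency is unrelated to security. (4) Validity: data is valid if it conforms to the syntax of its definition (format, type, range).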

7.2. 3. Data security policies should address all of the following EXCEPT A: physical security B: encryption schemes C: levels of control for documents D: access speed E: none Correct answer: D Your answer: D Explanation: D is a performance measure.

7.3. 5. Two data authorization commands available in SQL are ___ and ___ A: Allow; Disallow B: Grant; Revoke C: Lock; Unlock D: Freeze; Release E: Allow; Release Correct answer: B Your answer: B Explanation: Basic SQL knowledge.

7.4. 8. Data security policies include all of the following EXCEPT A: role-based access B: role-based update C: data classification D: data quality requirements E: role-based delete Correct answer: D Your answer: D Explanation: D concerns data quality, not data security.

7.5. 9. ____ and ____ are two techniques that must be applied by policy to information to eliminate business risk once data is no longer needed A: Backup; recovery B: Declassification; redaction C: Sanitization; recovery D: Shredding; expungement E: Sanitization, recovery Correct answer: D Your answer: A Explanation: By process of elimination; recovery and declassification do not eliminate business risk.

7.6. 10. The information risk universe is informed by all of the following EXCEPT A: internal and external audit findings B: data quality, security, privacy, and confidentiality issues C: regulatory non-compliance issues D: non-conformance issues (policies, standards, architecture, and procedures) E: All Correct answer: D Your answer: A Explanation: A, B, and C relate to risk; architecture and procedures are less likely to involve risk.

7.7. 13. Knowing how data has been used and abused in the past is an indicator of how it might be ___ and ___ in the future A: available; pursued B: compromised; disclosed C: reported; used D: ignored; unused E: reported; unused Correct answer: B Your answer: E Explanation: Understanding past abuse indicates how data might be disclosed in the future.