导图社区 1 Quantitative Methods

- 59

- 0

- 1

- 举报

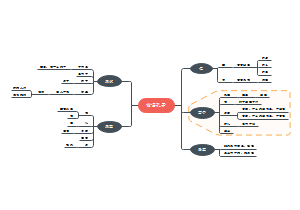

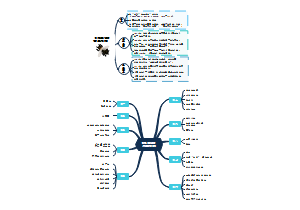

1 Quantitative Methods

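Written to the 2023 curriculum. The main change is that the material is no longer ordered by Reading and is instead organized into 7 Learning Modules; the original Reading 1 was removed, the original Reading 2 was split up and revised, and the curriculum requirements for the original Reading 5 changed.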

Edited 2023-04-11 16:22:36, Shanghai

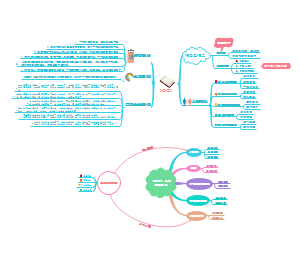

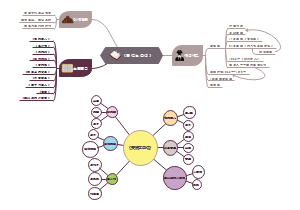

2024cpa会计科目第17章,本章属于非常重要的章节,其内容知识点多、综合性强,可以各种题型进行考核。既可以单独进行考核客观题和主观题,也可以与前期差错更正、资产负债表日后事项等内容相结合在主观题中进行考核。2018年、2020年、2021年、2022年均在主观题中进行考核,近几年平均分值 11分左右。

- 2024cpa会计科目第十二章或有事项

2024cpa会计科目第十二章,本章内容可以各种题型进行考核。客观题主要考核或有资产和或有负债的相关概念、亏损合同的处理原则、预计负债最佳估计数的确定、与产品质量保证相关的预计负债的确认、与重组有关的直接支出的判断等;同时,本章内容(如:未决诉讼)可与资产负债表日后事项、差错更正等内容相结合、产品质量保证与收入相结合在主观题中进行考核。近几年考试平均分值为2分左右。

- 2024cpa会计科目第十一章借款费用

2024cpa会计科目第十一章,本章属于比较重要的章节,考试时多以单选题和多选题等客观题形式进行考核,也可以与应付债券(包括可转换公司债券)、外币业务等相关知识结合在主观题中进行考核。重点掌握借款费用的范围、资本化的条件及借款费用资本化金额的计量,近几年考试分值为3分左右。

1 Quantitative Methods

社区模板帮助中心,点此进入>>

- 2024cpa会计科目第17章收入、费用和利润

2024cpa会计科目第17章,本章属于非常重要的章节,其内容知识点多、综合性强,可以各种题型进行考核。既可以单独进行考核客观题和主观题,也可以与前期差错更正、资产负债表日后事项等内容相结合在主观题中进行考核。2018年、2020年、2021年、2022年均在主观题中进行考核,近几年平均分值 11分左右。

- 2024cpa会计科目第十二章或有事项

2024cpa会计科目第十二章,本章内容可以各种题型进行考核。客观题主要考核或有资产和或有负债的相关概念、亏损合同的处理原则、预计负债最佳估计数的确定、与产品质量保证相关的预计负债的确认、与重组有关的直接支出的判断等;同时,本章内容(如:未决诉讼)可与资产负债表日后事项、差错更正等内容相结合、产品质量保证与收入相结合在主观题中进行考核。近几年考试平均分值为2分左右。

- 2024cpa会计科目第十一章借款费用

2024cpa会计科目第十一章,本章属于比较重要的章节,考试时多以单选题和多选题等客观题形式进行考核,也可以与应付债券(包括可转换公司债券)、外币业务等相关知识结合在主观题中进行考核。重点掌握借款费用的范围、资本化的条件及借款费用资本化金额的计量,近几年考试分值为3分左右。

- 相似推荐

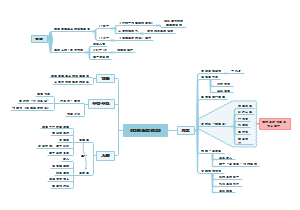

- 大纲

Quantitative Methods

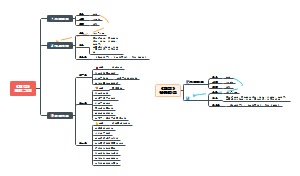

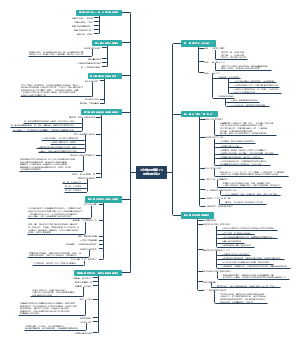

Multiple Regression

Basics of Multiple Regression and Underlying Assumptions

Review of Simple Linear Regression (SLR) (topic newly added to Level I in 2022)

Definition: Linear regression with one independent variable

The simple linear regression model: Yi = b0 + b1Xi + εi

Y: The dependent variable

X: The independent variable

b0, b1: Regression coefficients (intercept and slope)

The error term(residual): the portion of the dependent variable that is not explained by the independent variable(s)

Sum of squares error (SSE) (residual sum of squares)

Ordinary least squares (OLS): a process that estimates the population parameters bi with the values that minimize the sum of squared vertical distances between the observations and the regression line (i.e., the SSE)
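As a minimal sketch of how OLS picks the coefficients in a simple linear regression, the closed-form estimates can be computed directly. The function names and the sample data below are hypothetical and only illustrate the idea.

```python
def ols_simple(x, y):
    """Return (b0, b1) minimizing the sum of squared errors for y = b0 + b1*x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Slope: co-variation of x and y divided by the variation of x
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = s_xy / s_xx
    # Intercept: forces the fitted line through the point of means
    b0 = y_bar - b1 * x_bar
    return b0, b1


def sse(x, y, b0, b1):
    """Sum of squared errors (residual sum of squares) for a candidate line."""
    return sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))


# Hypothetical sample data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b0, b1 = ols_simple(x, y)
```

Any other line, for example one with a slightly different slope, produces a larger SSE on the same data.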

The Basics of Multiple Regression

Definition: Multiple linear regression is used to model the linear relationship between one dependent variable and two or more independent variables

Components

The intercept term (b0): the value of the dependent variable when the independent variables are all equal to zero

Partial slope coefficients (bj ): each slope coefficient is the estimated change in the dependent variable for a one unit change in that independent variable, holding the other independent variables constant

Assumptions Underlying Multiple Linear Regression

Linearity: The relationship between the dependent variable and the independent variables is linear

Homoskedasticity: The variance of the regression residuals is the same for all observations

Independence of errors

The observations, pairs of Ys and Xs are independent of one another

The regression residuals are uncorrelated across observations

Normality: The regression residuals are normally distributed, but this does not mean that the dependent and independent variables must be normally distributed

Independence of independent variables

Visualization methods

Scatterplots

Detect the linearity between dependent and independent variables

Identify extreme values and outliers

Residual plots: detect violations of homoskedasticity and independence of errors

Normal Q-Q plot(Quantile-Quantile Plots, or simply a Q-Q plot): visualize the distribution of a variable by comparing it to a normal distribution

Evaluating Regression Model Fit and Interpreting Model Results

Goodness of Fit

R^2 and Adjusted R^2

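R^2 (Coefficient of determination)

Definition: measures the fraction of the total variation in the dependent variable that is explained by the independent variables

Calculation: R^2 = 1 − SSE/SST, where SST is the total sum of squares of the dependent variable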

Problems with using R^2 in multiple regression

Cannot provide information on whether the coefficients are statistically significant

Cannot provide information on whether there are biases in the estimated coefficients and predictions

R^2 will increase simply by adding independent variables, even if the added independent variable is not statistically significant

Adjusted R^2

Calculation: Adjusted R^2 = 1 − [(n − 1)/(n − k − 1)] × (1 − R^2) (n = number of observations, k = number of independent variables)

Adding a new independent variable may either increase or decrease the adjusted R^2

If the new coefficient's |t-statistic| > 1, adjusted R^2 increases

If the new coefficient's |t-statistic| < 1, adjusted R^2 decreases

AIC and BIC
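The goodness-of-fit measures in this branch can be sketched in a few lines. The outline names AIC and BIC without giving formulas, so the SSE-based forms used here are an assumption (a common textbook convention), and all function names and inputs are hypothetical.

```python
import math


def r_squared(sse, sst):
    """Fraction of total variation explained: 1 - SSE/SST."""
    return 1.0 - sse / sst


def adjusted_r_squared(sse, sst, n, k):
    """R^2 penalized for the number of independent variables k."""
    return 1.0 - ((n - 1) / (n - k - 1)) * (1.0 - r_squared(sse, sst))


def aic(sse, n, k):
    """Assumed form: n*ln(SSE/n) + 2(k+1); lower is better."""
    return n * math.log(sse / n) + 2 * (k + 1)


def bic(sse, n, k):
    """Assumed form: n*ln(SSE/n) + ln(n)(k+1); lower is better."""
    return n * math.log(sse / n) + math.log(n) * (k + 1)


# Hypothetical regression output: 50 observations, 3 independent variables
r2 = r_squared(sse=40.0, sst=100.0)
adj_r2 = adjusted_r_squared(sse=40.0, sst=100.0, n=50, k=3)
```

Under these assumed forms, BIC penalizes extra variables more heavily than AIC whenever ln(n) > 2, that is, for roughly n ≥ 8.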

Testing Joint Hypotheses for Coefficients

Review of Hypotheses Testing(process)

1. Define the hypotheses

Null hypothesis (H0, the statement we suspect is false and want to reject) and alternative hypothesis (Ha, the statement we want to assess)

H0: μ = μ0; Ha: μ ≠ μ0

H0 is what the researcher wants to reject, and it must include "="

2. Choose and calculate the test statistic

3. Find the critical value

Critical value: found from the distribution table of the test statistic at the given significance level and hypothesis type

Influencing factors

• Distribution

• Significance level

• One-sided or two-sided test

4. Make a decision

Critical value method

Reject H0 if |test statistic| > critical value

Fail to reject H0 if |test statistic| < critical value

P-value method

p-value < α: reject H0

p-value > α: do not reject H0

5. Draw a conclusion

If H0 is not rejected, we can only say "cannot reject H0", never "accept H0"

Form: ... is (not) significantly different from ...

The t-test for a single coefficient

Hypothesis

H0: bi = hypothesized value of bi

Ha: bi ≠ hypothesized value of bi

Test statistic: t = (estimated bi − hypothesized value of bi)/(standard error of bi), with n − k − 1 degrees of freedom

Decision rule: reject H0 if

|t| > t(critical)

p-value < significance level (α)

Meaning: Rejection of the null means that the coefficient is significantly different from the hypothesized value of bi
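A minimal sketch of the single-coefficient t-test follows, assuming the usual statistic (estimate minus hypothesized value, divided by the standard error). The critical value is passed in by the caller because it depends on the degrees of freedom and significance level; the numbers shown are hypothetical.

```python
def t_statistic(estimate, hypothesized, std_error):
    """t = (estimated coefficient - hypothesized value) / standard error."""
    return (estimate - hypothesized) / std_error


def reject_null(t_stat, t_critical):
    """Two-sided decision rule: reject H0 when |t| exceeds the critical value."""
    return abs(t_stat) > t_critical


# Hypothetical coefficient estimate of 0.25 with standard error 0.10, H0: b = 0
t = t_statistic(estimate=0.25, hypothesized=0.0, std_error=0.10)
decision = reject_null(t, t_critical=2.0)  # 2.0 is an illustrative critical value
```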

The joint F-test

Unrestricted and restricted model(nested model)

Hypothesis

H0: b4 = b5 = 0

Ha: b4 and/or b5 is not equal to zero

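Test statistic: F = [(SSE restricted − SSE unrestricted)/q] / [SSE unrestricted/(n − k − 1)], where k is the number of independent variables in the unrestricted model

q is the difference in the number of independent variables between the unrestricted and restricted models (in this case, q = 5 − 3 = 2)

Decision rule: reject H0 if F > F(critical)

Meaning: Failure to reject the null means that the excluded variables add no significant explanatory power, so the restricted, more parsimonious model is preferred over the unrestricted model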

The general linear F-test

Hypothesis

H0: b1 = b2 = ⋯ = bk = 0

Ha: At least one coefficient is not equal to zero

Test statistic: F = MSR/MSE = (SSR/k)/[SSE/(n − k − 1)], where SSR is the regression (explained) sum of squares

Decision rule: reject H0 if F > F(critical)

Meaning: Rejection of the null hypothesis means that at least one coefficient is significantly different from zero
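Both F-tests above reduce to a ratio of mean squares. The sketch below assumes the standard forms of the two statistics (the outline does not spell them out); function names and inputs are hypothetical.

```python
def joint_f_statistic(sse_restricted, sse_unrestricted, q, n, k):
    """F-test of q restrictions; k = independent variables in the unrestricted model."""
    numerator = (sse_restricted - sse_unrestricted) / q
    denominator = sse_unrestricted / (n - k - 1)
    return numerator / denominator


def general_f_statistic(ssr, sse, n, k):
    """F-test that all k slope coefficients are zero: MSR / MSE."""
    msr = ssr / k
    mse = sse / (n - k - 1)
    return msr / mse


# Hypothetical case matching the outline's example: 5 variables vs. 3, so q = 2
f_joint = joint_f_statistic(sse_restricted=120.0, sse_unrestricted=100.0, q=2, n=66, k=5)
f_general = general_f_statistic(ssr=60.0, sse=40.0, n=50, k=3)
```

Each statistic is then compared with the critical F value for the matching degrees of freedom (q and n − k − 1 for the joint test, k and n − k − 1 for the general test).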

Forecasting Using Multiple Regression

Model Error

Sampling Error

Model Misspecification

Model Specification Errors

Misspecified Functional Form

Violations of regression assumptions

Heteroskedasticity

Definition

The variance of the residuals is not the same across all observations in the sample

Unconditional heteroskedasticity: the heteroskedasticity is not related to the level of the independent variables, which usually causes no major problems with the regression

Conditional heteroskedasticity: heteroskedasticity that is related to the level of the independent variables; does create significant problems for statistical inference

Consequences

Standard errors will be underestimated

t-statistics will be inflated, leading to more Type I errors

The F-test is unreliable

Testing (conditional)

Residual scatter plots

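The Breusch-Pagan χ^2 test

H0: No heteroskedasticity; Ha: Heteroskedasticity

Chi-square test: BP = n × R^2, where R^2 comes from regressing the squared residuals on the independent variables; the statistic is chi-square distributed with k degrees of freedom

Decision rule: reject H0 when the chi-square statistic is greater than the critical value

Correcting: Robust standard errors (heteroskedasticity-consistent standard errors or White-corrected standard errors)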
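The Breusch-Pagan decision can be sketched as follows, assuming the common form of the statistic (n times the R^2 from regressing squared residuals on the independent variables). The inputs are hypothetical, and the critical value is supplied by the caller from a chi-square table.

```python
def breusch_pagan_statistic(n, r2_aux):
    """BP = n * R^2 of the auxiliary regression of squared residuals on the X's."""
    return n * r2_aux


def reject_homoskedasticity(bp_stat, chi2_critical):
    """One-sided rule: reject H0 (no heteroskedasticity) when BP exceeds the critical value."""
    return bp_stat > chi2_critical


# Hypothetical: 100 observations, auxiliary R^2 of 0.08, two independent variables
bp = breusch_pagan_statistic(n=100, r2_aux=0.08)
# 5.991 is the 5% chi-square critical value with 2 degrees of freedom
heteroskedastic = reject_homoskedasticity(bp, chi2_critical=5.991)
```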

Serial Correlation

Definition: residual terms are correlated with one another

Positive: a positive/negative error for one observation increases the chance of a positive/negative error for another

Negative: a positive/negative error for one observation increases the chance of a negative/positive error for another

Consequences

The coefficient estimates aren't affected

The standard errors of coefficient are usually unreliable

The F-test is also unreliable

Positive serial correlation: standard errors underestimated and t-statistics inflated, suggesting significance when there is none (type I error)

Negative serial correlation: vice versa (type II error)

Testing

Durbin-Watson (DW) test: limited because it applies only to testing for first-order serial correlation

The Breusch-Godfrey (BG) test is more robust because it can detect autocorrelation up to a predesignated order p, where the error in period t is correlated with the error in period t – p

H0: No serial correlation in the model's residuals up to lag p

Ha: The correlation of residuals for at least one of the lags is different from zero, and serial correlation exists up to that order

The test statistic is approximately F-distributed with n – p – k – 1 and p degrees of freedom, where p is the number of lags

Correcting: The corrections are known by various names, including serial correlation consistent standard errors, serial correlation and heteroskedasticity adjusted standard errors, Newey–West standard errors, and robust standard errors

Multicollinearity

Definition

Occurs when two or more independent variables (or combinations of independent variables) are highly (but not perfectly) correlated with each other

In practice, multicollinearity is often a matter of degree rather than of absence or presence

Consequences

Estimates of regression coefficients become extremely imprecise and unreliable

The standard errors of the slope coefficients are artificially inflated

Diminished t-statistics, so t-tests of coefficients have little power (ability to reject the null hypothesis)

Testing

The situation where t-tests indicate that none of the individual coefficients is significantly different than zero, while the F-test is statistically significant and the R^2 is high

Variance inflation factor (VIF)

Introduction

In a multiple regression, a VIF exists for each independent variable. By regressing one independent variable (Xj) on the remaining k – 1 independent variables, we obtain Rj^2 for that regression (the variation in Xj explained by the other k – 1 independent variables), from which the VIF for Xj is calculated

For a given independent variable, Xj, the minimum VIFj is 1

VIF increases as the correlation increases

Calculation: VIFj = 1/(1 − Rj^2)

Decision rule

VIFj > 5 warrants further investigation of the given independent variable.

VIFj >10 indicates serious multicollinearity requiring correction
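The VIF calculation and the two thresholds above can be sketched as a small helper. The function names and the label strings are hypothetical; the R^2 inputs would come from the auxiliary regressions of each Xj on the other independent variables.

```python
def vif(r2_j):
    """Variance inflation factor: 1 / (1 - Rj^2)."""
    if not 0.0 <= r2_j < 1.0:
        raise ValueError("Rj^2 must be in [0, 1)")
    return 1.0 / (1.0 - r2_j)


def classify_vif(value):
    """Apply the rule-of-thumb thresholds of 5 and 10."""
    if value > 10:
        return "serious multicollinearity"
    if value > 5:
        return "investigate further"
    return "no concern flagged"


# Hypothetical auxiliary R^2 values
labels = [classify_vif(vif(r2)) for r2 in (0.0, 0.85, 0.95)]
```

With Rj^2 = 0 the VIF is at its minimum of 1, which matches the statement that uncorrelated regressors show no variance inflation.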

Correcting

Excluding one or more of the regression variables

Using a different proxy for one of the variables

Increasing the sample size

Extensions of Multiple Regression

Influence Analysis

Definition: Influential observation is an observation whose inclusion may significantly alter regression results

Influential Data Points

A high-leverage point: a data point having an extreme value of an independent variable

An outlier: a data point having an extreme value of the dependent variable

Detecting Influential Points

A high-leverage point

Can be identified using a measure called leverage (hii)

Leverage is a value between 0 and 1, and the higher the leverage, the more distant the observation's value is from the variable's mean

A rule: if an observation's leverage exceeds 3(k+1)/n, then it is a potentially influential observation

An outlier: studentized residuals

Cook's distance or Cook's D (Di)

Definition: measures how much the estimated values of the regression change if observation i is deleted from the sample

Di = [ei^2/(k × MSE)] × [hii/(1 − hii)^2], where ei is the residual for observation i, k is the number of independent variables, MSE is the mean square error of the estimated regression model, and hii is the leverage value for observation i

Key points

It depends on both residuals and leverages so it is a composite measure for detecting extreme values of both types of variables

In one measure, it summarizes how much all of the regression's estimated values change when the ith observation is deleted from the sample

A large Di indicates that the ith observation strongly influences the regression's estimated values
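The two detection measures can be sketched together. The Cook's D form below is assumed from the symbols the outline defines (residual, k, MSE, leverage); some references scale by k + 1 instead of k, so treat the exact scaling as an assumption. The inputs are hypothetical.

```python
def cooks_distance(residual, leverage, mse, k):
    """Assumed form: D_i = e_i^2 / (k * MSE) * h_ii / (1 - h_ii)^2."""
    return (residual ** 2 / (k * mse)) * (leverage / (1.0 - leverage) ** 2)


def is_high_leverage(leverage, n, k):
    """Rule of thumb from the outline: leverage above 3(k + 1)/n is potentially influential."""
    return leverage > 3 * (k + 1) / n


# Hypothetical observation: residual of 2, leverage of 0.5, MSE of 1, k = 2, n = 30
d_i = cooks_distance(residual=2.0, leverage=0.5, mse=1.0, k=2)
flagged = is_high_leverage(leverage=0.5, n=30, k=2)
```

Because the measure multiplies a residual term by a leverage term, an observation needs to be unusual in at least one dimension, and is amplified when it is unusual in both.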

Decision rule

Dummy Variables in a Multiple Linear Regression

Dummy variables

Purpose is to distinguish between "groups" or "categories" of data

Use qualitative variables as independent variables

Takes on a value of 1 if a particular condition is true and 0 if that condition is false

If we want to distinguish among n categories, we need n-1 dummy variables

Types of dummy variables

Intercept dummy

If D=0

If D=1

Slope dummy variables

If D=0

If D=1

Slope and Intercept Dummies

If D=0

If D=1
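The D = 0 and D = 1 cases listed above can be written out explicitly. The following is a sketch for a model with one continuous regressor X; the coefficient names d_0 and d_1 are assumed labels for the dummy coefficients and do not come from the original outline.

```latex
% Intercept dummy: Y = b_0 + d_0 D + b_1 X + \varepsilon
\begin{aligned}
D = 0 &: \quad Y = b_0 + b_1 X + \varepsilon \\
D = 1 &: \quad Y = (b_0 + d_0) + b_1 X + \varepsilon
\end{aligned}

% Slope dummy: Y = b_0 + b_1 X + d_1 (D \cdot X) + \varepsilon
\begin{aligned}
D = 0 &: \quad Y = b_0 + b_1 X + \varepsilon \\
D = 1 &: \quad Y = b_0 + (b_1 + d_1) X + \varepsilon
\end{aligned}

% Slope and intercept dummies: Y = b_0 + d_0 D + b_1 X + d_1 (D \cdot X) + \varepsilon
\begin{aligned}
D = 0 &: \quad Y = b_0 + b_1 X + \varepsilon \\
D = 1 &: \quad Y = (b_0 + d_0) + (b_1 + d_1) X + \varepsilon
\end{aligned}
```

In each case the D = 0 group is the baseline, and the dummy coefficients measure the shift in intercept, slope, or both for the D = 1 group.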

Multiple Linear Regression with Qualitative Dependent Variables

Linear probability model: the estimated probability should always be positive and less than 1 (since 0 ≤ p ≤ 1), which a linear model cannot guarantee

Linear and logit models (logistic regression)

Logit models

The estimated probability is always positive

The estimated probability is always less than 1

Logistic regression assumes a logistic distribution for the error term, and the distribution's shape is similar to the normal distribution but with fatter tails

Logistic regression is estimated by maximum likelihood estimation (MLE)

The likelihood ratio (LR) test: assesses the fit of logistic regression models

Calculation: LR = −2 × (Log likelihood of restricted model − Log likelihood of unrestricted model)

Remarks

The LR test is a joint test of the restricted coefficients

Rejecting the null hypothesis is a rejection of the smaller, restricted model in favor of the larger, unrestricted model

The LR test is distributed as chi-squared with q degrees of freedom (i.e., number of restrictions)

The LR test performs best when applied to large samples

Log-likelihood metric is always negative, so higher values (closer to 0) indicate a better fitting model
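The LR calculation above is a one-liner; a minimal sketch with hypothetical log-likelihood values shows how the sign works out. The critical value must be looked up for q degrees of freedom and is passed in by the caller.

```python
def likelihood_ratio(loglik_restricted, loglik_unrestricted):
    """LR = -2 * (log-likelihood of restricted model - log-likelihood of unrestricted model)."""
    return -2.0 * (loglik_restricted - loglik_unrestricted)


# Hypothetical fitted models: the unrestricted model has the higher (less negative) log-likelihood
lr = likelihood_ratio(loglik_restricted=-120.5, loglik_unrestricted=-115.0)
# 5.991 is the 5% chi-square critical value with q = 2 restrictions
reject_restricted_model = lr > 5.991
```

Since the unrestricted log-likelihood is never lower than the restricted one, LR is non-negative, and a large value favors the larger model.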

Pseudo-R^2

Time-Series Analysis

Trend models

Different kinds of data

Time series: a set of observations on a variable's outcomes in different time periods

Cross-sectional data: data on some characteristic at a single point in time

Models

Linear trend models

Work well in fitting time series that change by a constant amount each period

yt = b0 + b1t + εt, where yt = the value of the time series at time t and t = time, the independent variable

Log-linear trend models

ln(yt) = b0 + b1t + εt; work well in fitting time series that have a constant growth rate, whose residuals from a linear trend model will be persistently positive or negative for a period of time

Limitations of Trend Model: The trend model is not appropriate for time series when data exhibit serial correlation

Testing for serial correlation errors: The Durbin-Watson test

H0 : No serial correlation; Ha: Serial correlation

DW ≈ 2 × (1 − r), where r = correlation coefficient between residuals from one period and those from the previous period

Decision rule
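As a sketch of how the Durbin-Watson statistic behaves, the standard formula (sum of squared changes in residuals divided by the sum of squared residuals) can be applied to two hypothetical residual series. Given DW ≈ 2 × (1 − r), values well below 2 point to positive serial correlation and values well above 2 to negative serial correlation; the formal decision still requires the DW critical values.

```python
def durbin_watson(residuals):
    """DW = sum of squared first differences of residuals / sum of squared residuals."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals: errors that persist in sign vs. errors that flip sign each period
dw_persistent = durbin_watson([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])
dw_alternating = durbin_watson([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])
```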

Autoregressive (AR) Models

Definition: Uses past values of dependent variables as independent variables

First-order, AR(1): xt = b0 + b1x(t-1) + εt

p-order, AR(p): xt = b0 + b1x(t-1) + b2x(t-2) + ... + bpx(t-p) + εt (p indicates the number of lagged values)

Covariance Stationary (based on ordinary least squares (OLS))

Definition: the time series being modeled is covariance stationary if

Constant and finite expected value: E(yt) = μ

Constant and finite variance: Var(yt) = σ^2

Constant and finite covariance between values at any given lag: cov(y(t), y(t−h)) = E[(y(t) − μ)(y(t−h) − μ)] = λ

Remarks

Stationarity in the past does not guarantee stationarity in the future.

All covariance-stationary time series have a finite mean-reverting level

Violation of assumptions and detecting

Violation of assumptions

Serial correlation: the residuals are correlated instead of being uncorrelated

Autoregressive Conditional Heteroskedasticity (ARCH): the variance of the time series changes over time

Detecting autocorrelation in an AR model and correction

Step 1: H0: No autocorrelation

Step 2: Estimate the AR(1) model xt = b0 + b1x(t-1) + εt and compute the autocorrelations of the residuals

Step 3: t-test to see whether the residual autocorrelations differ significantly from 0

Step 4: If the residual autocorrelations differ significantly from 0, the model is not correctly specified, so we may need to modify it

Correction : Add lagged values

Seasonality: Time series that shows regular patterns of movement within the year

Testing

The 4th autocorrelation in case of quarterly data.

The 12th autocorrelation in case of monthly data

Correcting

Quarterly data: xt = b0+b1x(t-1)+ b2x(t-4)+εt

Monthly data: xt = b0+b1x(t-1)+ b2x(t-12)+εt

Mean Reversion

Definition: a tendency to move towards its mean

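Calculation of the mean-reverting level of xt (AR(1) model): b0/(1 − b1)

The time series will increase if xt < b0/(1 − b1)

The time series will decrease if xt > b0/(1 − b1)

Covariance stationary → |b1| < 1 in AR(1) model → mean reversion

Chain Rule of Forecasting

A one-period-ahead forecast for an AR(1) model: forecast of x(t+1) = b0 + b1xt

A two-period-ahead forecast for an AR(1) model: forecast of x(t+2) = b0 + b1 × (forecast of x(t+1))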
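The chain rule of forecasting and the mean-reverting level for an AR(1) model can be sketched as below. The coefficient values are hypothetical and would normally come from an estimated model.

```python
def ar1_forecasts(b0, b1, x_t, steps):
    """Chain rule: each forecast feeds the next, x(t+1) = b0 + b1 * x(t)."""
    forecasts = []
    previous = x_t
    for _ in range(steps):
        previous = b0 + b1 * previous
        forecasts.append(previous)
    return forecasts


def mean_reverting_level(b0, b1):
    """Level at which the AR(1) forecast equals the current value: b0 / (1 - b1)."""
    return b0 / (1.0 - b1)


# Hypothetical AR(1) coefficients with the series currently above its mean-reverting level
forecasts = ar1_forecasts(b0=1.0, b1=0.5, x_t=4.0, steps=2)
level = mean_reverting_level(b0=1.0, b1=0.5)
```

Here the current value of 4.0 sits above the mean-reverting level of 2.0, so the one- and two-period forecasts step down toward it.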

Model evaluation

In-sample forecast errors: the residuals within the sample period used to estimate the model

Out-of-sample forecast errors: the differences between actual and predicted values outside the sample period used to estimate the model

Root mean squared error (RMSE) criterion: RMSE is the square root of the average squared error, and the model with the smallest RMSE is judged the most accurate
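A minimal sketch of the RMSE criterion, comparing two sets of hypothetical out-of-sample forecasts against the same actual values:

```python
import math


def rmse(actual, predicted):
    """Square root of the average squared forecast error."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical out-of-sample data and forecasts from two competing models
actual = [1.0, 2.0, 3.0, 4.0]
rmse_model_a = rmse(actual, [1.0, 2.0, 3.0, 6.0])  # one large miss
rmse_model_b = rmse(actual, [1.5, 2.5, 3.5, 4.5])  # small, consistent misses
```

Because errors are squared before averaging, a single large miss is penalized more heavily than several small ones.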

Reasons for instability of regression coefficients

Financial and economic relationships are inherently dynamic

There is a tradeoff between reliability and stability

Models estimated with shorter time series are usually more stable but less reliable

Moving Average and ARMA model

Moving Average(MA)

An n-period simple moving average: though often useful in smoothing out a time series, it may not be the best predictor of the future

A qth-order moving-average model (MA(q))

The order q of an MA model can be determined using the fact that if a time series is a moving-average time series of order q, its first q autocorrelations are nonzero while autocorrelations beyond the first q are zero

The autocorrelations of most autoregressive time series start large and decline gradually, whereas the autocorrelations of an MA(q) time series suddenly drop to 0 after the first q autocorrelations

ARMA model

Limitations

The parameters in ARMA models can be very unstable

Determining the AR and MA order of the model can be difficult

Even with their additional complexity, ARMA models may not forecast well

Random Walks and Unit Roots

Random walks

Simple random walk

Definition: A time series in which the value of the series in one period is the value of the series in the previous period plus an unpredictable random error

A special AR(1) model with b0=0 and b1=1

The best forecast of xt is xt-1

Random walk with a drift

A random walk with an intercept term that is not equal to zero (b0 ≠ 0): xt = b0 + x(t-1) + εt

Expected to increase or decrease by a constant amount (b0 ≠ 0) in each period

Random walk vs. covariance stationary

A random walk is not covariance stationary because its mean-reverting level is undefined: b0/(1 − b1) = b0/0

Least squares regression cannot be used to estimate an AR(1) model on a time series that is actually a random walk

Dickey-Fuller (DF) test for unit root

Unit root

Definition: The time series is said to have a unit root if the lag coefficient is equal to one (b1=1) and will follow a random walk process

Testing for a unit root can be used to test whether a time series is nonstationary, but a t-test of the hypothesis that b1 = 1 in the AR model is invalid

Testing of AR model: If autocorrelations at all lags are statistically indistinguishable from zero, the time series is stationary

Steps of DF test

Step 1: start with an AR(1) model: xt = b0 + b1x(t-1) + εt

Step 2: subtract x(t-1) from both sides: xt − x(t-1) = b0 + (b1 − 1)x(t-1) + εt, i.e., xt − x(t-1) = b0 + g1x(t-1) + εt, where g1 = b1 − 1

Step 3: test whether g1 = 0. H0: g1 = 0; Ha: g1 < 0

Decision rule

If fail to reject H0, there is a unit root and the time series is non-stationary

If we can reject the null, the time series does not have a unit root and is stationary

First differencing

Purpose: to transform a time series that has a unit root to a covariance stationary time series

Steps

Step 1: Subtract x(t-1) from both sides of the random walk model: xt − x(t-1) = x(t-1) − x(t-1) + εt = εt

Step 2: Define yt = xt − x(t-1), so yt = εt (or yt = b0 + εt for a random walk with drift)

Consequence: yt is a covariance stationary variable with a finite mean-reverting level of 0/(1 − 0) = 0 (or b0/(1 − 0) = b0 with drift)

Autoregressive Conditional Heteroskedasticity(ARCH)

Definition: ARCH is conditional heteroskedasticity in AR models. When ARCH exists, the standard errors of the regression coefficients in AR models are incorrect, and the hypothesis tests of these coefficients are invalid

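ARCH(1) model: εt^2 = a0 + a1ε(t-1)^2 + ut, estimated on the squared residuals of the time-series model

ut is the error term

If the coefficient a1 is statistically significantly different from 0, the time series is ARCH(1)

If a time series model has ARCH(1) errors, generalized least squares must be used to develop a predictive model

Predicting variance with ARCH models: predicted variance for period t+1 = a0 + a1εt^2, using the estimated coefficients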
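Variance prediction with an ARCH(1) model can be sketched as follows, assuming the usual specification in which the squared residual is regressed on its own first lag. The coefficient values and the residual are hypothetical.

```python
def predict_variance(a0, a1, last_residual):
    """ARCH(1) forecast: next-period error variance = a0 + a1 * (current residual)^2."""
    return a0 + a1 * last_residual ** 2


# Hypothetical estimated ARCH(1) coefficients
calm = predict_variance(a0=0.5, a1=0.25, last_residual=0.0)
after_shock = predict_variance(a0=0.5, a1=0.25, last_residual=2.0)
```

A large residual in the current period raises the predicted variance for the next period, which is the volatility clustering that ARCH is meant to capture.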

Regressions with More than One Time Series

DF test

If none of the time series has a unit root, linear regression can be safely used

If only one time series has a unit root, linear regression cannot be used

If both time series have a unit root

If the two series are cointegrated, linear regression can be used

If the two series are not cointegrated, linear regression cannot be used

Cointegration

Definition: two time series have long-term financial or economic relationship so that they do not diverge from each other without bound in the long run

Dickey-Fuller Engle-Granger test (DF-EG test)

H0: no cointegration; Ha: cointegration

If we cannot reject the null, we cannot use multiple regression

If we can reject the null, we can use multiple regression

Fin-tech

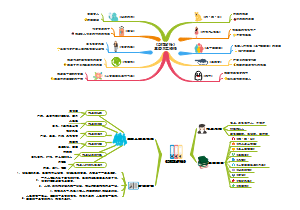

Machine Learning

Introduction to Machine Learning

Overview

Definition: Machine learning comprises diverse approaches by which computers are programmed to improve performance in specified tasks with experience

Implications for investment management

ML techniques can appear to be opaque or "black box" approaches, which arrive at outcomes that may not be entirely understood or explainable

Statistical vs. Machine Learning

Classification

Supervised Learning

The classification of the output data is labelled in advance, containing matched sets of observed inputs and the associated output

Two categories of problems

Regression problem: the target variable is continuous

Classification problem: target variable is categorical or ordinal (i.e., a ranked category)

Unsupervised Learning

Does not make use of labeled data and is useful for exploring new datasets

Deep Learning and Reinforcement Learning

Comparison

Deep learning nets (DLNs)

Neural networks: consist of nodes (neurons) connected by links, and have three types of layers: an input layer, hidden layers, and an output layer

Deep learning nets: neural networks with many hidden layers (at least 3 but often more than 20 hidden layers)

Steps of DLNs operation

Step 1: take a set of inputs from a feature set (the input layer)

Step 2: pass the inputs to the first hidden layer of neurons with different weights, each of which usually produces a scaled number in the range (0, 1) or (-1, 1)

Step 3: pass the scaled number to the next hidden layer until the output layer produces a set of probabilities of the observation being in any of the target categories

Step 4: assign the observation to the category with the highest probability

Step 5: during training, the weights are determined to minimize a specified loss function
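Steps 1 to 4 above amount to a forward pass through the network. The sketch below uses a single hidden layer for brevity (a deep learning net would stack many), a sigmoid activation to scale hidden outputs into (0, 1), and a softmax output layer; these choices and all weights are illustrative assumptions, and training (step 5) is not shown.

```python
import math


def sigmoid(z):
    """Scale a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    """Convert output-layer scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Weighted sum of inputs for each neuron in a layer."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(features, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer -> output probabilities -> predicted category."""
    hidden = [sigmoid(z) for z in dense(features, hidden_w, hidden_b)]
    probabilities = softmax(dense(hidden, out_w, out_b))
    return probabilities, probabilities.index(max(probabilities))


# Hypothetical weights; in practice they are learned by minimizing a loss function
probs, label = forward(
    features=[1.0, 2.0],
    hidden_w=[[0.5, -0.2], [0.1, 0.3]],
    hidden_b=[0.0, 0.0],
    out_w=[[1.0, -1.0], [-1.0, 1.0]],
    out_b=[0.0, 0.0],
)
```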

Evaluation of Performance

The dataset is typically divided into three non-overlapping samples

Training sample used to train the model, "in-sample"

Validation sample for validating and tuning the model

Test sample (out-of-sample) for testing the model's ability to predict well on new data

Three types of models

Definitions

Underfitting: a model does not capture the relationships in the data

Overfitting: a model fits the training data to such a degree of specificity that it begins to incorporate noise coming from quirks or spurious correlations

Generalization(an objective): a model retains its explanatory power when predicting using new data (out-of-sample)

Errors

In-sample errors: errors generated by the predictions of the fitted relationship relative to actual target outcomes on the training sample

Out-of-sample errors: errors from either the validation or test samples

Bias error: the degree to which a model fits the training data (underfitting and high in-sample error)

Variance error: how much the model's results change in response to new data from validation and test samples (overfitting and high out-of-sample error)

Base error: due to randomness in the data

Curves to describe errors

Learning curve: plots the accuracy rate (1-error rate) in the validation or test samples against the amount of data in the training sample, which is useful for describing underfitting and overfitting as a function of bias and variance errors

Fitting curve: plots in-sample and out-of-sample error rates against model complexity

Supervised Learning

Penalized Regression

Definition: the regression coefficients are chosen to minimize the sum of squared residuals (SSE) plus a penalty term that increases in size with the number of included features

Penalty term
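The outline does not give the form of the penalty term. As one common example, a LASSO-style penalty adds a hyperparameter lambda times the sum of absolute coefficient values to the SSE; the sketch below assumes that form, and the names and numbers are hypothetical.

```python
def penalized_objective(residuals, coefficients, lam):
    """Assumed LASSO-style objective: SSE + lambda * sum of |coefficients|."""
    sse = sum(e ** 2 for e in residuals)
    penalty = lam * sum(abs(b) for b in coefficients)
    return sse + penalty


# Hypothetical residuals and slope coefficients; a zero coefficient adds no penalty
objective = penalized_objective(
    residuals=[1.0, -2.0], coefficients=[0.5, -1.5, 0.0], lam=2.0
)
```

With lambda set to 0 the objective reduces to the ordinary SSE, so the penalty only matters when lambda is positive.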

Support vector machine (SVM)

K-nearest neighbor (KNN)

Classification and regression tree (CART)

Random Forest

Unsupervised Learning

Principal components analysis (PCA)

K-means clustering

Hierarchical clustering

Big Data Projects

Introduction

Sources of big data

Traditional sources

Financial markets

Businesses

Governments

Non-traditional sources

Individuals

Sensors

Internet of Things (IoT)

Types

Structured data: data that has been organized into a formatted repository

Unstructured data: data in many different forms that doesn't fit conventional data models

Characteristics (4Vs)

Volume

Variety

Velocity

Veracity: credibility and reliability of different data sources

Steps in project

Data Preparation and Wrangling

Definitions

Data preparation (cleansing): the process of examining, identifying, and mitigating errors in raw data

Data wrangling (preprocessing): performs transformations and critical processing steps on the cleansed data to make the data ready for ML model training

Possible errors of data

Incompleteness error (missing data)

Invalidity error: outside of a meaningful range

Inaccuracy error: not a measure of true value

Inconsistent error: conflict with the corresponding data points or reality

Non-uniformity error: not present in an identical format

Duplicate error

Data wrangling

Data transformation

Extraction

Aggregation: consolidating two related variables into one

Filtration: removing irrelevant observations

Selection: removing features that are not needed

Conversion of data of diverse types

Outliers

Criteria for determining outliers

In general, a data value that is outside of 3 standard deviations from the mean

Data values outside of 1.5 IQR are considered as outliers and values outside of 3 IQR as extreme values

Approach to removing outliers

Trimming

Winsorization: extreme values are replaced by the maximum (or minimum) value allowable for that variable
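The difference between trimming and winsorization can be sketched with two small helpers. The bounds are passed in explicitly here; how they are chosen (for example, from percentiles) is left open, and the data are hypothetical.

```python
def trim(values, lower, upper):
    """Trimming: drop observations outside the allowed range."""
    return [v for v in values if lower <= v <= upper]


def winsorize(values, lower, upper):
    """Winsorization: replace extreme observations with the nearest allowed bound."""
    return [min(max(v, lower), upper) for v in values]


# Hypothetical data with one low and one high extreme value
data = [1, 5, 100]
trimmed = trim(data, lower=2, upper=50)
winsorized = winsorize(data, lower=2, upper=50)
```

Trimming shrinks the sample, while winsorization keeps every observation but caps its value.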

Scaling

Normalization: the process of rescaling numeric variables in the range of [0, 1]: (Xi − Xmin)/(Xmax − Xmin)

Standardization: the process of both centering and scaling the variables: (Xi − μ)/σ
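Both scaling methods can be sketched with the standard library. The standardization below uses the sample standard deviation, which is an assumption; a population standard deviation would also fit the definition above.

```python
import statistics


def normalize(values):
    """Rescale to [0, 1]: (x - min) / (max - min)."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]


def standardize(values):
    """Center and scale: (x - mean) / standard deviation (sample version assumed)."""
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)
    return [(v - mean) / stdev for v in values]


# Hypothetical feature values
normalized = normalize([2, 4, 6])
standardized = standardize([2, 4, 6])
```

Normalization is sensitive to outliers because the minimum and maximum set the scale, whereas standardization depends on the mean and standard deviation.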

Preparation for unstructured(text) data

Text Preparation(Cleansing): remove

HTML tags (a regular expression, regex)

Punctuations

Numbers

White spaces

Text wrangling(preprocessing): tokens and tokenization

Text normalization

Lowercasing

Stop words do not carry a semantic meaning and can be removed (e.g., "the", "is" and "a")

Stemming: convert inflected form of a word into its base word

Lemmatization: convert inflected forms of a word into its morphological root

Creation of bag-of-words (BOW): simply collects all the words or tokens without regard to the sequence of occurrence

Building of document term matrix (DTM)

Definition: a matrix in which each row belongs to a document (or text file) and each column represents a term (or token)
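The text pipeline above (cleansing, tokenization, lowercasing, stop-word removal, bag-of-words, DTM) can be sketched with the standard library. The stop-word list is limited to the three examples from the outline, stemming and lemmatization are omitted, and the sample documents are hypothetical.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "is", "a"}  # illustrative list only


def tokenize(text):
    """Cleanse and normalize a text, then split it into tokens."""
    text = re.sub(r"<[^>]+>", " ", text)   # remove HTML tags
    text = text.lower()                    # lowercasing
    text = re.sub(r"[^a-z\s]", " ", text)  # remove punctuation and numbers
    return [token for token in text.split() if token not in STOP_WORDS]


def document_term_matrix(documents):
    """Rows are documents, columns are terms, cells are token counts."""
    bags = [Counter(tokenize(doc)) for doc in documents]  # one bag-of-words per document
    vocabulary = sorted({term for bag in bags for term in bag})
    matrix = [[bag[term] for term in vocabulary] for bag in bags]
    return vocabulary, matrix


# Hypothetical documents
docs = ["<p>The market is up 3%!</p>", "Market down, market volatile."]
vocab, dtm = document_term_matrix(docs)
```

The bag-of-words step discards word order, so the matrix only records how often each term appears in each document.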

Data Exploration

Exploratory data analysis (EDA)

Definition: summarize and observe data with exploratory graphs, charts, and other visualization

Structured data

Unstructured (text) data

Text classification: classify texts into different classes with supervised ML approaches

Topic modeling: group the texts into topic clusters with unsupervised ML approaches

Sentiment analysis: predicts sentiment of the texts using both supervised and unsupervised approaches

Feature selection

Definition: a process of selecting only pertinent features from the dataset for ML model training

Structured data

Statistical measures include the correlation coefficient and information-gain measures (i.e., R-squared value from regression analysis)

The features can then be ranked using this score and either retained or eliminated from the dataset

Unstructured (text) data

Frequency measures: remove noise features

Chi-square test: test the independence of two events

Mutual information (MI)

The MI value will be equal to 0 if the tokens distribution in all text classes is the same

The MI value approaches 1 as the token in any one class tends to occur more often in only that particular class of text

Feature engineering

Definition: a process of creating new features by changing or transforming existing features (e.g., combining "EPS" and "price" to produce a new feature, "P/E")

Unstructured (text) data

Numbers

N-grams: identify discriminative multi-word patterns and keep them intact

Named entity recognition (NER): an extensive procedure available as a library or package

Part of speech (POS): tag every token in the text with a corresponding part of speech
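Of the text feature-engineering techniques above, n-grams are simple enough to sketch directly. Joining the words with an underscore is one way to keep a multi-word pattern intact as a single token; that convention and the sample tokens are assumptions for illustration.

```python
def ngrams(tokens, n):
    """Return all runs of n consecutive tokens, joined into single tokens."""
    return ["_".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Hypothetical token sequence
bigrams = ngrams(["stock", "market", "is", "up"], 2)
```

A sequence shorter than n yields an empty list, so short texts contribute no n-grams of that order.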

Summary

Model Training

Method selection: decide which ML method(s) to incorporate

Supervised or unsupervised learning

Type of data

Size of data

Performance evaluation: quantify and understand a model's performance

Binary classification models

Error analysis

Confusion matrix

Calculation of ratios

Additional two metrics

Precision (P)= TP/(TP+FP)

Recall(R)= TP/(TP+FN)

Two overall performance metrics

Accuracy = (TP + TN)/(TP + FP + TN + FN)

F1 score: the harmonic mean of precision and recall; F1 score = (2 × P × R)/(P + R)

Receiver operating characteristic (ROC) curve

False positive rate (FPR)=FP/(TN+FP)

True positive rate (TPR)=TP/(TP+FN)
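The ratios above all derive from the four cells of the confusion matrix. A minimal sketch, with hypothetical counts:

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute the performance ratios from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # identical to the true positive rate
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "f1": (2 * precision * recall) / (precision + recall),
        "fpr": fp / (tn + fp),
        "tpr": recall,
    }


# Hypothetical confusion matrix: 40 TP, 10 FP, 45 TN, 5 FN
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
```

Each point on the ROC curve is one (FPR, TPR) pair, obtained by recomputing these counts at a different classification threshold.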

Continuous data prediction: Root mean squared error (RMSE)

Tuning: undertake decisions and actions to improve model performance

Parameters: learned from the training data as part of the training process, and dependent on the training data

Hyperparameters: manually set and tuned, and not dependent on the training data